Epistemic status: Practising thinking aloud. There might be an important question here, but I might be making a simple error.

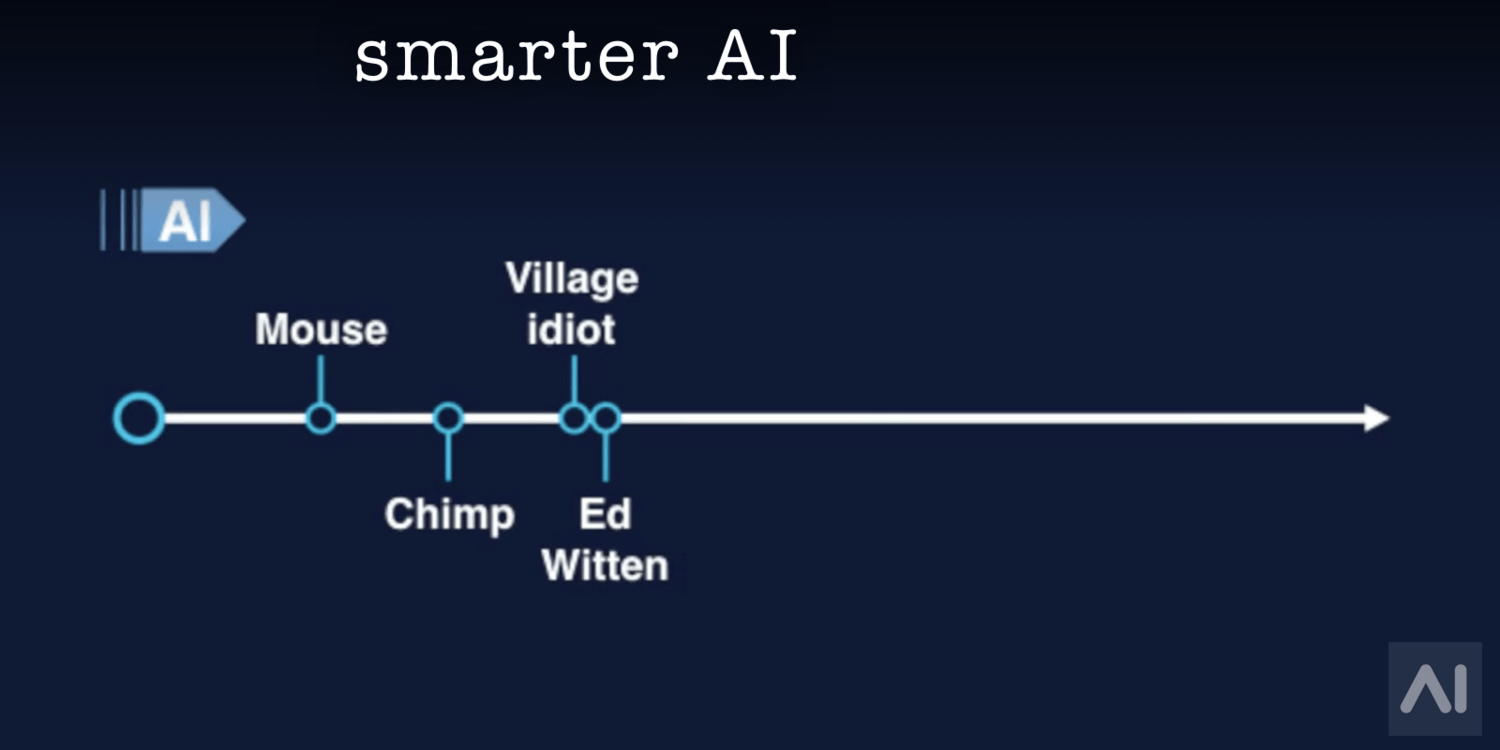

There is a lot of variance in general competence between species. Here is the standard Bostrom/Yudkowsky graph to display this notion.

There’s a sense that while some mice are more genetically fit than others, they’re broadly all just mice, bound within a relatively narrow range of competence. Chimps should not be worried about most mice, in the short or long term, but they also shouldn’t worry especially so about peak mice—there’s no incredibly strong or cunning mouse they ought to look out for.

However, my intuition is very different for humans. While I understand that humans are all broadly similar, that a single human cannot have a complex adaptation that is not universal [1], I also have many beliefs that humans differ massively in cognitive capacities in ways that can lead to major disparities in general competence. The difference between someone who understands calculus and someone who does not is the difference between someone who can build a rocket and someone who cannot. I’ve tried to teach people that kind of math, and have sometimes succeeded, and sometimes failed to teach even basic fractions.

I can try to operationalise my hypothesis: if the average human intelligence were lowered to be equal to an IQ of 75 in present day society, that society could not have built rockets or done a lot of other engineering and science.

(Sidenote: I think the hope of iterated amplification is that this is false. That if I have enough humans with hard limits to how much thinking they can do, stacking lots of them can still produce all the intellectual progress we’re going to need. My initial thought is that this doesn’t make sense, because there are many intellectual feats like writing a book or coming up with special relativity that I generally expect individuals (situated within a conducive culture and institutions) to be much better at than groups of individuals (e.g. companies).

This is also my understanding of Eliezer’s critique, that while it’s possible to get humans with hard limits on cognition to make mathematical progress, it’s by running an algorithm on them that they don’t understand, not running an algorithm that they do understand, and only if they understand it do you get nice properties about them being aligned in the same way you might feel many humans are today.

It’s likely I’m wrong about the motivation behind Iterated Amplification though.)

This hypothesis doesn’t imply that someone who can do successful abstract reasoning is strictly more competent than a whole society of people who cannot. The Secret of our Success talks about how smart modern individuals stranded in forests fail to develop basic food preparation techniques that other, primitive cultures were able to build.

I’m saying that a culture with no people who can do calculus will in the long run score basically zero against the accomplishments of a culture with people who can.

One question is why we’re in a culture so precariously balanced on this split between “can take off to the stars” and “mostly cannot”. An idea I’ve heard is that if a culture is easily able to reach technological maturity, it will come later than a culture that is barely able to reach technological maturity, because evolution works over much longer time scales than culture + technological innovation. As such, if you observe yourself to be in a culture that is able to reach technological maturity, you’re probably “the stupidest such culture that could get there, because if it could be done at a stupider level then it would’ve happened there first.”

As such, we’re a species whereby if we try as hard as we can, if we take brains optimised for social coordination and make them do math, then we can just about reach technical maturity (i.e. build nanotech, AI, etc).

That may be true, but the question I want to ask about is what is it about humans, culture and brains that allows for such high variance within the species, that isn’t true about mice and chimps? Something about this is still confusing to me. Like, if it is the case that some humans are able to do great feats of engineering like build rockets that land, and some aren’t, what’s the difference between these humans that causes such massive changes in outcome? Because, as above, it’s not some big complex genetic adaptation some have and some don’t. I think we’re all running pretty similar genetic code.

Is there some simple amount of working memory that’s required to do complex recursion? Like, 6 working memory slots makes things way harder than 7?

I can imagine that there are many hacks, and not a single thing. I’m reminded of the story of Richard Feynman learning to count time, where he’d practice being able to count a whole minute. He’d do it while doing the laundry, while cooking breakfast, and so on. He later met the mathematician John Tukey, who could do the same, but they had some fierce disagreements. Tukey said you couldn’t do it while reading the newspaper, and Feynman said he could. Feynman said you couldn’t do it while having a conversation, and Tukey said he could. They then both surprised each other by doing exactly what they said they could.

It turned out Feynman was hearing numbers being spoken, whereas Tukey was visualising the numbers ticking over. So Feynman could still read at the same time, and his friend could still listen and talk.

The idea here is that if you’re unable to use one type of cognitive resource, you may make up for it with another. This is probably the same situation as when you make trade-offs between space and time in computational complexity.

So I can imagine different humans finding different hacky ways to build up the skill to do very abstract truth-tracking thinking. Perhaps you have a little less working memory than average, but you have a great capacity for visualisation, and primarily work in areas that lend themselves to geometric / spatial thinking. Or perhaps your culture can be very conducive to abstract thought in some way.

But even if this is right I’m interested in the details of what the key variables actually are.

What are your thoughts?

[1] Note: humans can lack important pieces of machinery.

A mouse brain has ~75 million neurons, a human brain ~85 billion neurons. The standard deviation of human brain size is ~10%. If we think of that as a proportional increase rather than an absolute increase in the # of neurons, that’s ~74 standard deviations of difference. The correlation between # of neurons and IQ in humans is ~0.3, but that’s still a massive difference. Total neurons/computational capacity does show a pattern somewhat like that in the figure. Chimps’ brains are a factor of ~3x smaller than humans, ~12 standard deviations.
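The standard-deviation figures above come from treating a ~10% standard deviation as a multiplicative step, so the gap between two brain sizes is log(ratio) / log(1.1). A rough sketch of that arithmetic, using only the neuron counts quoted in the comment (the function name `sd_gap` is made up for illustration):

```python
import math

def sd_gap(larger, smaller, sd_fraction=0.10):
    """Number of proportional standard deviations separating two sizes.

    Assumes each standard deviation multiplies size by (1 + sd_fraction),
    which is the "proportional increase" reading used in the comment.
    """
    return math.log(larger / smaller) / math.log(1.0 + sd_fraction)

# Figures quoted above: ~85 billion human neurons, ~75 million mouse neurons.
human_vs_mouse = sd_gap(85e9, 75e6)
# Chimp brains are quoted as ~3x smaller than human brains.
human_vs_chimp = sd_gap(3.0, 1.0)

print(f"human vs mouse: {human_vs_mouse:.1f} SDs")  # roughly 74
print(f"human vs chimp: {human_vs_chimp:.1f} SDs")  # roughly 12
```

Under the alternative, absolute reading (each SD adds 10% of the human value), the same gaps would be far smaller, which is why the comment specifies the proportional one.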

Selection can cumulatively produce gaps that are large relative to intraspecific variation (one can see the same relationships even more blatantly considering total body mass). Mice do show substantial variation in maze performance, etc.

And the cumulative cognitive work that has gone into optimizing the language, technical toolkit, norms, and other factors involved in human culture and training is immensely beyond that of mice (and note that human training of animals can greatly expand the set of tasks they can perform, especially with some breeding to adjust their personalities to be more enthusiastic about training). Humans with their language abilities can properly interface with that culture, dwarfing the capabilities both of small animals and of people in smaller, earlier human cultures with less accumulated technology or economies of scale.

Hominid culture took off enabled by human capabilities [so we are not incredibly far from the minimum need for strongly accumulating culture, the selection effect you reference in the post], and kept rising over hundreds of thousands and millions of years, at accelerating pace as the population grew with new tech, expediting further technical advance. Different regions advanced at different rates (generally larger connected regions grew faster, with more innovators to accumulate innovations), but all but the smallest advanced. So if humans overall had lower cognitive abilities there would be slack for technological advance to have happened anyway, just at slower rates (perhaps manyfold), accumulating more by trial and error.

Human individual differences are also amplified by individual control over environments, e.g. people who find studying more congenial or fruitful study more and learn more.

Four factors of some relevance:

First, humans aren’t at equilibrium; as you point out, our environment has shifted much more quickly than evolution has time to catch up with. So we should expect that many analyses that make sense at equilibrium aren’t correctly describing what’s happening now.

Second, while it seems like “humans are very different yet mice are all the same,” this is often because it’s easy to track the differences in humans but difficult to track the differences in mice. What fraction of mice become parents (a decent proxy for the primary measure of success, according to evolution)? Would it look like the core skills of being a mouse (finding food, evading predators, sociability, or so on) have variance comparable to the human variation in intelligence? What fraction of humans become parents?

Third, while we have some evidence that humans are selected for intelligence (like the whole skull/birth canal business), intelligence is just one of many traits that are useful for humans, and we don’t have reason to believe this is the equilibrium that would result if intelligence were the only determinant of fitness. Consider Cochran et al on evidence for selection for intelligence for Ashkenazi Jews; they estimate that parents had perhaps a 1 IQ point edge over non-parents for the last ~500 years (with lower estimates on the heritability of intelligence having to only slightly increase that number).

Fourth, rapid population growth generally amplifies variance along dimensions that aren’t heavily selected for if the population growth is accomplished in part by increasing the number of parents.

Half formed thoughts towards how I think about this:

Something like Turing completeness is at work, where our intelligence gains the ability to loop in on itself, and build on its former products (eg definitions) to reach new insights. We are at the threshold of the transition to this capability, half god and half beast, so even a small change in the distance we are across that threshold makes a big difference.


Hunter-gathering probably needed skills that are harder to AI-replace *for the common individual* even if farmer societies can use their greater numbers to accumulate more technology. This is because farmer societies move around less and have more specialized labor, making life require more narrow tasks and less general problem solving. The latter is what we call “intelligence”. The 21st century is reversing this trend rapidly with automation.

Farming existed almost all over the world for long enough for natural selection to matter but not long enough for our DNA and cultures to completely “forget” life as a hunter-gatherer. Modern society is (most likely) a mix of ~10 hunter-gatherer phenotypes to ~90 farmers. The farmer phenotype has a lot less interest in asking “why”. The “intelligence” difference between a person who asked “why” since age 3 and one who didn’t is a result of massive differences in training. Same with any other talent such as violin.

No non-human animal species with a well-studied intelligence had a sudden transition in the “window” of ~10000 years ago that drastically changed the skills needed to get by.

we cannot conclude “that a single human cannot have a complex adaptation that is not universal”

You seem to have missed an important word, so I bolded it for you. A reproductively isolated population is a very different case. For example, a bunch of finches got stuck in the Galapagos a few million years ago; you might have heard of them.

I think there’s a lot of variance in the intelligence of animals too. (I was a veterinary surgeon and am definitely an “animal person”.)

Variance in human intelligence, (but how are you judging that? - ability to learn and repeat, ability to problem solve?) but anyway a quick list …

Genetics. Roll a multi-faced (Humans have about 20,000 to 23,000 genes. - Merck manual) dice, roll another one. That’s your randomly selected DNA.

Interactions with others. - positive influences on your life. People that teach and explain. (when kids are at that “why” stage they should be answered with quality information).

Opportunity and Stimulation. - exposure to knowledge/education/new experiences.

Environmental factors. - Nutrition. Exposures to negative influences (disease, pollution)

Attitude—personality, desire to learn/interest in a subject.

Just a few thoughts.

I have seen articles that track IQ of countries in the Northern Hemisphere vs countries along the equator. The IQ of people in colder countries is significantly higher than along the equator. Maybe historically people who lived in cold climates had to struggle to survive against the climate and this caused them to exercise a larger % of the brain in order to survive??

You have to struggle to survive even harder in hotter temperatures or at least that is how I feel. Nowadays, I associate higher temperatures with an invitation to leisure and laziness somehow. Also it happens that the countries along the Equator are somewhat less developed or have been dealing with political, climate, economic and health issues most of their existence.

In terms of countries near the equator, are you seeing cause or effect? People in equatorial regions should have been able to live off the land (eat and use plants that they did not have to grow). In northern countries people either hunted to survive or became an agriculture-based society. Either way they would have put in more planning and effort. Repeating this for a few hundred years might result in more creativity and reasoning ability.

The sample size isn’t big enough: nearby countries are too strongly coupled with each other. Regions are closer to independent. But the only major regions were Europe, Middle East, East Asia, India, West Africa, East Africa, South Africa, and several in the new world. It’s hard to form statistics around such a small number.

Of these, four enjoyed “top of the world” civilization status at some point in time: the Middle East + North Africa, Europe, China, and India. Mesoamerica lived independently until it got destroyed by Europe, so it is hard to place in a global hierarchy. This list is pretty random; there is no evidence for or against an “avoidance of the equator”.

And it keeps moving. After the industrial revolution, the “top of the world” in terms of innovation moved from England to New York (Edison days) and most recently to Silicon Valley.

But why does the king of the hill keep changing? What breaks the technology/resource extraction/warfare feedback? It’s basically crumbling infrastructure combined with regulatory capture at all levels of institutions. The trouble is the timescales are now so fast that Silicon Valley is already elderly. There are 5 gas-tank apps. Many startups use the same recycled formula with social media, blockchains, “Uber for X”, etc rather than addressing new problems. We already have to go to wherever is “next” (which may or may not be in the same physical area) if we want to use our skills in a young blossoming community.

I have my own (unoriginal) answer, outlined in this post.

In short, I think it’s important to distinguish learning ability and competence. The reason why Magnus Carlsen towers above me in chess is because he has more practice, not because he’s superintelligent.

However, I also think that even putting this distinction aside, we shouldn’t be surprised by large variation. If we think of the brain like a machine, and cognitive deficits as broken parts in the machine, then it is easy to see how distributed cognitive deficits can lead to wide variation.

My theory is that 4 is enough, and the extra that many people have is useful overkill: it doesn’t hurt anything, and extra memory makes things easier and faster.

So, first, note that for computing pairwise operations, you only need a stack as high as 3 (so long as additional future inputs can come from somewhere else). If you’ve ever worked with a stack-based calculator, like one that supports Reverse Polish notation (RPN), or a stack-based computer language, you probably found this out empirically, but you might have also known it from the fact that early stack-based calculators had only 3 registers and that was good enough.

Well, almost. With 3 registers you can only carry forward a single term between operations and only perform scalar operations. With 4 registers to construct the stack you can do pairwise operations of two variables or carry forward an additional term. But more importantly in practice having only 3 registers is annoying and although you can theoretically do whatever you want it requires careful ordering of operations to avoid a stack overflow. With 4 you rarely have to think ahead, which is nice: you just perform the operations and having the extra register lets you get into and out of near overflows without actually running out of space and having to start over.

More registers are nice, but registers cost money, and for a long time stack-based calculators settled on using 4 registers because it was the best balance of cost, functionality, and flexibility. Again, 3 was enough, but annoying enough to work with that everyone was happy to pay for 4, but few were willing to pay for more.
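To make the register-count point concrete, here is a minimal sketch of an RPN evaluator that tracks how deep the stack gets and refuses to go past a fixed number of registers. The names `eval_rpn` and `max_depth` are illustrative only, and raising an error on overflow is an assumption of this sketch rather than a description of how any particular calculator behaves.

```python
import operator

# Binary (pairwise) operations only, matching the discussion above.
OPS = {
    "+": operator.add,
    "-": operator.sub,
    "*": operator.mul,
    "/": operator.truediv,
}

def eval_rpn(expr, max_depth=4):
    """Evaluate a space-separated RPN expression.

    Returns (result, peak_stack_depth). Raises OverflowError if a push
    would need more than max_depth registers.
    """
    stack = []
    peak = 0
    for token in expr.split():
        if token in OPS:
            right = stack.pop()
            left = stack.pop()
            stack.append(OPS[token](left, right))
        else:
            if len(stack) == max_depth:
                raise OverflowError(f"needs more than {max_depth} registers")
            stack.append(float(token))
        peak = max(peak, len(stack))
    if len(stack) != 1:
        raise ValueError("malformed expression")
    return stack[0], peak

# (1 + 2) * (3 + 4): fits in 3 registers.
print(eval_rpn("1 2 + 3 4 + *"))
# 1 + 2 * (3 + 4), entered left to right: needs 4 registers.
print(eval_rpn("1 2 3 4 + * +"))
# Same value, reordered innermost-first: needs only 2.
print(eval_rpn("3 4 + 2 * 1 +"))
```

The last two calls compute the same value, but only the reordered one would survive `max_depth=3`, which is the "careful ordering of operations" trade-off described above.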

Now, does this mean 4 is enough for complex recursion? I mean, sure, so long as you are tail call optimizing. More just makes life easier and means you don’t have to recurse. Why wouldn’t you want to do that, though?

Well, doing all this assumes you can perform the complex mental operations you want as pairwise operations. And maybe you can do that, but also maybe you don’t know how, so you end up needing more working memory to deal with trying to perform operations you can’t perform “natively”, yet.

And why think of the mind as performing mental operations over working memory at all or that you can develop access to more powerful operations that let you do more with the same memory? That’s a long topic, but I’d recommend this paper as a starting point that melds well with the viewpoint I’ve expressed here.

I’d say that intelligence variations are more visible in (modern) humans, not that they’re necessarily larger.

Let’s go back to the tribal environment. In that situation, humans want to mate, to dominate/be admired, to have food and shelter, and so on. Apart from a few people with mental defects, the variability in outcome is actually quite small: most humans won’t get ostracised, many will have children, only very few will rise to the top of the hierarchy (and even there, tribal environments are more egalitarian than most, so the leader is not that much different from the others). So we might say that the variation in human intelligence (or social intelligence) is low.

Fast forward to an agricultural empire, or to the modern world. Now the top minds can become god emperor, invading and sacking other civilizations, or can be part of projects that produce atom bombs and lunar rockets. The variability of outcomes is huge, and so the variability in intelligence appears to be much higher.

Some points to consider:

1. Has it been demonstrated that variation in intelligence is that much greater for humans than for mice or chimps? This may be true, but you didn’t indicate any references.

Whereas I could imagine a test for chimp intelligence, and even timed maze experiments on mice, the concept of what we mean by intelligence becomes increasingly attenuated as we deal with ever simpler life forms; so that, at some point, and maybe even quite early, experts will begin disagreeing on what they are trying to measure.

2. Modern day humans have a big advantage not only over other animals, but also over our cognitively equivalent ancestors of 12+ thousand years ago. Thanks to the invention of culture, we pass knowledge to our offspring, meaning that knowledge can be accumulated from generation to generation. Variation in cognitive performance isn’t only a consequence of variation in intelligence, but also reflects large differences in the quality of acculturation.

3. I wonder if your decision to compare interspecies variations in intelligence follows from a mistaken analogy. Consider, that intelligence is a human specialty. Other species have their own specialities. For instance, maybe we should be comparing variations in human intelligence with variations in the maximum speed of healthy, adult cheetahs. (I wonder, whether anyone has ever done this?)

4. The idea that we can assign a number to the variation in human intelligence is suspect. True, we can claim that the standard deviation in IQ is 15% of the average IQ value. But it doesn’t follow that a +1 sigma individual is 15% smarter than an average individual, because the IQ scale itself is arbitrary and intelligence has never been defined apart from performance on the test. To make the point another way, a 1-sigma variation in intelligence was arbitrarily set to 15 IQ points purely for convenience. We might just as well have set the mark at 900 IQ points. But that wouldn’t mean that the +1-sigma individual was then ten times as intelligent as average.

Compare the situation with the cheetahs, where a statement like: “the ratio between the standard deviation in maximum running speeds and the average individual’s maximum running speed is .15”, really means something in terms of performance that can be measured with a metre and stopwatch.

What would be wanted to put IQ and maximum speed on par, would be credible results showing that a certain superiority in IQ is closely connected with a certain improved ability in raising fertile offspring to maturity, which is the definition of evolutionary success.
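The point in item 4 about the IQ scale being arbitrary can be shown with a few lines of arithmetic. This is only a sketch: the function name `rescale` and its parameters are hypothetical, and the 900-point standard deviation is the deliberately absurd figure from the comment above.

```python
def rescale(score, old_mean=100.0, old_sd=15.0, new_mean=100.0, new_sd=900.0):
    """Map a score from one (mean, sd) convention to another.

    The person's z-score (distance from the mean in standard deviations)
    is preserved; only the arbitrary units change.
    """
    z = (score - old_mean) / old_sd
    return new_mean + z * new_sd

plus_one_sigma = 115.0
rescaled = rescale(plus_one_sigma)

# Same individual, same rank in the population, very different "ratio".
print(plus_one_sigma / 100.0)  # ratio to the mean on the usual scale
print(rescaled / 100.0)        # ratio to the mean on the 900-point-SD scale
```

The z-score is the only quantity that survives the change of convention, which is why a ratio such as "15% smarter" carries no information of the kind a running-speed ratio does.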

How are you measuring intelligence? Most of the WAIS puts machines far, far ahead of humans. This includes block design, arithmetic, digit symbol, anything that tests memory, etc.

Why do we care about intelligence? We actually care about “mental skills humans have that machines can’t yet replace”. Measuring this doesn’t seem easy, especially if the WAIS favors machines so much.

This just makes me think: if you can see such different output using essentially the same hardware, what kind of difference would improved hardware make?

I think intelligence is much like homosexuality …

… in that, it mostly benefits the tribe/gene-pool, but not the individual.

Being of average intelligence you are more intelligent than a good portion of the population and that helps you, just as being sub-average might be a hindrance in some situations. But being that much more intelligent does not help that individual much.

One does not have to be intelligent to profit from the intelligence of others. “We flew to the moon.” No, *we* did not. We did not discover antibiotics, but we have much more breeding success because of them.

The fact that someone does not understand calculus, does not imply that they are incapable of understanding calculus. They could simply be unwilling. There are many good reasons not to learn calculus. For one, it takes years of work. Some people may have better things to do. So I suggest that your entire premise is dubious—the variance may not be as large as you imagine.

Personally, I learned a semester’s worth of calculus in three weeks for college credit at a summer program (the Purdue College Credit Program circa 1989, specifically) when I was 16. Out of 20ish students (pre-selected for academic achievement), about 15% (see note 1) aced it while still goofing around, roughly 60% got college credit but found the experience difficult, and some failed. Two years later, my freshman roommate (note 2) took the same Purdue course over 16 weeks and failed it. The question isn’t “why don’t some people understand calculus”, but “why do some people learn it easily while others struggle, often failing”.

Note 1: This wasn’t a statistically robust sample. “About 15%” means “Chris, Bill, and I”.

Note 2: That roommate wanted to be an engineer and was well aware that he could only achieve that goal by passing calculus. He was often working on his homework at 1:30 am, much to my annoyance. He worked harder on that course than I had, despite being 18 years old and having a (presumably) more mature brain.

I’m assuming this is a response to my “takes years of work” claim. I have a few natural questions:

1. Why start counting time from the start of that summer program? Maybe you had never heard of calculus before that, but you had been learning math for many years already. If you learned calculus in 3 weeks, that simply means that you already had most of the necessary math skills, and you only had to learn a few definitions and do a little practice in applying them. Many people don’t already have those skills, so naturally it takes them a longer time.

2. How much did you learn? Presumably it was very basic, I’m guessing no differential equations and nothing with complex or multi-dimensional functions? Possibly, if you had gone further, your experience might have been different.

3. Why does speed even matter? The fact that someone took longer to learn calculus does not necessarily imply that they end up with less skill. I’m sure there is some correlation but it doesn’t have to be high. Although slow people might get discouraged and give up midway.

My point isn’t that there is no variation in intelligence (or potential for doing calculus), but that there are many reasons why someone would overestimate this variation and few reasons to underestimate it.

1) True, but by the time that roommate took the class he had had comparable math foundations to what I had had when I took the class. Considering the extra years, arguably rather more. (Upon further thought I realized that I had taken the class in 1988 at the age of 15)

2) That was first-semester calc, Purdue’s Math 161 class (for me and the roommate). Intro calc. Over the next two years I took two more semesters of calc, one of differential equations, and one of matrix algebra. By the time I met my freshman roommate (he was a bit older than me) and he started the calc class, I’d had five semesters of college math (which was all I ever took b/c I don’t enjoy math). Also, that roommate was a below-average college student, but there are people in the world with far less talent than he had.

3) Because time is the only thing you can’t buy. Time in college can be bought, but not cheaply even then. I got through school with good grades and went on to grad school as planned; his plans didn’t work out. Of course time marched on and I had failures of my own.

I agree that there’s more to success than one particular kind of intelligence. Persistence, looks, money, luck, and other factors matter. But my roommate’s calculus aptitude was a showstopper for his engineering ambitions, and I don’t think his situation was terribly uncommon.