It’s like poetry, they rhyme: Lanier and Yudkowsky, Glen and Scott

[EDIT: I’ve edited this post based on feedback. The original is on archive.org.]

I’ve gazed long into the exchanges between Jaron Lanier and Eliezer Yudkowsky, then longer into those between Glen Weyl and Scott Alexander. The two exchanges rhyme in a real but catawampus kind of way. It’s reminiscent of an E.E. Cummings poem. If you puzzle over it long enough, you’ll see it. Or your English teacher explains it to you.

(Understanding “r-p-o-p-h-e-s-s-a-g-r” is not required for the rest of this post, or even really worth your time. But a hint if you want to try: the line preceding the date is something like a pre-ASCII attempt at ASCII art.)

Anyway, for the duration of this post, I will be your hip substitute English teacher. Like any hip substitute, I’m going to skip the boring lesson plan your real teacher left. Instead, we’re going to talk about Freud, bicycles and a computer demo from 1968.

Who are Jaron Lanier and Glen Weyl?

Jaron Lanier has written several books, most recently Ten Arguments for Deleting Your Social Media Accounts Right Now, which distills many of the more salient points from his previous books You Are Not a Gadget, Who Owns the Future and Dawn of the New Everything. Eric Weinstein says there are “four stages of Jaron Lanier”:

The first one, you think he’s a crazy person. Then you’re told that he’s a visionary. Then you realize no no no, he’s probably just faking it. And then, when you get to the final stage you realize he’s pretty much usually always spot on and never cowed by his employer or the group think of Silicon Valley.

If this is your first encounter with Jaron and he sounds crazy, you’re not alone, but maybe give him a second, third and fourth look. Glen works with Jaron closely, and I’m familiar with Glen’s work where it overlaps with Jaron’s.

(Unrelated but perhaps interesting to other neurodivergents who share personality traits with me—I strongly suspect that if I saw Jaron’s Big Five test results, they would look a lot like mine. Part of my interest in him is to see how someone high in openness and low in conscientiousness—he may have had the most overdue book contract ever in American publishing, for example—succeeds in doing things. FWIW two strategies he uses are cross-procrastination and the hamster wheel of pain. I wish he would share more of these.)

The easy rhyme: mentor-protégé lineage

In 2008, Eliezer Yudkowsky and Jaron Lanier had a conversation. Then, in 2021, their protégés Scott Alexander and E. Glen Weyl had their own exchange (see Glen’s initial essay, Scott’s response to Glen and Glen’s response to Scott).

In their joint appearance at the San Francisco Blockchain Summit, Glen adulates Jaron as “one of his heroes.” I’d suspect Glen and Jaron first crossed paths while working at Microsoft Research. Glen and Jaron also frequently write articles together; see AI is an Ideology, Not a Technology and A Blueprint for a Better Digital Society as examples.

Scott describes his early relationship with Eliezer with the term “embarrassing fanboy.” In The Ideology Is Not The Movement Scott facetiously describes Eliezer as the rightful caliph of the rationalist movement. I know it’s a joke, but still.

Humanisms

In his 2010 book, You Are Not A Gadget, Jaron describes the distinction between these two groups of people well, if with uncharitable terminology. Jaron uses the terms “cybernetic totalists” and “digital Maoists” to describe technologists who lack a kind of humanism.

(This is a long excerpt, but it’s worth it.)

An endless series of gambits backed by gigantic investments encouraged young people entering the online world for the first time to create standardized presences on sites like Facebook. Commercial interests promoted the widespread adoption of standardized designs like the blog, and these designs encouraged pseudonymity in at least some aspects of their designs, such as the comments, instead of the proud extroversion that characterized the first wave of web culture. Instead of people being treated as the sources of their own creativity, commercial aggregation and abstraction on sites presented anonymized fragments of creativity as products that might have fallen from the sky or been dug up from the ground, obscuring the true sources.

The way we got here is that one subculture of technologists has recently become more influential than the others. The winning subculture doesn’t have a formal name, but I’ve sometimes called the members “cybernetic totalists” or “digital Maoists.” The ascendant tribe is composed of the folks from the open culture/Creative Commons world, the Linux community, folks associated with the artificial intelligence approach to computer science, the web 2.0 people, the anti-context file-sharers and remashers, and a variety of others. Their capital is Silicon Valley, but they have power bases all over the world, wherever digital culture is being created. Their favorite blogs include Boing Boing, TechCrunch, and Slashdot, and their embassy in the old country is Wired.

Obviously, I’m painting with a broad brush; not every member of the groups I mentioned subscribes to every belief I’m criticizing. In fact, the groupthink problem I’m worried about isn’t so much in the minds of the technologists themselves, but in the minds of the users of the tools the cybernetic totalists are promoting. The central mistake of recent digital culture is to chop up a network of individuals so finely that you end up with a mush. You then start to care about the abstraction of the network more than the real people who are networked, even though the network by itself is meaningless. Only the people were ever meaningful.

When I refer to the tribe, I am not writing about some distant “them.” The members of the tribe are my lifelong friends, my mentors, my students, my colleagues, and my fellow travelers. Many of my friends disagree with me. It is to their credit that I feel free to speak my mind, knowing that I will still be welcome in our world.

On the other hand, I know there is also a distinct tradition of computer science that is humanistic. Some of the better-known figures in this tradition include the late Joseph Weizenbaum, Ted Nelson, Terry Winograd, Alan Kay, Bill Buxton, Doug Englebart, Brian Cantwell Smith, Henry Fuchs, Ken Perlin, Ben Schneiderman (who invented the idea of clicking on a link), and Andy Van Dam, who is a master teacher and has influenced generations of protégés, including Randy Pausch. Another important humanistic computing figure is David Gelernter, who conceived of a huge portion of the technical underpinnings of what has come to be called cloud computing, as well as many of the potential practical applications of clouds.

And yet, it should be pointed out that humanism in computer science doesn’t seem to correlate with any particular cultural style. For instance, Ted Nelson is a creature of the 1960s, the author of what might have been the first rock musical (Anything & Everything), something of a vagabond, and a counter-culture figure if ever there was one. David Gelernter, on the other hand, is a cultural and political conservative who writes for journals like Commentary and teaches at Yale. And yet I find inspiration in the work of them both.

The intentions of the cybernetic totalist tribe are good. They are simply following a path that was blazed in earlier times by well-meaning Freudians and Marxists—and I don’t mean that in a pejorative way. I’m thinking of the earliest incarnations of Marxism, for instance, before Stalinism and Maoism killed millions.

Movements associated with Freud and Marx both claimed foundations in rationality and the scientific understanding of the world. Both perceived themselves to be at war with the weird, manipulative fantasies of religions. And yet both invented their own fantasies that were just as weird.

The same thing is happening again. A self-proclaimed materialist movement that attempts to base itself on science starts to look like a religion rather quickly. It soon presents its own eschatology and its own revelations about what is really going on—portentous events that no one but the initiated can appreciate. The Singularity and the noosphere, the idea that a collective consciousness emerges from all the users on the web, echo Marxist social determinism and Freud’s calculus of perversions. We rush ahead of skeptical, scientific inquiry at our peril, just like the Marxists and Freudians.

Premature mystery reducers are rent by schisms, just like Marxists and Freudians always were. They find it incredible that I perceive a commonality in the membership of the tribe. To them, the systems Linux and UNIX are completely different, for instance, while to me they are coincident dots on a vast canvas of possibilities, even if much of the canvas is all but forgotten by now.

Remember the names from the fifth paragraph quoted above; they’ll come up again in a second.

Jaron and Eliezer in 2008

Jaron and Eliezer weren’t talking about technocracy in 2008, but they found themselves reaching a base case about humanism by way of a discussion on consciousness. Below is an excerpt starting around 26:01. (As a side note, this discussion was so much friendlier than its 2021 counterpart, if you’re keeping track of cultural changes.)

JARON: Imagine you have a mathematical structure that consists of this huge unbounded continuous yield of scalars, and then there’s one extra point that’s isolated. And I think that the knowledge available to us has something like this. There’s this whole world of empirical things that we can study and then there’s this one thing which is that there is there’s also the epistemological channel of experience, you know, which is different, which is very hard to categorize very hard to talk about, and not subject to empirical study...

ELIEZER: … if I can interrupt and ask a question here. Suppose that a sort of “super Dennett” or even if you like a “super Lanier” shows up tomorrow and explains consciousness to you. You now know how it works. Have you lost something or gained something?

JARON: It’s an absurd thing to say. I mean that’s like saying… it’s just a nutty… The whole construction is completely absurd and misses the point.

ELIEZER: Wait, are you ignorant about consciousness or something else going on here? I don’t quite understand. Do you not know how consciousness works are you making some different type of claim?

JARON: I don’t think consciousness works. I don’t think consciousness is a machine. But actually to be precise, I don’t know what it is. And this is where we get back to the point of epistemological modesty. I do know how a lot of things work. I’ve built models of parts of the brain. I’ve worked on machine vision algorithms that can recognize faces better than I can. [Comment: readers may be interested to know that in Dawn of the New Everything Jaron explains he has prosopagnosia]. I’m really interested in the olfactory bulb and worked on that. I’m really really interested in the brain, I just think that’s a different question from consciousness.

ELIEZER: Ok so if you don’t know how consciousness works, where do you get the confidence that it cannot be explained to you? Because this would seem to be a kind of positive knowledge about the nature of consciousness and is sort of very deep and confusing positive knowledge at that.

JARON: (laughs)

ELIEZER: I’m sorry if I’m being too logical for your taste over here.

JARON: You know this is a conversation I’ve been having for decades, for decades, for decades. And it’s a peculiar thing. I mean it’s really like talking to a religious person. It’s so much like talking to a fundamentalist Christian or Muslim. Where you have this… I’m the one who’s saying I don’t know, and you’re the one who’s saying you do know.

ELIEZER: No no no no. I’m not saying you do know. I’m asking you to acknowledge that if you’re ignorant then you can’t go around making positive definite sure statements this can’t be explained to me, that the possibility is absurd. That to me sounds like the religious statement. Saying “I don’t know but I’m willing to find out” is science. Saying “I don’t know and you’re not allowed to explain it to me” is religion.

JARON: But that wasn’t my statement. What I’m suggesting is that… the possibility of experience itself is something separate from phenomena that can be experienced. I mean, or another way to put this is consciousness is precisely the only thing that isn’t reduced if it were an illusion, and that does put it in the class by itself. And, you know, I… and so therefore it becomes absurd to approach it with the same strategies for explanations that we can use for phenomena, which we can study empirically. Now I think that actually makes me a better scientist and I think it makes sort of Hard AI people sort of more superstitious and weird scientists and creates confusion. I submit that humanism is a better it’s a better framework for being a sort of a clear-headed scientist than the sort of Hard AI religion.

ELIEZER: Well, well, of course I consider myself a humanist...

Glen and Scott in 2021

I’m not going to quote from either of their essay responses. Where it really gets revealing is in the comments.

Comment 1156922 - Glen responding to Scott (only most relevant bit quoted, wiki link is mine):

2. I continue to find it challenging to understand how you think that by insisting on design in a democratic spirit I am somehow entirely undermining any possibility of technology playing a role in society. It is very clear from my essay that this is not my intent, as I hope you will agree. And if you are having trouble parsing the distinction, I would suggest the work of John Dewey (founder of progressive education), Douglas Engelbart (inventor of the personal computer), Norbert Wiener (founder of cybernetics), Alan Kay (inventor of the GUI and key pioneer of object-oriented programming), Don Norman (founding figure of human-computer interaction), Terrance Winograd (advisor of Sergei [sic] and Larry) or Jaron Lanier (inventor of virtual reality). Distinctions of exactly the kind I am making have been central to all their thinking and many of them express it in much “air-fairier” humanistic terms than I do. I particularly enjoy Norman’s Design of Everyday Things and his distinction between complexity and complication. If you want empirical illustrations of this distinction, I’d suggest comparing the internet to GPT-3 or the iPhone to the Windows phone. In fact, I would go as far as to challenge you to name a major inventor of a technology as important or widely used as those above who publicly expressed a philosophy of invention and design that didn’t include a significant element of pushback against the rationalism of their age and an emphasis on reusability and redeployment.

Comment 1159339 - Scott responding to Glen (again removing some of the more irrelevant parts):

I’m still having trouble nailing down your position. I’m certainly not accusing you of being anti-technology. I think my current best guess at it is something like “you want expert planners to use very simple ideas and consult the population very carefully before implementing them”. I agree that all else being equal, simple things are better than complex ones and consulting people is better than ignoring them. But I feel like you’re taking an extremely exaggerated straw-mannish view of these things, until you can use it as a cudgel against anyone who tries to think rigorously at all.… What we need is for good technocrats like you to feel emboldened to challenge bad policies using language that acknowledges that some things can be objectively better or more fair than others, and I think you’re hamstringing your own ability to do that.

My usual tactic is to trust non-experts near-absolutely when they report how they feel and what they’re experiencing, be extremely skeptical of their discussions of mechanism and solution, and when in any doubt to default to nonintervention. When my patients say we need to stop a medication because they’re getting a certain side effect, I would never in a million years deny the side effect, but I might tell them that it only lasts a few days and ask if they think they can muddle through it, or that it’s probably not a med side effect and they just have the flu (while also being careful to listen to any contrary evidence they have, and realistically I should warn them about this ahead of time). Or more politically, if someone says “I’m really angry about being unemployed so we need to ban those immigrants who are stealing jobs”, I would respect their anger and sorrow at unemployment, but don’t think they necessarily know better than technocratic studies about whether immigrants decrease employment or not. I think generally talking about how technocrats are insular and biased and arrogant doesn’t distinguish between the thing we want (technocrats to listen to people’s concerns about unemployment and act to help them) and the thing we don’t want (technocrats agreeing to ban immigration in order to help these people). The thing that does help that is finding ways to blend rationality with honesty and compassion—something which I feel like your essay kind of mocks and replaces with even more denunciations of how arrogant those people probably are. I’m not sure *what* I would be trusting the general public with in terms of spectrum auctions. Maybe to report that they want fair distribution and don’t want to unfairly enrich the already privileged? But it hardly takes an anthropological expedition to understand that people probably want this.

Comment 1162634 - Glen responds to Scott:

1. Do you see no discernable or meaningful distinction between the operating philosophy of these pairs: Audrey Tang v. Eliezer Yudokowsky or Paul Milgrom, Douglas Engelbart v. Marvin Minsky, Don Norman v. Sam Altman etc.? Or are you unaware of the former cases?

2. Let me agree that it would be good if we could formalize more what this distinction is and define it more precisely. However, I am trying to understand whether you think that the inability to define the distinction more precisely means we should just ignore the distinction/never attempt to invoke it, or simply that it would be good to work harder on refining the definition?

Comment 1164478 - Another commenter, jowymax, interjects (quoted in part):

2. Formalizing the distinction would be really helpful for me. It seems that you see yourself, Danielle, Audrey, (and Vitalik?) as a new kind of designer—a more human centered designer. At first I thought the distinction your were making between technocrats and yourself was similar to the distinction between an engineer and a product manager. But maybe that’s not right?

Comment 1164942 - Glen responds to jowymax:

I wouldn’t say we are new, at least not within technology...this type of philosophy has been around for ages, at least since Wiener and Engelbart in the 50s-60s and is responsible for most of the tech that we actually use. But within economic mechanism design it is less common, and Audrey is the consummate practitioner (though so was Henry George in the late 19th century). In the tech world the right analog is not engineer v. product manager but engineer v. human-centered designer (those are the most powerful people at Apple, though sadly not at most other tech companies).

The hard rhyme: philosophical camps taking shape

Pointing out the obvious, the names Glen mentions in these comments look familiar from the excerpt of Jaron’s 2010 book quoted above.

Glen’s 2021 list of humanist design influences: John Dewey, Paul Milgrom, Douglas Engelbart, Norbert Wiener, Alan Kay, Don Norman, Terrance Winograd, Jaron Lanier, Henry George, Danielle Allen, Vitalik Buterin and Audrey Tang.

Jaron’s 2010 list of humanist design influences: Joseph Weizenbaum, Ted Nelson, Terry Winograd, Alan Kay, Bill Buxton, Doug Engelbart, Brian Cantwell Smith, Henry Fuchs, Ken Perlin, Ben Schneiderman, Andy Van Dam, Randy Pausch and David Gelernter.

The intersection includes: Douglas (Doug) Engelbart, Alan Kay, Terrance (Terry) Winograd and Jaron Lanier (assuming Jaron would have put himself on his own list).
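The overlap between the two lists can be checked mechanically. The snippet below is only a sketch: the `ALIASES` spelling map and the `normalize` helper are my own illustrative assumptions, and adding Jaron to his own list follows the assumption stated in the paragraph above.

```python
# Hypothetical spelling map so "Doug"/"Douglas" and "Terry"/"Terrance"
# count as the same person. This normalization is an assumption.
ALIASES = {
    "Doug Engelbart": "Douglas Engelbart",
    "Terry Winograd": "Terrance Winograd",
}


def normalize(names):
    """Return the names as a set, with known aliases collapsed."""
    return {ALIASES.get(name, name) for name in names}


glen_2021 = normalize([
    "John Dewey", "Paul Milgrom", "Douglas Engelbart", "Norbert Wiener",
    "Alan Kay", "Don Norman", "Terrance Winograd", "Jaron Lanier",
    "Henry George", "Danielle Allen", "Vitalik Buterin", "Audrey Tang",
])

jaron_2010 = normalize([
    "Joseph Weizenbaum", "Ted Nelson", "Terry Winograd", "Alan Kay",
    "Bill Buxton", "Doug Engelbart", "Brian Cantwell Smith", "Henry Fuchs",
    "Ken Perlin", "Ben Schneiderman", "Andy Van Dam", "Randy Pausch",
    "David Gelernter",
])

# Assumption from the post: Jaron would have put himself on his own list.
jaron_2010_with_self = jaron_2010 | {"Jaron Lanier"}

# Names that appear on both lists (set intersection).
shared = sorted(glen_2021 & jaron_2010_with_self)
print(shared)
# ['Alan Kay', 'Douglas Engelbart', 'Jaron Lanier', 'Terrance Winograd']
```

Without the self-inclusion assumption, the overlap shrinks to three names, since Jaron does not name himself in the 2010 excerpt.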

People that would be outside of this set, I suppose we could call them cybernetic totalists for lack of a more charitable term, seem to include Eric Horvitz, Marvin Minsky, Sam Altman and… Eliezer?

You can see Glen and Jaron are respectively saying that Eliezer and Scott are lacking in a kind of humanism and/or human-centered way of design. Both Eliezer and Scott respond, effectively, by saying that they’re humanists. (In Scott’s case, he doesn’t quite use the word but I believe implies it with statements like “finding ways to blend rationality with honesty and compassion”).

It also seems clear to me that neither side has a good way of defining this distinction, although both sides seem to recognize the need for one (as in comment 1162634 explicitly).

So what is the formal distinction between cybernetic totalists and the people who are not that?

These are likely not the cybernetic totalists you’re looking for

I’m going to use the earliest computer-related name that appears on both Glen’s and Jaron’s lists, Doug Engelbart, and some more specific distinctions made in one of Jaron’s early essays to draw what I believe is a fair boundary separating cybernetic totalists from everyone else. This isn’t science; this is just me having spent a lot of time reading and digesting all of this. Interested parties probably will disagree to an extent, and I’m happy about that so long as I’ve improved the overall quality of the disagreement.

At one point in time, Jaron attempted to formalize what a cybernetic totalist was, but to get there we’ll have to go… all the way back to the year 2000.

In the year 2000… Jaron wrote “One Half A Manifesto” and listed beliefs cybernetic totalists were likely to hold. (If you like reading comments, don’t skip these ones on edge.org.) I’m thinking of this along the lines of DSM criteria, in the sense that some beliefs are evidence in favor of a “diagnosis” but others are more salient when it comes to “diagnostic criteria.” The following three sections contain the concepts I believe are most relevant from his essay, and what I think is a useful way of stating what Glen and Jaron mean by humanism.

Campus imperialism

I’m not sure if Jaron coined this term or not but—purely linguistically speaking—I love it. It’s so parsimonious I’m not sure why Glen didn’t use it in his original essay on technocracy or any of the follow-up, because it elegantly describes a common failure mode of the technocratic mindset. This is especially true if you broaden the term “campus” from the first association many of us have—universities—to include, say, corporate campuses, government agency campuses, think-tank campuses, etc. Jaron uses this term twice.

The first appearance:

...Cybernetic Totalists look at culture and see “memes”, or autonomous mental tropes that compete for brain space in humans somewhat like viruses. In doing so they not only accomplish a triumph of “campus imperialism”, placing themselves in an imagined position of superior understanding vs. the whole of the humanities, but they also avoid having to pay much attention to the particulars of culture in a given time and place.

The second appearance:

Another motivation might be the campus imperialism I invoked earlier. Representatives of each academic discipline occasionally assert that they possess a most privileged viewpoint that somehow contains or subsumes the viewpoints of their rivals. Physicists were the alpha-academics for much of the 20th century, though in recent decades “postmodern” humanities thinkers managed to stage something of a comeback, at least in their own minds. But technologists are the inevitable winners of this game, as they change the very components of our lives out from under us. It is tempting to many of them, apparently, to leverage this power to suggest that they also possess an ultimate understanding of reality, which is something quite apart from having tremendous influence on it.

The Singularity (or something like it) and autonomous machines

The other important bits are the “belief” in a coming singularity and an affinity for autonomous machines. Jaron uses “autonomous machines” in this essay as an umbrella term for a category of technology that includes AI.

Belief #6: That biology and physics will merge with computer science (becoming biotechnology and nanotechnology), resulting in life and the physical universe becoming mercurial; achieving the supposed nature of computer software. Furthermore, all of this will happen very soon! Since computers are improving so quickly, they will overwhelm all the other cybernetic processes, like people, and will fundamentally change the nature of what’s going on in the familiar neighborhood of Earth at some moment when a new “criticality” is achieved—maybe in about the year 2020. To be a human after that moment will be either impossible or something very different than we now can know.

More on autonomous machines:

When a thoughtful person marvels at Moore’s Law, there might be awe and there might be terror. One version of the terror was expressed recently by Bill Joy, in a cover story for Wired Magazine. Bill accepts the pronouncements of Ray Kurtzweil and others, who believe that Moore’s Law will lead to autonomous machines, perhaps by the year 2020. That is the when computers will become, according to some estimates, about as powerful as human brains.

Even more on autonomous machines:

I share the belief of my cybernetic totalist colleagues that there will be huge and sudden changes in the near future brought about by technology. The difference is that I believe that whatever happens will be the responsibility of individual people who do specific things. I think that treating technology as if it were autonomous is the ultimate self-fulfilling prophecy. There is no difference between machine autonomy and the abdication of human responsibility.

Engelbartian humanism (bicycles for the mind)

Let me give you a little background on Doug Engelbart, if you’re not familiar. He’s perhaps best known for The Mother of All Demos. If you haven’t seen that you may want to stop now and give it a few minutes of your time. It’s a demo of the oN-Line System (NLS) that’s like… a real desktop-like computer in 1968. I mean it’s not punch cards, it’s like you’re watching this and you’re like “oh, this is a lot of what my computer does now, but this was in 1968!” NLS was developed by Engelbart’s team at the Augmentation Research Center (ARC) at the Stanford Research Institute (SRI).

I had thought for most of my adult life that Xerox PARC invented the concept of a GUI with all of the rudimentary accoutrements, then at some point Apple and Microsoft stole their ideas. It’s more like the GUI we use now was invented at SRI and then a bunch of people who worked at SRI went to Xerox PARC.

I’ll call what Glen and Jaron mean by humanism “Engelbartian humanism.” That is, humanism in the sense of Engelbart’s goal of “augmenting human intellect,” with the constraint that augmenting human intellect does not mean replacing human intellect. Steve Jobs rephrased and punched up this concept, likening computers to “bicycles for the mind.”

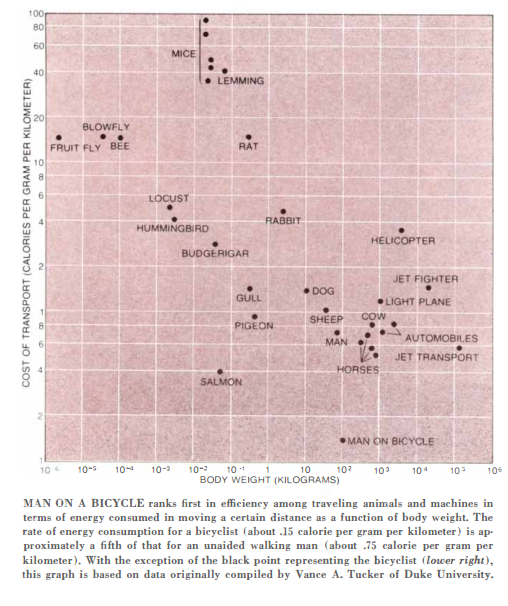

I think one of the things that really separates us from the high primates is that we’re tool builders. I read a study that measured the efficiency of locomotion for various species on the planet. The condor used the least energy to move a kilometer. And, humans came in with a rather unimpressive showing, about a third of the way down the list. It was not too proud a showing for the crown of creation. So, that didn’t look so good. But, then somebody at Scientific American had the insight to test the efficiency of locomotion for a man on a bicycle. And, a man on a bicycle, a human on a bicycle, blew the condor away, completely off the top of the charts.

And that’s what a computer is to me. What a computer is to me is it’s the most remarkable tool that we’ve ever come up with, and it’s the equivalent of a bicycle for our minds.

Chart from Scientific American, 1973. I don’t see condors, but it more or less makes the same point.

At the time, that was probably more of a descriptive statement, but I suspect in 2021 Glen and Jaron would see it as more normative.

Proposed cybernetic totalist diagnostic criteria

I propose four criteria: three positive indicators and one negative. If the total score is 2 or above, you are diagnosed with cybernetic totalism!

+1 if subject believes in the Singularity or something like it

+1 if subject exhibits unchecked tendencies towards campus imperialism

+1 if subject is working on autonomous machines (AI) 50% or more of the time

-1 if subject is working on augmenting human intellect 50% or more of the time

Scoring Scott and Eliezer on the first criterion

I know (1) isn’t true in Scott’s case and strongly suspect it’s no longer true in Eliezer’s. A particularly apt example I recall is from Scott’s post “What Intellectual Progress Did I Make In The 2010s?”

In the 2000s, people debated Kurzweil’s thesis that scientific progress was speeding up superexponentially. By the mid-2010s, the debate shifted to whether progress was actually slowing down. In Promising The Moon, I wrote about my skepticism that technological progress is declining. A group of people including Patrick Collison and Tyler Cowen have since worked to strengthen the case that it is; in 2018 I wrote Is Science Slowing Down?, and late last year I conceded the point. Paul Christiano helped me synthesize the Kurzweillian and anti-Kurzweillian perspectives into 1960: The Year The Singularity Was Cancelled.

(I’ll even go as far as to say Scott’s take here in principle sounds like Jaron’s take on Belief #5 in “One Half A Manifesto.” I’ve already quoted too much, but it’s worth reading.)

I’m less familiar with Eliezer, but judging by these two sources, the Singularity section on yudkowsky.net and his 2016 interview with Scientific American, I would say, no, Eliezer is not a Singularity ideologue.

Scoring Scott and Eliezer on the remaining criteria

On the question of campus imperialism… I’d invite everyone to judge based on their bodies of work. Do you see them dismissing the rest of the culture or carefully trying to understand it? Do they think of themselves as better judges of reality than others based on their proficiency with technology? Would they be willing to impose their judgement of better realities on others without sufficient consent? I would answer no to these questions, but of course I’m biased. In terms of working on AI 50% or more of the time, Eliezer gets a 1 and Scott gets a 0. In terms of augmenting human intellect, the entirety of the rationalist community represents an attempt at augmenting human intellect, even if the “bicycles” it creates are more along the lines of concepts, habits, principles, methods, etc. On the above scale, I would score criteria 1, 2, 3, 4 as follows:

Going easy on Scott and Eliezer (e.g. negligible campus imperialism)

Scott: 0, 0, 0, −1. Total score: −1

Eliezer: 0, 0, 1, −1. Total score: 0

Going hard on Scott and Eliezer (e.g. sufficient campus imperialism)

Scott: 0, 1, 0, −1. Total score: 0

Eliezer: 0, 1, 1, −1. Total score: 1

In either case, they’re both below 2.
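For anyone who wants to check the arithmetic, here is a minimal sketch of the rubric as code. The names (`Subject`, `score`, `THRESHOLD`, and the field names) are hypothetical labels I made up for illustration; the four criteria, the threshold of 2 and the individual scores are the ones given above.

```python
from dataclasses import dataclass

# Diagnosis threshold from the rubric above: a total of 2 or more.
THRESHOLD = 2


@dataclass(frozen=True)
class Subject:
    """One scoring of a person. Field names are illustrative only."""
    name: str
    believes_in_singularity: bool       # criterion 1: +1
    campus_imperialism: bool            # criterion 2: +1
    works_mostly_on_ai: bool            # criterion 3: +1
    works_mostly_on_augmentation: bool  # criterion 4: -1


def score(s: Subject) -> int:
    """Sum the three positive indicators and subtract the negative one."""
    return (
        int(s.believes_in_singularity)
        + int(s.campus_imperialism)
        + int(s.works_mostly_on_ai)
        - int(s.works_mostly_on_augmentation)
    )


def is_cybernetic_totalist(s: Subject) -> bool:
    return score(s) >= THRESHOLD


# "Going easy": negligible campus imperialism.
scott_easy = Subject("Scott", False, False, False, True)
eliezer_easy = Subject("Eliezer", False, False, True, True)

# "Going hard": sufficient campus imperialism.
scott_hard = Subject("Scott", False, True, False, True)
eliezer_hard = Subject("Eliezer", False, True, True, True)

for subject in (scott_easy, eliezer_easy, scott_hard, eliezer_hard):
    print(subject.name, score(subject), is_cybernetic_totalist(subject))
```

The booleans are deliberately crude: the rubric as stated is all-or-nothing per criterion, so the sketch does not try to model partial credit.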

For reference here, I would give Ray Kurzweil, for example, a 3. But I may have some bias there as well.

Conclusion

There are obvious humanistic intentions driving work on AI and especially AI alignment. As Eliezer mentions around 49:09, we want AI to do things like cure AIDS and solve aging, but more importantly we want to make sure a hypothetical AGI at a baseline doesn’t annihilate us as a species. It’s easy for me to see the humanist intentions there even if reasonable people can disagree on the inevitability of AGI.

It also seems obvious to me that we should avoid getting into a campus imperialist mindset. I similarly see how it’s easy to get excited about building cool technology and forget to ask, “is this more like a bicycle or is this more like a Las Vegas gambling machine?”

I don’t fault Glen and Jaron for being a bit cranky and bolshy about pushing back. The business models of Facebook and Twitter don’t align with ethical humanistic design practices, if that latter phrase is to mean anything. I do, however, see Glen and Jaron’s energy as being misdirected at Scott, Eliezer, AI alignment and effective altruism.

After reading this article and the Scott/Weyl exchanges, I’m left with the impression that one side is saying: “We should be building bicycles for the mind, not trying to replace human intellect.” And the other side is trying to point out: “There are no firm criteria by which we can label a given piece of technology a bicycle for the mind versus a replacement for human intellect.”

Perhaps uncharitably, it seems like Weyl is saying to us, “See, what you should be doing is working on bicycles for the mind, like this complicated mechanism design thing that I’ve made.” And Scott is sort of saying, “By what measure are you entitled to describe that particular complicated piece of gadgetry as a bicycle for the mind, while I am not allowed to call some sort of sci-fi exocortical AI assistant a bicycle for the mind?” And then Weyl, instead of really attempting to provide that distinction, simply lists a bunch of names of other people who had strong opinions about bicycles.

Parenthetically, I’m reminded of the idea from the Dune saga that it wasn’t just AI that was eliminated in the Butlerian Jihad, but rather, the enemy was considered to be the “machine attitude” itself. That is, the attitude that we should even be trying to reduce human labor through automation. The result of this process is a universe locked in feudal stagnation and tyranny for thousands of years. To this day I’m not sure if Herbert intended us to agree that the Butlerian Jihad was a good idea, or to notice that his universe of Mentats and Guild Navigators was also a nightmare dystopia. In any case, the Dune universe has lasguns, spaceships, and personal shields, but no bicycles that I can recall.

Let’s take Audrey Tang, Eliezer Yudkowsky, Glen Weyl and Jaron Lanier.

Audrey and Eliezer both created open spaces for web discussion where people can vote (Eliezer did two of those projects), while Glen Weyl decided against doing so when it comes to the Radical Market community, and Jaron Lanier is against online communities to the point of speaking against Wikipedia and the open source movement.

You can make an argument about cybernetic systems, with web discussions where people vote being cybernetic in nature.

The thing that distinguishes Eliezer the most from the others is that Eliezer is a successful fiction author while the others aren’t. You can discuss the merits of writing fiction to influence people, but it seems to me to qualify as choosing humanistic ways of achieving goals.

That sounds to me like a strawman. We are having this discussion on LessWrong, which is a bicycle in the Engelbartian sense. We aren’t having this discussion on Facebook or on Reddit but on software that’s designed by the rationalist community to facilitate debate.

The rationalist community is not about limiting itself to the lines of concepts, habits, principles, methods. I would expect that’s much more likely to be true of Weyl’s RadicalXChange.

Should have mentioned the first time — Jaron is critical of, but not against, Wikipedia or open source.

I’ve been a Wikipedia editor since 2007, and admit that most of his criticisms are valid. Anyone that has, say, over 1000 edits on Wikipedia either knows that it sucks to be a Wikipedia editor or hides how much it sucks because they’re hoping to be an admin at some point and don’t complain about it outside their own heads… in which case they don’t really add new content to articles, they argue about content in articles and modify what other people have already contributed.

His points on open source don’t seem to be any different from what people said in Revolution OS in like 2001: open source and proprietary software can co-exist. E.g., Bruce Perens said his only difference with Richard Stallman was the thought “that free software and non-free software should coexist.” https://www.youtube.com/watch?v=4vW62KqKJ5A#t=49m39s

That’s a fair point, I should have included the software that’s been written as part of the rationalist movement.

Thank you very much for taking the time to write this. Scott Alexander and Glen Weyl are two of my intellectual heroes; they’ve both done a lot for my thinking in economics, coordination, and just how to go about a dialectic intellectual life in general.

So I was also dismayed (to an extent I honestly found surprising) when they couldn’t seem to find a good faith generative dialogue. If these two can’t, then what hope is there for the average Red vs Blue tribe member?

This post gave me a lot of context though, so thanks again 😊