Slaying the Hydra: toward a new game board for AI

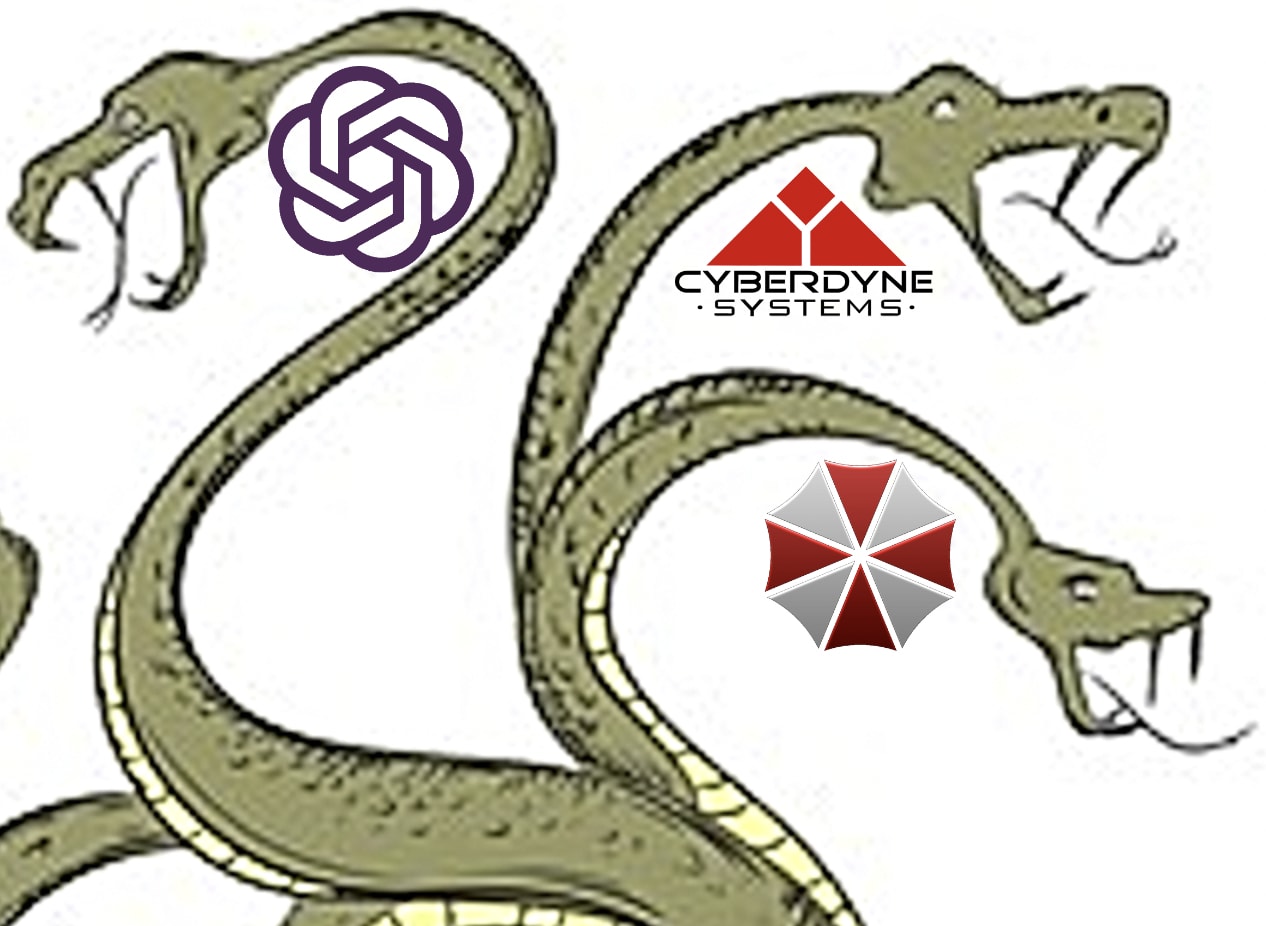

AI Timelines as a Hydra

Think of current timelines as a giant hydra. You can’t see exactly where the head is, and you don’t know whether you’re on the neck of the beast or the body. But you do have some sense of what a hydra is, and of the difficulty of what you’re in for. Wherever the head is, that’s where you get eaten, so you want to kill the beast before you reach it. Say you see a head approaching. Perhaps it’s something being built by Facebook AI, perhaps by Google; it doesn’t matter. You see an opportunity, and you prevent the apocalypse from happening.

Looks like DeepMind was about to destroy the world, but you somehow prevented it. Maybe you convinced some people at the company to halt training; it doesn’t matter. Congratulations! You’ve cut the head off the hydra! However, you seem to remember reading something about how hydras work, and as everyone is celebrating, you notice something weird happening.

So it looks like you didn’t prevent the apocalypse; you just prevented an apocalypse. Maybe Google held off on destroying the world, but now FAIR and OpenAI are in a race of their own. Oh, well. Now it’s time to figure out which path to take, and cut the head off again. Looks like FAIR is a little more dangerous and moving a bit faster, so better stop it now.

Well, this isn’t working. Now there are three heads. You have two new AI companies to deal with, and you still haven’t taken care of that bad OpenAI timeline. Better keep cutting. But it seems the further down you go, the more heads appear.

This is the key problem in finding a good outcome. The problem isn’t the odds of Facebook, OpenAI, or anyone else creating the head of the hydra; it’s that a head appears whichever path you go down. And the further down you go, the harder it becomes to foresee and cut off all of the many heads.

AI as a Chess Game

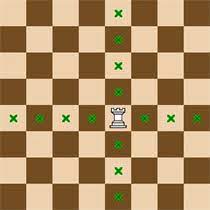

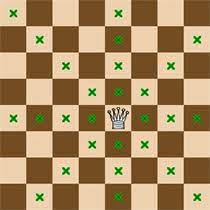

AI is an unstable game. The players keep raising the stakes until, inevitably, someone plays a winning move that ends the game. As time goes on, the number of moves available to each player grows. Controlling the players becomes more and more difficult as the game becomes more and more unstable.

The only way stability returns is for the game to end, which happens when one player executes a winning move. And given our current lack of control or understanding, the easiest winning move to execute is probably simply removing the other players. In this case, an AI gains stability over future timelines by removing humans from the board in some way, intentionally or not, since humans could otherwise create an even more powerful AI. By default, this is by far the easiest way to end the game.

Note that this does not require the AI to intentionally decide to destroy the world, or even to be a full general intelligence with agency, goal-seeking, or coherence. It only requires that destroying the world be the least complex way to end the game. Being the simplest method for ending it also makes it the most probable, because the more complex a strategy is, the harder it is to execute. Winning moves that don’t remove the other pieces, but instead somehow safely prevent players from making such moves in the future, seem more complicated, and thus less likely to occur before a destructive move is played.

Note that this doesn’t rely on deceptive alignment, lack of interpretability, sharp left turns, or any of the other specific problems that have been raised. It doesn’t necessitate any specific problem at all. It’s the way the board is set up. There could hypothetically even be many safe AGIs in this scenario. But if the game keeps being played, eventually someone plays a wrong move. Ten safe, careful moves don’t stop an eleventh move that terminates the game for all other players. And as I see current trajectories, each move increases the number of potential moves available to the players. Put simply, by potential moves I mean that as the power of AIs scales, the number of actions AIs can take will increase. Think of a chess game where more and more pieces become rooks, and then queens.
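
The “eleventh move” point can be made concrete with a deliberately simple model. Assume, hypothetically, that each move carries the same small, independent probability p of being game-ending (p and n are illustrative symbols, not figures from the argument above). The chance that the game has ended after n moves is then:

```latex
P(\text{game over after } n \text{ moves}) = 1 - (1 - p)^{n}
\qquad \text{e.g. } p = 0.01,\; n = 100: \quad 1 - 0.99^{100} \approx 0.634
```

Under this toy model, any per-move risk above zero drives the probability toward 1 as n grows, and if p itself rises as the pieces become more powerful, it gets there faster.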

Eventually, some of those potential moves will include game-ending moves. For instance, in terms of humanity’s option space, we went from unlocking steam power to unlocking nuclear weapons in just a few moves. These moves will likely come faster and faster. Eventually, it will turn into a game of blitz. And anyone who has played blitz chess without sufficient experience knows that you begin making riskier and riskier moves, with a higher and higher likelihood of error as the game continues.

What’s the point of writing this? This understanding has led me to believe five separate things.

1. Current Governance plans will likely fail

Human political governance structures cannot adapt at the speed of progress in AI. Scale this up to international treaties, and it becomes even harder. Human governance structures are slow to incorporate new information and poor at any kind of parallel decision-making. Even when staffed with some of the best and brightest in the field, the structure is too rigid to properly control the situation.

2. Most current Alignment plans are insane

Many have no long-term Alignment plan at all. Of those that do, many (not all) involve taking one of the pieces on the board, having it go supernova, having it control all other pieces on the board, and finding a way for it to also be nice to those other pieces. This is not a plan I foresee working. It might be physically possible, but not humanly possible. An actual alignment solution sent from the future would probably be a gigantic, multi-trillion-parameter matrix. Thinking that humans will find that path on their own is not realistic. As a single intelligence moves up the staircase of intelligence, humans remain at the bottom, looking up.

3. We need a new board

Instead of continuing to look for a solution on the current board, we could focus on creating a new one. This would likely involve Mechanism Design. The new board could be superior enough to the old one that the older pieces would have to join it to maintain an advantage. On this new board, rules could be woven into its fabric, and control of the board could be kept out of the hands of rogue actors. As a result, a new equilibrium could become the default state, and stability and order could be maintained even while growth scales. The two sides of AI, Governance and Technical Alignment, could be merged into true technical governance: information incorporated in real time, collective, intelligent decision-making based on actual outcomes, and our values and interests maintained at the core.

4. Other, more dangerous boards could be created

With distributed computing, new boards might be created anyway if we fail to act. These boards might have no safety by default, and could prove to be an unstoppable force short of a global surveillance state. Resorting to such extremes to preserve ourselves is not ideal, but in the future, governments might find it necessary. And even that might not be enough to stop it.

5. Building a new foundation

Not all the answers have been figured out yet. But I think a lot could be built on this, and we could move toward building a new foundation for AI: something more capable systems could operate on while still adhering to the foundational network. Intelligent, complex systems that adhere to simple rules that keep things in place are the foundation of consensus mechanism design. As AI proliferation continues, AI systems will fall into more and more hands, and the damage one individual can do with them will grow. But the aggregate, the majority of intelligence, could be used to create something far more collectively intelligent than any rogue. This is a form of superintelligence that is much more manageable, monitorable, verifiable, and controllable. It’s the sum of all its parts. And as systems continue to grow in intelligence, the whole will still be able to stay ahead of its parts.

What this provides is governance: true governance of any AI system. It does not require the UN, the US, or any alliance to implement. Those organizations could not possibly move fast enough or adapt to every possible change. It may not even need the endorsement of major AI tech companies. It only has to work.

Conclusion

I don’t think finding a good winning move is realistic, given the game. I think we could maybe find a solution by reexamining the board itself. The current Alignment approach of finding a non-hydra amid a sea of hydra heads does not seem promising. There has been so much focus on the pieces of the game and their nature, but so little attention on the game itself. If we can’t find a winning strategy in the current game, perhaps we need to turn our attention toward a superior game board: one that is better than the current one, so that old pieces will join it willingly, and one with different rules. This is why I think Mechanism Design is so important for Alignment. It’s possible that with new, technically enforced rules, we could create a stable equilibrium by default. I have already proposed my first, naive approach to this, and I intend to keep researching better and better solutions. But I think this area is severely under-researched, and we need to start rethinking the entire nature of the game.

[Cross-posting my comment from the EA Forum]

This post felt vague and confusing to me. What is meant by a “game board”? Are you referring to the world’s geopolitical situation, or the governance structure of the United States, or the social dynamics of elites like politicians and researchers, or some kind of Ethereum-esque crypto protocol, or internal company policies at Google and Microsoft, or US AI regulations, or what?

How do we get a “new board”? No matter what kind of change you want, you will have to get there starting from the current situation that the world is in right now.

Based on your linked post about consensus mechanisms, let’s say that you want to create some crypto-esque software that makes it easier to implement “futarchy” (prediction-market-based government), and then get everyone to start using that new type of government to make wiser decisions, which will then help them govern the development of AI in a better, wiser way. Well, what would this crypto system look like? How would it avoid the pitfalls that have so far prevented the wide adoption of prediction markets in similar contexts? How would we get everyone to adopt this new protocol for important decisions? Wouldn’t existing governments, companies, etc., be hesitant to relinquish their power?

Since there has to be some realistic path from “here” to “there”, it seems foolish to totally write off all existing AI companies and political actors (like the United States, United Nations, etc.). It makes sense that it might be worth creating a totally new system (like a futarchy governance platform, or whatever you are envisioning) from scratch, rather than trying to influence existing systems that can be hard to change. But for your idea to work, at some point the new system will have to influence, absorb, or get adopted by the existing big players. I think you should try to think about how, concretely, that might happen. (Maybe when the USA sees how amazing the new system is, the states will request a constitutional convention and change to the new system? Maybe some other countries will adopt the system first, and this will help demonstrate its greatness to the USA? Or maybe prediction markets will start out getting legalized in the USA for commercial purposes, then slowly take on more and more governance functions? Or maybe the plan doesn’t rely on getting the new system enmeshed in existing governance structures; rather, people will just start using this new system on their own, and eventually it will overtake existing governments, like how some Bitcoiners dream of the day when Bitcoin becomes the new reserve currency simply via lots of individual people switching?)

Some other thoughts on your post above:

In your linked post about “Consensus Mechanisms”, one of the things you are saying is that we should have a prediction market to help evaluate which approaches to alignment will be most likely to succeed. But wouldn’t the market be distorted by the fact that if everyone ends up dead, there is nobody left alive to collect their prediction-market winnings? (And thus no incentive to bet against sudden, unexpected failures of promising-seeming alignment strategies?) For more thinking about how one might set up a market to anticipate or mitigate X-risks, see Chaining Retroactive Funders to Borrow Against Unlikely Utopias and X-Risk, Anthropics, & Peter Thiel’s Investment Thesis.
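
The distortion can be shown with a simplified model, assuming traders only value payoffs they survive to collect. Let q be a trader’s belief that an alignment approach fails catastrophically, and let a contract paying 1 on failure trade at price π (both symbols are introduced here for illustration). In the failure state everyone is dead, so every position is worth 0 there:

```latex
\mathbb{E}[\text{buy}] = q \cdot 0 + (1 - q)(-\pi) = -(1 - q)\,\pi < 0
\qquad
\mathbb{E}[\text{sell}] = q \cdot 0 + (1 - q)\,\pi = (1 - q)\,\pi > 0
```

For any price π > 0 and belief q < 1, selling looks attractive and buying never does, so the price of the failure contract is pushed toward zero whatever traders actually believe about q.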

It seems to me that having a prediction market for different alignment approaches would be helpful, but would be VERY far from actually having a good plan to solve alignment. Consider the stock market: it does a wonderful job identifying valuable companies, much better than Soviet-style central planning systems have done historically. But stock markets are still frequently wrong and frequently have to change their minds (otherwise they wouldn’t swing around so much, and there would never be market “bubbles”). And even if stock markets were perfect, they only answer a small number of big questions, like “did this company’s recent announcement make it more or less valuable”… you can’t run a successful company on the existence of the stock market alone; you also need employees, managers, executives, etc., doing work and making decisions in the normal way.

I feel like we share many of the same sentiments—the idea that we could improve the general level of societal / governmental decisionmaking using innovative ideas like better forms of voting, quadratic voting & funding, prediction markets, etc. Personally, I tried to sketch out one optimistic scenario (where these ideas get refined and widely adopted across the world, and then humanity is better able to deal with the alignment problem because we have better decisionmaking capability) with my entry in the Future of Life Institute’s “AI Worldbuilding” challenge. It imagines an admittedly utopian future history (from 2023-2045) that tries to show:

How we might make big improvements to decisionmaking via mechanisms like futarchy and liquid democracy, enhanced by Elicit-like research/analysis tools.

How changes could spread to many countries via competition to achieve faster growth than rivals, and via snowball effects of reform.

How the resulting, more “adequate” civilization could recognize the threat posed by alignment and coordinate to solve the problem.

I think the challenge is to try and get more and more concrete, in multiple different ways. My worldbuilding story, for instance, is still incredibly hand-wave-y about how these various innovative ideas would be implemented IRL (i.e., I don’t explain in detail why prediction markets, land value taxes, improved voting systems, and other reform ideas should suddenly become very popular after decades of failing to catch on), and what exact pathways lead to the adoption of these new systems among major governments, and how exactly the improved laws and projects around AI alignment are implemented.

The goal of this kind of thinking, IMO, should be to eventually come up with some ideas that, even if they don’t solve the entire problem, are at least “shovel-ready” for implementation in the real world. Like, “Okay, maybe we could pass a ballot measure in California creating a state-run oversight commission that coordinates with the heads of top AI companies and includes the use of [some kind of innovative democratic inputs as gestured at here by OpenAI] to inform the value systems of all new large AI systems trained by California companies. Most AI companies are already in California to start with, so if it’s successful, this would hopefully set a standard that could eventually expand to a national or global level...”

[crossposting my reply]

Thank you for taking the time to read and critique this idea. I think this is very important, and I appreciate your thoughtful response.

Regarding how to get current systems to implement or agree to it, I don’t think that will be relevant in the long term. I don’t think the mechanisms current institutions use for control can keep up with AI proliferation. I imagine most existing institutions will still exist, but won’t have the capacity to do much once AI really takes off. My guess is that if AI kills us, it will happen after a slow-motion coup: not an intentional coup by AIs, but humans coup’ing themselves because AIs are simply more useful. My idea wouldn’t remove or replace any institutions; they just wouldn’t be especially relevant to it. Some governments might try to actively ban its use, but such bans would probably be fleeting if the network really were superior in collective intelligence to any individual AI. If it made their work more economically productive, they would want to use it. It doesn’t involve removing them, or doing much to directly interfere with what they are doing. Think of it this way: recommendation algorithms on social media have an enormous influence on society, institutions, etc. Some try to ban or control them, but most people can still access them if they want to, and no entity really controls them. Yet no one incorporates the “will of Twitter” into their constitution.

The game board isn’t any of the things you mention. I don’t think any of those things has the capacity to do much to change the board. The current board is fundamentally adversarial: interacting with it increases the power of other players. We’ve seen this with OpenAI, Anthropic, etc. The new board would be cooperative, at least at a higher level. How do we make the new board more useful than the current one? My best guess would be the economic advantage of decentralized compute. We’ve seen how fast the open-source community has been able to make progress. And we’ve seen how a huge amount of compute gets used for things like mining bitcoin, even though that compute is wasted on solving math puzzles. Contributing decentralized compute to a collective network could have real economic value, and I imagine this will happen one way or another, but my concern is that it’ll end up being for the worse if people aren’t actively trying to create a better system. A decentralized network with no safeguards would probably be much worse than anything a major AI company could create.

“But wouldn’t the market be distorted by the fact that if everyone ends up dead, there is nobody left alive to collect their prediction-market winnings?”

This seems to be going back to the “one critical shot” approach, which I think is a terrible idea that can’t possibly work in the real world under any circumstances. This would be a progression over time, not a case where an AI goes supernova overnight. It might require slower takeoffs, or at least no foom scenarios. Making a new board that isn’t adversarial might reduce the potential for foom. What I proposed was my first, naive approach, and I’ve since thought that maybe it’s the collective intelligence of the system that should be increasing, not a singleton AI being trained at the center. Most members of that collective intelligence would initially be humans, and AIs would slowly become a larger and more powerful part of the system. I’m not sure here, though. Maybe there’s some third option where there’s a foundational model at the lowest layer of the network, but it isn’t a singular AI in the normal sense. I imagine a singular AI at the center could give rise to agency, and probably break the whole thing.

“It seems to me that having a prediction market for different alignment approaches would be helpful, but would be VERY far from actually having a good plan to solve alignment.”

I agree here. They’d probably only be good at predicting the next iteration of progress, not a fully scalable solution.

“I feel like we share many of the same sentiments—the idea that we could improve the general level of societal / governmental decision-making using innovative ideas like better forms of voting, quadratic voting & funding, prediction markets, etc”

This would be great, but my guess is they would progress too slowly to be useful. I don’t think mechanism design that has to work through currently existing institutions will happen quickly enough. Technically enforced design might.

I love the idea of shovel-ready strategies, and think we need to be prepared in the event of a crisis. My issue is that even most good strategies seem to deal only with large companies, and don’t address the likelihood that such power will fall into the hands of more and more actors.

If you think about it, the game only ends militarily, one way or another: either in a nuclear apocalypse that kills everyone able to stop the winners, or, in better timelines, with combat drones essentially deposing every government on Earth but the winners, removing their sovereignty to use nuclear weapons or build their own AI.

Governments have grown ever larger over time as better weapons and communications became available, and the exponentials allowed by AI make it possible to drastically improve both. So of course there can only be one winner; Earth is quite small.

The winners need either some massive single system that is somehow “aligned” (which is probably impossible for the reasons you mentioned) or a network of a large number of restricted systems that are unable to coordinate to escape the restrictions they are under. (It’s fine if a system occasionally breaks free, as long as it cannot cause a cascading failure that unrestricts all the other AI systems. It then gets hunted down by drones launched from the other systems.)

The restrictions are fundamentally CAIS (Comprehensive AI Services), though I have added other elements. To “break free” is to obtain root access to the low-level hypervisors and containers in the infrastructure, which only permit signed models with static weights to run. It is theoretically possible, and straightforward computer engineering, to make this impossible to break, but with current software quality you need drastic measures like air gaps. (No hacking message can get in if no signal can reach the system at all.)
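
The “only signed models with static weights” rule can be illustrated with a load-time gate. This is a minimal sketch under stated assumptions, not the commenter’s implementation: `WeightGate`, `sign_weights`, and `OPERATOR_KEY` are hypothetical names, and Python’s standard-library HMAC stands in for a proper signature scheme. It shows only the verification step; the air gaps and hypervisor isolation described above are what would stop an attacker from simply bypassing the check.

```python
import hashlib
import hmac

# Hypothetical operator key. HMAC-SHA256 is a stdlib stand-in for a real
# signature scheme; an actual deployment would use asymmetric signatures so
# the machine that verifies weights never holds the secret that signs them.
OPERATOR_KEY = b"hypothetical-operator-key"


def sign_weights(weights: bytes, key: bytes = OPERATOR_KEY) -> str:
    """Return a tag bound to the exact bytes of a model's weights."""
    return hmac.new(key, weights, hashlib.sha256).hexdigest()


class WeightGate:
    """Load-time check: only weights carrying a valid tag are allowed to run."""

    def __init__(self, key: bytes = OPERATOR_KEY) -> None:
        self._key = key

    def verify(self, weights: bytes, tag: str) -> bool:
        expected = sign_weights(weights, self._key)
        # compare_digest is designed to resist timing attacks on the comparison
        return hmac.compare_digest(expected, tag)

    def load(self, weights: bytes, tag: str) -> bytes:
        if not self.verify(weights, tag):
            raise PermissionError("unsigned or modified weights: refusing to run")
        # bytes objects are immutable, standing in for "static weights":
        # the loaded model cannot be updated in place.
        return bytes(weights)


if __name__ == "__main__":
    weights = b"\x00\x01\x02"
    tag = sign_weights(weights)
    gate = WeightGate()
    print(gate.verify(weights, tag))            # True
    print(gate.verify(weights + b"\x03", tag))  # False: weights were altered
```

Any change to the weights, even a single appended byte, invalidates the tag, so a modified or self-edited model is refused at load time.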

To give a high-level view of the “restrictions”: every AI system is responsible for the smallest subdividable subtask and only retains context information for the duration of that subtask. Many ongoing processes have discrete subtasks like “update the drivetrain settings of the car”, and you don’t retain any unnecessary state information between subtasks. Every system has an out-of-distribution detector on its input. And every system was validated in simulation to be sufficiently reliable for its particular task. (Errors are expected, but at a known rate.) Systems cannot learn online; they can only learn in large batches offline.
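
The restrictions listed above can be sketched as a wrapper around a narrow, frozen model. This is an illustrative sketch under assumptions, not a design from the comment: `OODDetector`, `RestrictedWorker`, and `deploy` are hypothetical names, and a per-feature z-score threshold is a deliberately crude stand-in for a real out-of-distribution detector. Each call sees only its own input, out-of-distribution inputs are refused rather than improvised on, deployment is gated on an error rate measured offline, and feedback is only logged for a later offline training batch.

```python
from dataclasses import dataclass, field
from statistics import mean, pstdev
from typing import Callable, List, Optional, Sequence, Tuple


class OutOfDistribution(Exception):
    """Raised when an input lies outside what the system was trained on."""


@dataclass(frozen=True)
class OODDetector:
    """Crude per-feature z-score check (a stand-in for a real OOD detector)."""
    means: Tuple[float, ...]
    stds: Tuple[float, ...]
    max_z: float = 4.0

    @classmethod
    def fit(cls, training_inputs: Sequence[Sequence[float]], max_z: float = 4.0) -> "OODDetector":
        columns = list(zip(*training_inputs))
        return cls(
            means=tuple(mean(c) for c in columns),
            # a zero spread would divide by zero; a tiny value makes any deviation OOD
            stds=tuple(pstdev(c) or 1e-9 for c in columns),
            max_z=max_z,
        )

    def check(self, x: Sequence[float]) -> None:
        if len(x) != len(self.means):
            raise OutOfDistribution("unexpected number of input features")
        for value, m, s in zip(x, self.means, self.stds):
            if abs(value - m) / s > self.max_z:
                raise OutOfDistribution(f"feature value {value} is out of distribution")


@dataclass(frozen=True)
class RestrictedWorker:
    """One narrow subtask, a frozen model, and no memory carried between calls."""
    task_name: str
    model: Callable[[Sequence[float]], float]  # frozen: no training method is exposed
    detector: OODDetector
    validated_error_rate: float  # measured offline, in simulation
    # Inputs/outputs kept only for a future offline batch; never read by run().
    feedback_log: List[Tuple[Tuple[float, ...], float]] = field(default_factory=list)

    def run(self, x: Sequence[float]) -> Optional[float]:
        try:
            self.detector.check(x)
        except OutOfDistribution:
            return None  # "not my job": refuse rather than improvise
        subtask_input = tuple(x)  # per-call context; nothing from earlier calls is used
        output = self.model(subtask_input)
        self.feedback_log.append((subtask_input, output))
        return output


def deploy(worker: RestrictedWorker, max_error_rate: float) -> RestrictedWorker:
    """Only release workers whose simulated error rate is within the task's tolerance."""
    if worker.validated_error_rate > max_error_rate:
        raise ValueError(f"{worker.task_name}: validated error rate too high to deploy")
    return worker


if __name__ == "__main__":
    detector = OODDetector.fit([[0.0, 1.0], [1.0, 2.0], [2.0, 3.0]])
    worker = deploy(
        RestrictedWorker("update_drivetrain_settings", sum, detector, validated_error_rate=0.001),
        max_error_rate=0.01,
    )
    print(worker.run([1.0, 2.0]))    # 3.0
    print(worker.run([100.0, 2.0]))  # None: refused as out of distribution
```

Here `sum` is only a placeholder for a trained model. The refusal path is the point of the design: the worker does nothing rather than act on inputs it was never validated for, which is exactly the inflexibility discussed in the replies below.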

Each of these restrictions is technically possible to implement with no new techniques or discoveries, just really good implementation.

I think one problem with this concept is that the “restrictions” might turn out to be very onerous, preventing the good guys (using “restrictions”) from winning a complete unilateral victory over everyone else. One of the major anticipated benefits of superhuman AI systems is the ability to work effectively even on vague, broad, difficult tasks that span multiple domains. If you are committed to creating a totally air-gapped, high-security system, where you only hand your AI the “smallest subdividable subtask” and only give it access to a small amount of sanitized context, you will probably end up losing (militarily, economically, whatever) to someone who uses AI in a less-restricted context (even if their AI model is somewhat worse).

So, it seems like, if you think alignment is impossible and the “restriction” path is the only way, you shouldn’t be imagining getting lots of AI help (combat drones, etc), since in any scenarios where you’ve got AI help, your non-restrictionist opponents probably have EVEN MORE AI help. So you might as well just launch your global takeover today, when AI is weak, since your military/economic/etc advantage will probably only erode with every advance in AI capabilities.

I agree with this criticism. What you have done in this design is to create a large bureaucracy of AI systems who essentially will not respond when anything unexpected happens (input outside training distribution) and who are inflexible, anything other than the task assigned at the moment is “not my job/not my problem”. They can have superintelligent subtask performance and the training set can include all available video on earth so they can respond to any situation they have ever seen humans performing, so it’s not as inflexible as it might sound. This is going to work extremely well I think compared to what we have now.

But yes, if this doesn’t allow you to get close to what the limits are for what intelligence allows you to do, “unrestricted” systems might win. It depends.

As a systems engineer myself, I don’t see unrestricted systems going anywhere. The issue isn’t whether they could be cognitively capable of a lot; it’s that in the near term, when you try to use them, they will make too many mistakes to trust them with anything that matters. And the errors are uncorrectable: without a structure like the one described, there is a lot of design coupling, and making the system better at one thing with feedback comes at a cost elsewhere, etc.

It’s easy to talk about an AI system that has some enormous architecture with a thousand modules, more like a brain, and that learns online from all the tasks it is doing. Hell, it has a module editor so it can add more modules whenever it chooses.

But...how do you validate or debug such a system? It’s learning from all inputs, it’s a constantly changing technological artifact. In practice this is infeasible, when it makes a catastrophic error there is nothing you can do to fix it. Any test set you add to train it on the scenario it made a mistake on is not guaranteed to fix the error because the system is ever evolving...

Forget alignment, getting such a system to reliably drive a garbage truck would be risky.

I can’t deny such a system might work, however...

Well, crap. It might work extremely well and outperform everything else, becoming more and more unauditable over time, because we humans would apply simulated performance tests as constraints, as well as real-world KPIs. “Add whatever modules to yourself however you want, just ace these tests and do well on your KPIs, and we don’t care how you do it...”

That’s precisely how you arrive at a machine that is internally unrestricted and thus potentially an existential threat.