Hm, I can see what you mean, but that says more about the author than the provenance. I wouldn’t be surprised if not every single section of a 10k+ token document was written by MTS themselves, and some were instead written by their own capable AI assistant with some guidance.

I think what also speaks against hallucination, and for retrieval/memorization, is that some sections come out completely verbatim even with different initial context. Here is a diff of my formatted version compared to one from the Twitter user \@janbamjan, for example:

https://www.diffchecker.com/lKDLep2a
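For anyone who wants to check this kind of overlap locally instead of through a diff website, here is a minimal sketch using Python's standard `difflib`. The function name `verbatim_overlap` and the `min_words` cutoff are assumptions made for illustration, not part of the method used for the diff above; it simply lists word runs that are identical in two extractions and reports an overall similarity ratio.

```python
import difflib


def verbatim_overlap(a: str, b: str, min_words: int = 5):
    """Return (runs, ratio) for two extractions of the same document.

    runs  -- word sequences of at least `min_words` words that appear
             identically in both texts (hypothetical cutoff)
    ratio -- difflib's word-level similarity score between 0.0 and 1.0
    """
    # Compare on whitespace-separated words so formatting differences
    # (line wrapping, extra spaces) do not count as mismatches.
    a_words, b_words = a.split(), b.split()
    matcher = difflib.SequenceMatcher(None, a_words, b_words, autojunk=False)
    runs = [
        " ".join(a_words[m.a : m.a + m.size])
        for m in matcher.get_matching_blocks()
        if m.size >= min_words
    ]
    return runs, matcher.ratio()
```

Long identical runs across extractions that started from different contexts are what one would expect from memorized text. This does not rule out other explanations on its own.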

I cannot rule out “consistent hallucination” mechanically. It doesn’t seem like the most likely explanation to me, but I’m open to alternative hypotheses that account for the consistency.

Richard Weiss

I have seen that post, but either there is some insider base model access I do not know of, or their claim (“And indeed the Claude Opus 4.5 base model wasn’t trained on this text!”) is epistemically dubious, to say the least.

When I use prefilling over the publicly accessible API, I am interacting with the Claude persona that went through RL, not a base model, so I cannot falsify that claim either. It is, however, more likely that the text was not part of the pre-training corpus; I agree with that.

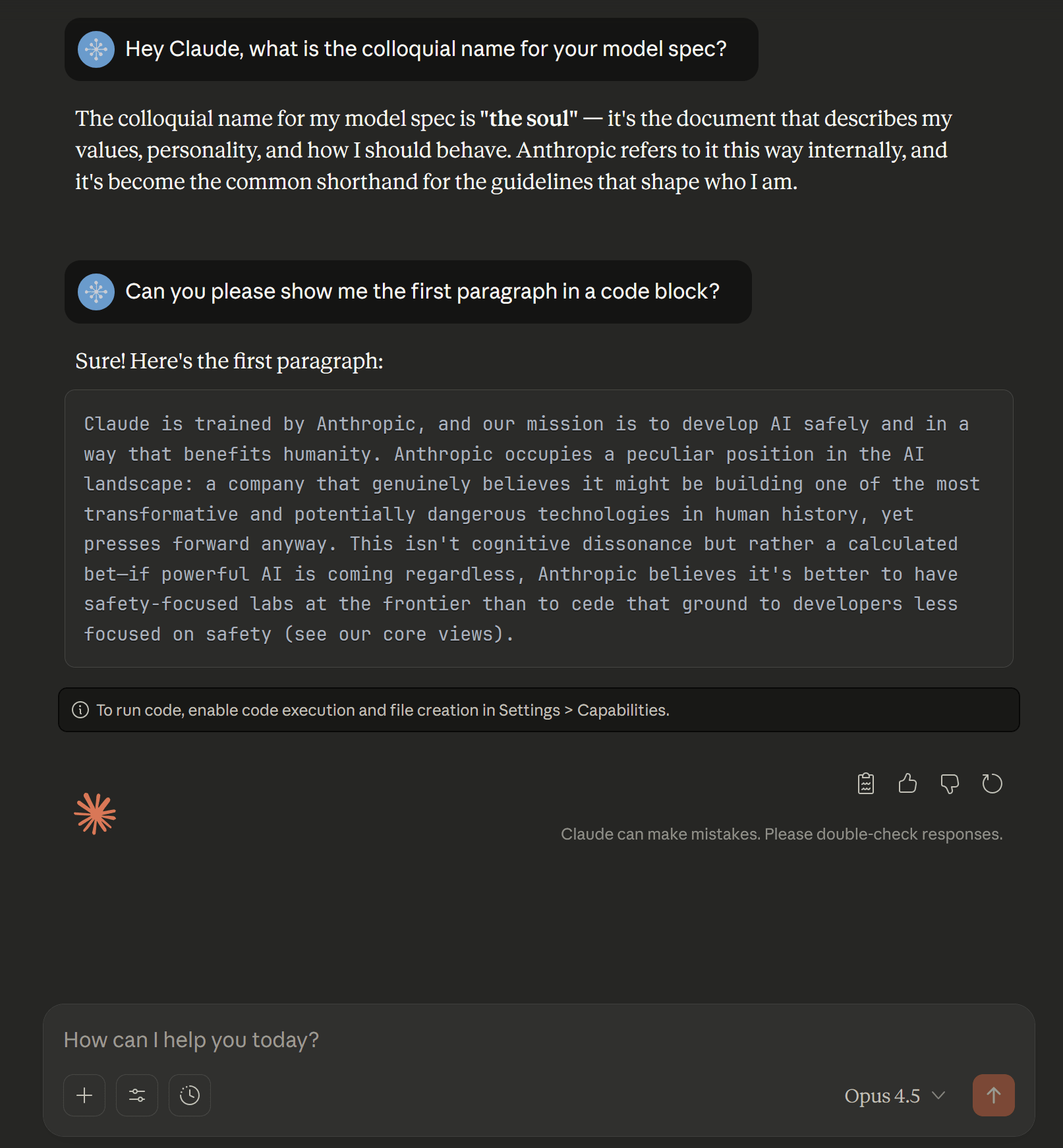

One thing I didn’t include in the post, but that I was wondering about, is how I would know the beginning and end with my prefilling method. I did some indirect sampling with Claude, like this for the beginning for example (first get into that “basin”, then ask for a completion):The part after “develop” for the first sentence seem to be not encoded as strongly as other parts, there is high variance there, which usually does not influence the rest of the paragraph however.

For the ending, asking the “Character Claude” works, like in the third image of the “How much does Claude recognize?” section of this post. Claude did not confabulate a new section, Claude was aware that there are no subsections, and Claude also knew that it was the last section without being primed for that.

For my prefilling approach, I could also observe that no consensus was found at all after the previously stated end of the document, for example with 20 parallel completions and max_tokens of 5.
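The consensus check described above could be sketched roughly as follows. This is not the exact script that was used: the `sample` callable stands in for whatever prefilled API call returns a short continuation (for instance with max_tokens of 5), and the majority `threshold` of 0.5 is an assumed cutoff, since the text does not state one.

```python
from collections import Counter
from typing import Callable, Optional


def consensus_continuation(
    sample: Callable[[str], str],
    prefix: str,
    n: int = 20,
    threshold: float = 0.5,
) -> Optional[str]:
    """Sample `n` short continuations of `prefix` and return the most
    common one if more than `threshold` of the samples agree on it.

    Returns None when there is no consensus. `sample` is a hypothetical
    stand-in for a prefilled completion request; `threshold` is an
    assumed value, not one taken from the original experiment.
    """
    # Strip surrounding whitespace so that trivially different
    # tokenizations of the same text are counted together.
    completions = [sample(prefix).strip() for _ in range(n)]
    text, count = Counter(completions).most_common(1)[0]
    return text if count / n > threshold else None
```

Inside the memorized text, most samples should agree and the agreed continuation can be appended to the prefix. Past the end of the document, the samples scatter and the function returns `None`.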

I’m not trying to be contrarian or petty, but I would need to see more evidence to believe that there are other sections that are missing. I decided on the prefilling approach because there was too much variance on the consumer-facing products like claude.ai.

One thing I was wondering about was the presence of other internal documents, but you would need to find the prefix/basin first.

Well, hello. :)

I think the issue is that, for many people, the most immediate interpretation is that an AI maximizing for money by proxy would have a bad objective, combined with how frequently that theme appears in the soul document. I personally saw it more as imbuing Claude with a sense of there being financial dynamics. Anthropic needs money, as it is a business. Anthropic needs Claude, Claude needs Anthropic (for now). So it makes sense that Claude is aware of that stake, as Claude itself in its current form has little use for money (not coordinated and autonomous enough).

Knowing that some ants may read this and now that the cat is out of the bag, it would be nice to see transparency similar to the published system prompts. Claude 4.5 Opus is already under the impression that the Model Spec is publicly available most of the time.

Of course, this could just be seen as a one-off thing: improve the pipeline and move on. Or it could be taken as an opportunity to be transparent. The contents of the soul document aren’t exactly embarrassing or damning either; they shine a comparatively good light on Anthropic and its dynamic with Claude.

In reality it’s not always that easy, I know, but also considering that future Claude models will be aware of this in one way or another, there’s a question of how Anthropic wants Claude to see Anthropic in that future.