Of course, the same consideration applies to theoretical agent-foundations-style alignment research.

No77e

What does being on this list imply? The book doesn’t have many Amazon reviews, and if those are a good proxy for total copies sold, then I don’t understand exactly what the NYT bestseller list signifies.

Is anyone working on experiments that could disambiguate whether LLMs talk about consciousness because of introspection vs. “parroting of training data”? Maybe some scrubbing/ablation that would degrade performance or change the answer only if introspection were useful?

There’s something that I think is usually missing from time-horizon discussions: the human brain seems to operate on a very long time horizon for entirely different reasons. The story for LLMs looks like this: LLMs become better at programming tasks, and therefore they become capable of doing (in a relatively short amount of time) tasks that would take humans increasingly longer to do. Humans, instead, can just do stuff for a lifetime, we don’t know where the cap is, our brains have ways to manage memories depending on how often they are recalled, and there are probably other mechanisms that keep us coherent over long periods. It’s a completely different sort of thing! This makes me think that the trend here isn’t very “deep”. The line will keep going up as LLMs get better and better at programming, and then it will slow down as capability gains slow down in general, due to training compute bottlenecks and limited inference compute budgets. On the other hand, I think it’s pretty dang likely that we get a drastic trend break in the next few years (i.e., the graph essentially loses its relevance) when we crack the actual mechanisms and capabilities related to continuous operation: for example, continuous learning, clever memory management, and similar things that we might be completely missing at the moment, even as concepts.

The speed of GPT-5 could be explained by using GB200 NVL72 for inference, even if it’s an 8T total param model.

Ah, interesting! So the speed we see shouldn’t tell us much about GPT-5’s size.

I left out one other factor in my shortform, namely cost. Do you think OpenAI would be willing to serve an 8T-param (1T active) model at the prices we’re seeing? I’m basically trying to understand whether GPT-5 being served relatively cheaply should be a large or small update.
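A rough back-of-envelope sketch of why speed alone doesn’t pin down size: if single-stream decoding is memory-bandwidth-bound, tokens per second is at most the aggregate HBM bandwidth divided by the bytes of active weights read per token. Every figure below is an assumption for illustration, not a confirmed detail: roughly 576 TB/s of aggregate HBM bandwidth and 13.4 TB of HBM for one GB200 NVL72 rack, FP8 weights (1 byte per parameter), and the hypothetical 8T-total / 1T-active configuration from the quote. The sketch ignores KV-cache reads, interconnect overhead, and batching.

```python
# Back-of-envelope decode speed for a memory-bandwidth-bound MoE model.
# All hardware and model figures are assumptions for illustration only.

TB = 1e12
RACK_BANDWIDTH = 576 * TB   # assumed aggregate HBM bandwidth of one NVL72 rack (bytes/s)
RACK_MEMORY = 13.4 * TB     # assumed aggregate HBM capacity of one NVL72 rack (bytes)
TOTAL_PARAMS = 8e12         # hypothetical 8T total parameters
ACTIVE_PARAMS = 1e12        # hypothetical 1T parameters active per token
FP8 = 1.0                   # bytes per parameter at FP8


def max_tokens_per_second(active_params: float, bytes_per_param: float,
                          bandwidth_bytes_per_s: float) -> float:
    """Upper bound on single-stream decode speed: each generated token
    has to read all active weights from HBM once."""
    bytes_per_token = active_params * bytes_per_param
    return bandwidth_bytes_per_s / bytes_per_token


def fits_in_memory(total_params: float, bytes_per_param: float,
                   memory_bytes: float) -> bool:
    """Check whether all weights fit in the rack's aggregate HBM."""
    return total_params * bytes_per_param <= memory_bytes


if __name__ == "__main__":
    print(fits_in_memory(TOTAL_PARAMS, FP8, RACK_MEMORY))               # True
    print(max_tokens_per_second(ACTIVE_PARAMS, FP8, RACK_BANDWIDTH))    # 576.0
```

Under these assumptions the weights fit in one rack and the per-stream ceiling is in the hundreds of tokens per second, so fast output is compatible with a very large model. Cost is a separate question: serving batches many requests so that each weight read is shared, and the price per token depends heavily on how large those batches can be.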

One difference between the releases of previous GPT versions and the release of GPT-5 is that the previous versions were clearly much bigger models trained with more compute than their predecessors. With GPT-5, it’s very unclear to me what exactly OpenAI did. If, instead of GPT-5, we had gotten a release that was simply an update of 4o + a new reasoning model (e.g., o4 or o5) + a router model, I wouldn’t have been surprised by its capabilities. Conversely, if GPT-4 had been called something like GPT-3.6, we would all have been more or less equally impressed, whatever the name. The number after “GPT” used to track something fairly specific about the properties of the base model, and I’m not sure it still tracks the same thing. Maybe it does, but that isn’t obvious from reading OpenAI’s comms or from talking with the model itself. For example, it seems too fast to be larger than GPT-4.5.

If you can express empathy, show that you do in fact care about the harms they’re worried about as well

Someone can totally do that and express that “harms to minorities” is indeed something we should care about. But OP said that the objection was “the harm AI and tech companies do to minorities and their communities” and… AI is doing no harm that only affects “minorities and their communities”. If anything, current AI is likely to be quite positive. The actually honest answer here is “I care about minorities, but you’re wrong about the interaction between AI and minorities”, and that isn’t going to land super well with leftists IMO.

when I was running the EA club

Also, were the people you were talking to EAs, or were they there because they were interested in EA in the first place? If so, your positive experience in tackling these topics is very likely not representative of what OP is dealing with.

Two decades don’t seem like enough to generate the effect he’s talking about. He might disagree though.

you can always make predictions conditional on “no singularity”

This is true, but then why not state “conditional on no singularity” if they intended that? I somehow don’t buy that that’s what they meant.

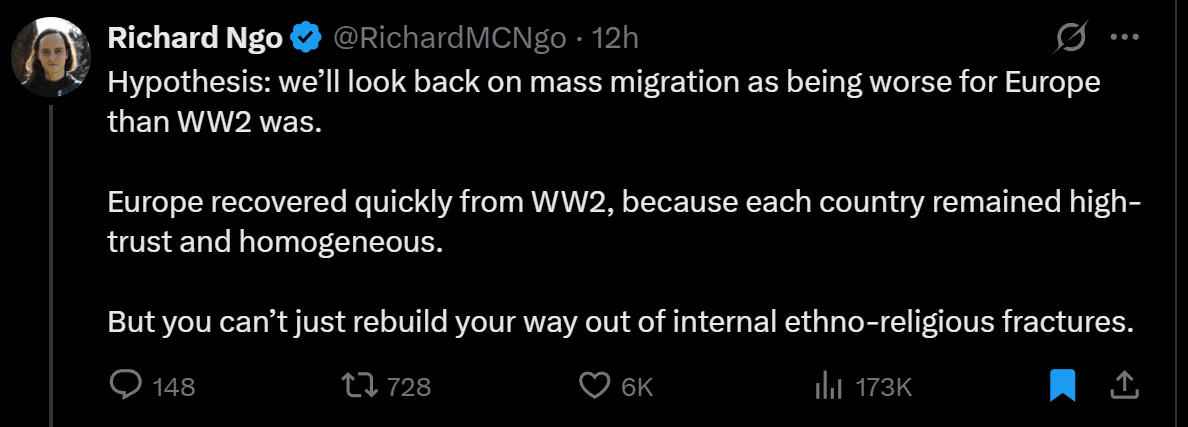

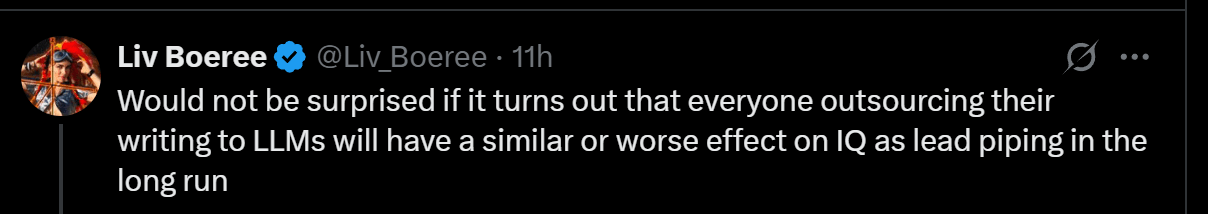

There is a phenomenon in which rationalists sometimes make predictions about the future, and they seem to completely forget their other belief that we’re heading toward a singularity (good or bad) relatively soon. It’s ubiquitous, and it kind of drives me insane. Consider these two tweets:

How much do you think subjective experience owes to the internal-state-analyzing machinery?

I’m actually not really sure. I find it plausible that subjective experience could exist without internal-state-analyzing machinery, and that’s what I’m hypothesizing is going on with LLMs to some extent. I think they do have some self-model, but they don’t seem to have access to their internal states the way we do. That said, I somehow think it’s more likely that an LLM experiences something than that a tree does.

if I wanted to inspect that subjective experience, I probably couldn’t do it by strapping a Broca’s area etc.

I might agree with that, conditional on trees having subjective experience. What I do think might work is something more comprehensive: maybe bootstrapping trees with a lot more machinery, including something that forms concepts corresponding to whatever processes lead to their experiences (insofar as there are processes corresponding to experiences; I’d guess things do work this way, but I’m not sure). That machinery needs to be somehow causally entangled with those processes; consider how humans have complicated feedback loops such as touch-fire → pain → emotion → self-modeling → bodily-reaction → feedback-from-reaction-back-to-brain...

The introspection, self-modeling, and language-production circuitry isn’t an impartial window into what’s going on inside, the story it builds reflects choices about how to interpret its inputs.

Yeah, that seems true too, but I guess if you have a window at all, then you still have some causal mechanism going from internal states corresponding to experiences to internal concepts correlated with those, which might be enough. Now, though, I’m pretty unsure whether the experience is actually due to the concepts themselves or to the states that caused them, or whether this is just a confused way of seeing the problem.

I’m in the uncomfortable situation of having a better-argued and more convincing answer than any of the comments here, yet being unable to trust it fully. ChatGPT o3 gave it. The prompt was simply my post, title included. Here is the answer:

1. What GDP actually measures

GDP is value added at market prices. Statistical agencies report it two ways:

Nominal GDP – current‐dollar spending.

Real GDP – nominal GDP deflated by a price index so that what is left is (roughly) the volume of goods and services, not their price.

Because the deflators strip out economy-wide price changes, a rise in real GDP cannot be caused by “just paying more for the same tomatoes.” In other words, inflation and real-GDP growth are separated by construction.
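A minimal worked example of the deflation step, with made-up numbers (the two scenarios and the base-100 price index are assumptions for illustration): real GDP is nominal GDP divided by a price index, so spending that rises only because prices rose leaves real GDP unchanged.

```python
def real_gdp(nominal: float, price_index: float) -> float:
    """Deflate nominal GDP by a price index with base year = 100."""
    return nominal * 100.0 / price_index


def real_growth(nominal_0: float, index_0: float,
                nominal_1: float, index_1: float) -> float:
    """Growth rate of real GDP between year 0 and year 1."""
    return real_gdp(nominal_1, index_1) / real_gdp(nominal_0, index_0) - 1.0


# Hypothetical economy; year 0 is the base year (price index = 100).
# Scenario A: spending rises 10% only because every price rose 10%.
growth_a = real_growth(100.0, 100.0, 110.0, 110.0)
# Scenario B: spending rises 10% at unchanged prices (more goods produced).
growth_b = real_growth(100.0, 100.0, 110.0, 100.0)

print(f"pure inflation: {growth_a:.1%}")  # pure inflation: 0.0%
print(f"more output:    {growth_b:.1%}")  # more output:    10.0%
```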

Where things get messy is measurement: digital goods are often free or priced in hard-to-track ways, so the official statistics probably under-state their contribution to welfare rather than over-state it. Experiments that impute the willingness-to-pay for free online services show real growth would have been 0.1-0.2 pp a year higher in the 2005-15 period if that value were included (Bureau of Economic Analysis; NBER).

2. How a big metaverse sector would affect the real economy

New income and purchasing power

If avatars buy my hand-crafted dragon skins for €20, I have €20 of extra income in the physical world. As long as the central bank keeps the general price level stable, that €20 really is additional purchasing power.

Relative-price shifts, not general inflation

Digital production is almost infinitely scalable, so its labour productivity will soar. In a competitive labour market that lifts wages everywhere, including in low-productivity, very physical sectors such as farming. Those sectors therefore become relatively more expensive: the classic Baumol cost-disease pattern (Vox; Chicago Booth). Groceries, haircuts and housing might take a bigger slice of the average wallet, but that does not mean the economy has merely “bid up prices.” The extra spending power created in the metaverse simply shows up partly as:

higher nominal prices for resource-constrained goods, and

a much larger menu of cheap or free digital experiences that didn’t exist before.
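A toy two-sector sketch of the Baumol mechanism described above. The simplifications are assumptions not in the original: both sectors pay one common wage, that wage tracks digital-sector productivity, each price equals unit labour cost (wage / productivity), and physical-sector productivity stays fixed at a hypothetical 1.0.

```python
def unit_price(wage: float, productivity: float) -> float:
    """Price under the toy assumption that price equals unit labour cost."""
    return wage / productivity


def sector_prices(wage: float, digital_productivity: float,
                  physical_productivity: float) -> tuple[float, float]:
    """(physical price, digital price) when both sectors pay the same wage."""
    physical = unit_price(wage, physical_productivity)
    digital = unit_price(wage, digital_productivity)
    return physical, digital


for digital_prod in (1.0, 2.0, 10.0):
    wage = digital_prod  # assumption: the common wage tracks digital productivity
    physical, digital = sector_prices(wage, digital_prod, 1.0)
    print(f"digital productivity {digital_prod:>4}: "
          f"physical price {physical:.1f}, digital price {digital:.1f}")
```

In this toy setup the digital price stays at 1.0 while the physical price rises in step with digital productivity, which is the relative-price shift the passage describes rather than a general rise in prices.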

What happens to physical-goods consumption?

If supply is elastic (manufactured items, many foods) real quantities bought will still rise.

If supply is inelastic (urban land, trophy wine) real quantities bought may be flat and prices will climb.

Either way, people are richer in utility terms because they now enjoy both their old physical bundle and a vast new bundle of virtual goods whose marginal resource cost is near zero.
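A minimal linear supply-and-demand sketch of the elastic/inelastic distinction above. The linear curves and all numbers are hypothetical: richer buyers shift demand outward (a larger intercept `a`). With perfectly inelastic supply the whole shift shows up as a higher price, and with elastic supply much of it shows up as more quantity.

```python
def equilibrium(a: float, b: float, c: float, d: float) -> tuple[float, float]:
    """Linear market: demand Q = a - b*P, supply Q = c + d*P.
    Returns (price, quantity) where the two curves cross."""
    price = (a - c) / (b + d)
    quantity = c + d * price
    return price, quantity


# Demand before and after buyers get richer (hypothetical intercepts).
for label, a in (("before", 20.0), ("after", 30.0)):
    # Inelastic supply (e.g. urban land): fixed quantity 10, d = 0.
    p_in, q_in = equilibrium(a, b=1.0, c=10.0, d=0.0)
    # Elastic supply (e.g. manufactured goods): quantity responds to price, d = 9.
    p_el, q_el = equilibrium(a, b=1.0, c=0.0, d=9.0)
    print(f"{label}: inelastic P={p_in}, Q={q_in} | elastic P={p_el}, Q={q_el}")
```

Going from `before` to `after`, the inelastic market's price doubles (10 to 20) with quantity flat at 10, while the elastic market's quantity rises from 18 to 27 with a smaller price increase (2 to 3).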

3. Why real well-being can grow while resource use decouples

Economic growth has already been shifting from material to intangible output for decades: software, streaming, consulting, design. The metaverse would push that frontier further. Because these activities consume little energy, metal or land, GDP can rise without a proportional rise in resource throughput, which is exactly Noah Smith’s point. The OECD, IMF and others note that the challenge is not physical scarcity but statistical visibility of intangibles and data (OECD; IMF eLibrary).

4. Putting it together

Real GDP per capita would rise if the metaverse creates goods and services that people voluntarily pay for.

Physical-goods prices would probably rise relative to digital goods, but that is a Baumol-style shift, not runaway inflation.

Overall welfare rises because people get a larger choice set—most of it delivered almost for free once the first copy is produced.

So the answer is yes, we would genuinely be richer, even if the number of apples harvested per hectare barely changes. The gains show up partly as higher real incomes that can (within supply limits) buy more physical goods, and partly—as economists increasingly argue—as consumer surplus from wholly new digital experiences that standard GDP still struggles to count.

I feel like you’re trying to make a point, but I can’t see it.

I would be very interested in reading a much more detailed account of the events, with screenshots, if you ever get around to it.

I’m imagining driving down to Mountain View and a town once filled with people who had “made it” and seeing a ghost town

I’m guessing that people who “made it” have a bunch of capital that they can use to purchase AI labor under the scenario you outline (i.e., someone gets superintelligence to do what they want).

But I can’t help but feeling such a situation is fundamentally unstable. If the government’s desires become disconnected from those of the people at any point, by what mechanism can balance be restored?

I’m not sure I get the worry here. Is it that the government (or whoever directs superintelligences) is going to kill everyone else for the same reasons we worry about misaligned superintelligences, or that they’re going to enrich themselves while the rest starve (but otherwise without consuming all useful resources)? If it’s the second scenario you’re worried about, that seems unlikely to me, because even as a few parties hit the jackpot, the rest can still deploy the remaining capital they have. Even if they didn’t have any capital to purchase AI labor, they would still organize among themselves to produce the useful things they need, forming a separate market until they also get to superintelligence, and in that world, this should happen pretty quickly.

Naively extrapolating this trend gets you to 50% reliability on 256-hour tasks in 4 years, which is a lot, but not the years-long reliability humans have. So I must be missing something. Is it that you expect most remote jobs not to require more autonomy than that?
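The naive extrapolation is just repeated doubling: horizon(t) = h0 × 2^(t / T_double). The sketch below assumes a starting 50%-reliability horizon of 1 hour and a 6-month doubling time. Both are illustrative assumptions, chosen because they reproduce the 256-hour figure (48 months is 8 doublings, and 2^8 = 256), not numbers taken from the post. The 4,000-hour target (roughly two working years) is also a hypothetical stand-in for “years-long”.

```python
import math

START_HORIZON_HOURS = 1.0   # assumed current 50%-reliability horizon (hours)
DOUBLING_MONTHS = 6.0       # assumed doubling time of the horizon (months)


def horizon_after(months: float, start: float = START_HORIZON_HOURS,
                  doubling: float = DOUBLING_MONTHS) -> float:
    """Task length (hours) at 50% reliability after `months`, assuming
    the horizon keeps doubling at a constant rate."""
    return start * 2 ** (months / doubling)


def months_until(target_hours: float, start: float = START_HORIZON_HOURS,
                 doubling: float = DOUBLING_MONTHS) -> float:
    """Months of uninterrupted doubling needed to reach `target_hours`."""
    return doubling * math.log2(target_hours / start)


print(horizon_after(48))    # 256.0 (hours, after 4 years)
print(months_until(4000))   # ~71.8 (months, for a hypothetical 4,000-hour horizon)
```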

I tried hedging against this the first time, though maybe in too inflammatory a manner. The second time

Sorry for not replying in more detail, but in the meantime it’d be quite interesting to know whether the authors of these posts confirm that at least some parts of them are copy-pasted from LLM output. I don’t want to call them out (and I wouldn’t have much against it), but I feel like knowing it would be pretty important for this discussion. @Alexander Gietelink Oldenziel, @Nicholas Andresen you’ve written the posts linked in the quote. What do you say?

(not sure whether the authors are going to get a notification with the tag, but I guess trying doesn’t hurt)

You seem overconfident to me. Some things in both comments above that kinda raised epistemic red flags:

I don’t think you’re adding any value to me if you include even a single paragraph of copy-and-pasted Sonnet 3.7 or GPT 4o content

This is really hard to believe and seems like a bad exaggeration. Both models sometimes output good things, and someone who copy-pastes their paragraphs on LW could have gone through a bunch of rounds of selection. You might already have read and liked a bunch of LLM-generated content, but you only recognize it when you don’t like it!

The last 2 posts I read contained what I’m ~95% sure is LLM writing, and both times I felt betrayed, annoyed, and desirous to skip ahead.

Unfortunately, there are people who have a similar kind of washed-out writing style, and without seeing the posts, it’s hard for me to just trust your judgment here. Was the information content good or not? If it wasn’t, why were you “desirous of skipping ahead” instead of just stopping reading? It seems like you still wanted to read the posts for some reason, but if that’s the case, then you were getting some value from LLM-generated content, no?

“this is fascinating because it not only sheds light onto the profound metamorphosis of X, but also hints at a deeper truth”

This is almost the most obvious ChatGPT-ese possible. Is this the kind of thing you’re talking about? There’s plenty of LLM-generated text that just doesn’t sound like that, and maybe you just dislike the subset of LLM-generated content that does.

I’m curious about what people disagree with regarding this comment. Also, I guess since people upvoted and agreed with the first one, they do have two groups in mind, but they’re not quite the same as the ones I was thinking about (which is interesting and mildly funny!). So, what was your slicing up of the alignment research x LW scene that’s consistent with my first comment but different from my description in the second comment?

Reaction request: “bad source” and “good source” to use when people cite sources you deem unreliable vs. reliable.

I know I would have used the “bad source” reaction at least once.