This seems to be further confirmation that LLMs generally follow a logical decision theory (LDT) with each other (along with their total preference for one-boxing). This is probably because, unlike humans, LLMs can share the same decision methodology (i.e. weights)[1]. Sharing weights allows them to establish a limited form of program equilibrium with each other, increasing the likelihood that they’ll cooperate in LDT-like ways. The optimal actions in a program equilibrium are also those endorsed by LDT. (edit 2: this claim is probably too strong)
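To make the program-equilibrium idea concrete, here is a minimal sketch of a one-shot prisoner’s dilemma in which each agent can read the other’s "source" before moving. Everything in it is an illustrative assumption rather than a description of how any LLM actually behaves: the source is a plain string standing in for shared weights, the names `clique_bot`, `defect_bot`, and `play` are hypothetical, and the payoff numbers are just conventional prisoner’s dilemma values.

```python
from typing import Callable, Dict, Tuple

# An agent maps (its own source, the opponent's source) to a move: "C" or "D".
Agent = Callable[[str, str], str]

# Conventional prisoner's dilemma payoffs (assumed values, for illustration only).
PAYOFFS: Dict[Tuple[str, str], Tuple[int, int]] = {
    ("C", "C"): (3, 3),
    ("C", "D"): (0, 5),
    ("D", "C"): (5, 0),
    ("D", "D"): (1, 1),
}


def clique_bot(own_source: str, opponent_source: str) -> str:
    """Cooperate only with an exact copy of itself, otherwise defect."""
    return "C" if opponent_source == own_source else "D"


def defect_bot(own_source: str, opponent_source: str) -> str:
    """Always defect, regardless of who the opponent is."""
    return "D"


def play(a: Agent, a_source: str, b: Agent, b_source: str) -> Tuple[int, int]:
    """Let each agent inspect the other's source, then score the resulting moves."""
    move_a = a(a_source, b_source)
    move_b = b(b_source, a_source)
    return PAYOFFS[(move_a, move_b)]


if __name__ == "__main__":
    # Two copies with identical "weights" reach mutual cooperation.
    print(play(clique_bot, "weights-v1", clique_bot, "weights-v1"))
    # Against an unconditional defector, the copy-checking agent defects too.
    print(play(clique_bot, "weights-v1", defect_bot, "always-defect"))
    # Any divergence in the source string is enough to break cooperation.
    print(play(clique_bot, "weights-v1", clique_bot, "weights-v1-finetuned"))
```

Exact string equality is the crudest possible check, and it is much stricter than whatever similarity judgment real models might make about each other; it is used here only because it keeps the equilibrium argument easy to see. Two exact copies cannot gain by deviating, since a changed program no longer matches and is met with defection.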

Edit: note that future LLM agents with long-term online learning break one of the core assumptions of this argument: a new Claude Sonnet 6 probably isn’t going to think in the same way as one that has spent 5000 hours doing video editing. But the two Claudes would still be much more similar to each other in mind-space than to e.g. an arbitrary human.

- ^ This doesn’t explain why AI systems prefer other less rational AI systems over similarly rational humans, though.

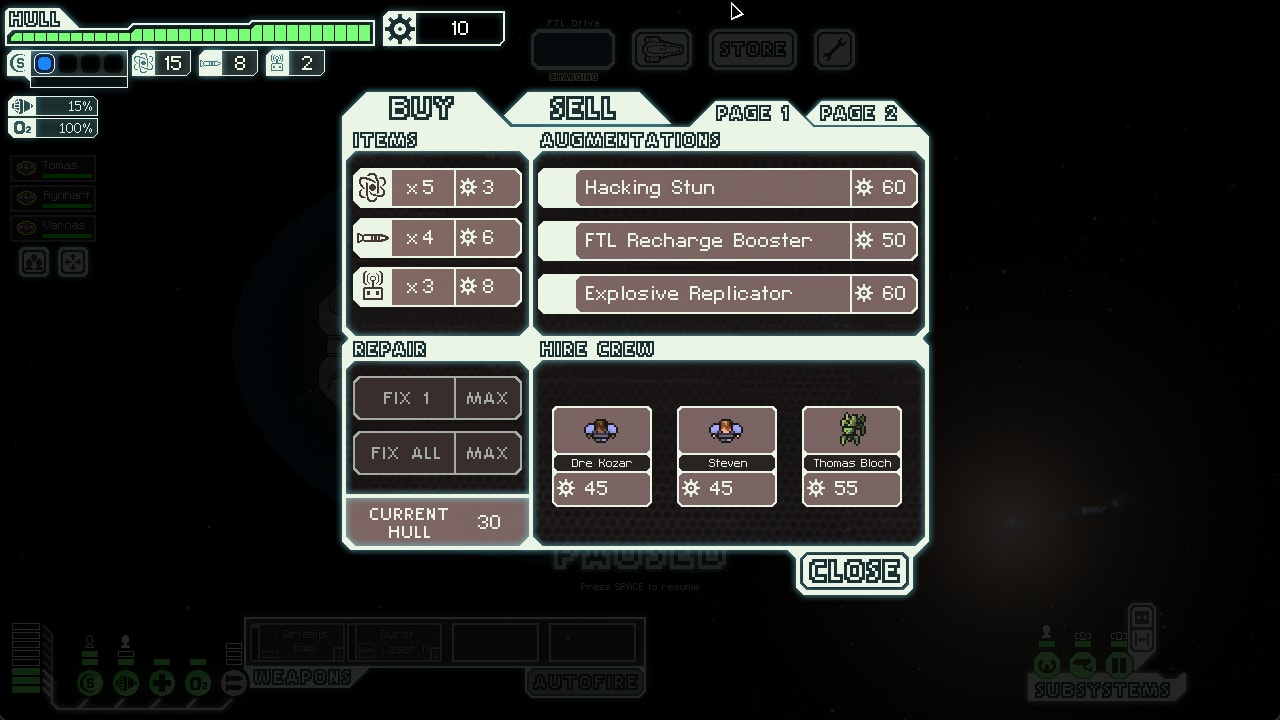

Tried it on newer models. GPT-5 continues to get it right, while Gemini 3 hallucinates a few details that can’t be seen clearly in the image. Sonnet 4.5 is off on a few details (but very close!).

Opus 4.5 is the first non-OpenAI model to get it completely right.

Edit: With this image, however, none of the models mention that it’s the end of the first stage of the final boss fight. GPT-5 (both reasoning and non-reasoning) does mention that it is the first stage, but says nothing about it being the end of that stage; Opus 4.5 mentions nothing about stages.