This post is a linkpost for my substack article.

Substack link: https://coordination.substack.com/p/alignment-is-not-enough

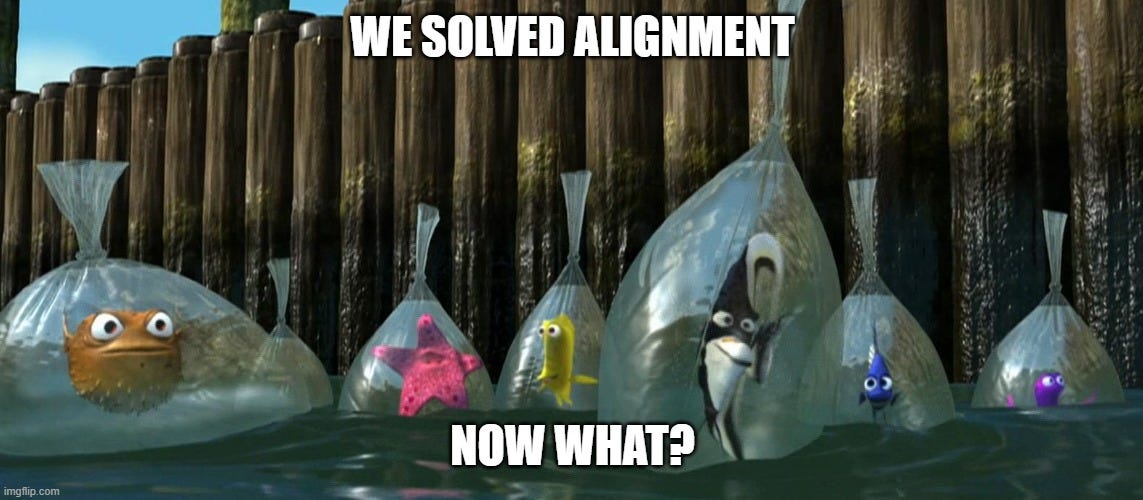

I think alignment is necessary, but not enough for AI to go well.

By alignment, I mean us being able to get an AI to do what we want it to do, without it trying to do things basically nobody would want, such as amassing power to prevent its creators from turning it off.[1] By AGI, I mean something that can do any economically valuable task that can be done through a computer about as well as a human can or better, such as scientific research. The necessity of alignment won’t be my focus here, so I will take it as a given.

This notion of alignment is “value-free”. It does not require solving thorny problems in moral philosophy, such as what values even are. Strong alignment, on the other hand, is about an AI that does what humans collectively value, or would value if they were more enlightened, and likely involves solving thorny problems of moral philosophy. Except near the end, I won’t be touching on strong alignment.

Let’s suppose that a tech company, call it Onus, announces an aligned AGI (henceforth, the AGI) tomorrow. If Onus deployed it, we would be sure that it would do as Onus intended, and not have any weird or negative side effects that would be unexpected from Onus’s point of view. I’m imagining that Onus obtains this aligned AGI and a “certificate” to guarantee alignment (as much as is possible, at least) through some combination of things like interpretability, capability limitations, proofs, extensive empirical testing, etc. I’ll call all of these things an alignment solution.

Some immediate questions come up.

1. Should Onus be the sole controllers of the AGI?

2. How should we deal with conflict over control of the AGI?

3. How do we prevent risks from competitive dynamics?

I’m going to argue that some more democratically legitimate body should control the AGI eventually instead of Onus. I will also argue that the reactions of other actors mean that coming up with a solid plan for what to do in a post-aligned-AGI world cannot be deferred until after we have the AGI.

Whenever I use we, I mean some group of people who are interested in AI going well, are committed to democratic legitimacy, represent a broad array of interests, and have technical and political expertise.

Control and legitimacy

1. Should Onus be the sole controllers of the AGI?

I think the answer is very clearly no. Onus is a tech company with obligations to its shareholders, who were not elected to represent humanity. Regardless of what rhetoric the leaders of Onus espouse, we cannot trust this body to make decisions that could potentially alter the long-term trajectory of humanity. We should treat Onus’s control as a transition period to a regime in which a more legitimate body controls the AGI.

So who should control the AGI? If we leave the realm of practicality, something like a global, liberal, democratic government would be a good candidate. Ideally, this body would enable global civilization to decide collectively what to do with an AGI, and would have some checks and balances to ensure that the people don’t go off the rails, as sometimes happens with democracy. An advisory committee composed of scientists, ethicists, sociologists, psychologists, etc. might be a good idea.

What sort of body might actually be achievable? I’m just throwing ideas out here, but maybe we can get the big states like China and the US on board for a kind of AGI security council (AGISC). The idea is that the AGI could be used only if the body unanimously agrees to each application. If there is no agreement, the AGI lies unused. You could enforce this unanimity by locking the AGI away on the most secure servers and giving the members of the AGISC separate parts of a secret key, or something like this. There are clearly some things that would be unanimous, like cancer research. Some things, like military applications, would be less likely to make it through. This body already seems more legitimate than Onus, since its legitimacy is derived from that of existing states. At the same time, there is often still some disconnect between international politics and what domestic citizens want.
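To make the "separate parts of a secret key" idea a little more concrete, here is a minimal sketch of one way it could work. It assumes an all-or-nothing XOR secret-sharing scheme, which is my illustration rather than anything the proposal specifies. The function names (`xor_bytes`, `split_key`, `combine_shares`) and the idea that a single byte-string key unlocks the AGI are hypothetical.

```python
import secrets
from functools import reduce


def xor_bytes(a: bytes, b: bytes) -> bytes:
    """XOR two equal-length byte strings."""
    return bytes(x ^ y for x, y in zip(a, b))


def split_key(key: bytes, n_members: int) -> list[bytes]:
    """Split `key` into `n_members` shares; ALL shares are needed to recover it."""
    if n_members < 2:
        raise ValueError("need at least two members")
    # The first n-1 shares are uniformly random and reveal nothing on their own.
    shares = [secrets.token_bytes(len(key)) for _ in range(n_members - 1)]
    # The last share is the key XORed with every random share, so that
    # XORing all n shares together cancels the randomness and yields the key.
    last = reduce(xor_bytes, shares, key)
    return shares + [last]


def combine_shares(shares: list[bytes]) -> bytes:
    """Recover the key by XORing every member's share together."""
    return reduce(xor_bytes, shares)


# Hypothetical usage: a five-member council, each holding one share.
master_key = secrets.token_bytes(32)
council_shares = split_key(master_key, 5)
recovered = combine_shares(council_shares)
```

Under this scheme, any proper subset of the shares is statistically independent of the key, so a single dissenting member withholding its share is enough to block use. A real arrangement would presumably need much more than this (hardware protections, per-application authorization rather than one master key, and a plan for lost shares), so treat it only as an illustration of the unanimity property.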

I’m not particularly attached to my proposal above; the point is that we want to be moving more in the direction of a body that has legitimacy.

We cannot afford to defer the planning of such a body until after we create an aligned AGI. First, the creation of such a body is going to require a lot of time. There are a lot of details to be hashed out, and it remains to be seen if it’s possible to get the most powerful states on board. We wouldn’t want a repeat of the League of Nations. There are tons of logistical details too, like how exactly would Onus transfer control of the AGI safely?

Second, we wouldn’t have the luxury of time once an aligned AGI has been created. Onus will have to make consequential decisions about how to deal with conflict over controlling the AGI, which is the subject of the next section.

Conflict over control

2. How should we deal with conflict over control of the AGI?

Onus can’t simply sit on their hands once they have the AGI. If the AGI is indeed a powerful general-purpose technology, it would likely grant enormous economic and strategic advantages. It is almost certain that other actors would try to gain control of it, including but not limited to:

Powerful state actors like the US and Chinese governments;

Non-state actors like terrorist groups;

Other companies.

Onus will likely have to endure cyberattacks at the very least, and will have to defend itself more successfully than some large, well-funded corporations have. Even if they manage to secure the AGI from cybertheft, if Onus is based in the US, it seems plausible that the US government could drum up a national security reason to seize the AGI anyway.[2]

Even if Onus somehow manages to guard the AGI against seizure (perhaps by keeping the AGI secret), somebody else might develop an aligned AGI too! Given that OpenAI and DeepMind often seem to try to one-up each other with capability advances, the lead time for Onus could be as short as a few months. If another independent tech company with AGI comes into the picture, it would be more difficult to secure the AGI against undesirable proliferation.

The point is that we want to avoid a situation where the decision of who should control the AGI will have been made for us. It’s not just that a terrible group might end up in control of the AGI and cause mass havoc, whether it’s the US/Chinese militaries waging illegitimate wars, terrorist groups launching bioweapons, or an unstable world with multiple AGI-powered actors. These consequences would surely not be great.

But I want to point at the fact itself of losing collective decision-making power over as consequential a decision as who should control an AGI. It’s bad enough that Onus is the sole controller to begin with. It would be even worse if an AGI were controlled by a body that, upon deliberation, we should not have given control to. We would likely not be able to wrest control away from the US government, for example, if it got its hands on an AGI. It would be unjust to subject all of humanity to the arbitrariness of a world-altering, democratically illegitimate decision.[3]

Getting everybody on board the alignment train

3. How do we prevent risks from competitive dynamics?

Even supposing that we have a good plan to transition from Onus to a more democratically legitimate body, an additional problem arises from the competitive dynamics of other companies trying to develop AGI too.

I’m going to sketch two broad types of alignment solutions. The first type is a “solve it once and for all” solution. This type of solution works perfectly no matter the (deep learning) model you apply it to, for as capable a model as you want. In other words, there is no trade-off between the capability of a model and how aligned it is. It’s unclear whether such a solution exists.

The second type of alignment solution is more like, “the model is aligned as long as it can’t do XYZ and we have measures ABC to ensure that it can’t do XYZ”. In other words, we can only certify alignment up to some model capability threshold. If the model were to somehow push above that capability threshold, we would not be able to guarantee alignment, and might have an existential risk on our hands. This type of alignment solution seems more plausible at least for the moment, given the current state of alignment research.

If Onus’s alignment solution is more like the second type, there is no guarantee that other companies competing to develop AGI would use it, even if Onus released it. Each company has an incentive to make their model more capable in order to capture more market share and increase their profits. Pushing models further may invalidate the alignment guarantee (again, to the extent that such a guarantee is possible) of Onus’s alignment solution. This pushing might be ok if improved alignment solutions are developed too, but I see at least two additional issues.

It’s easy to be careless. Especially when windfall profits are at stake, there will be a big incentive to move fast and beat out the competition. An improved alignment technique might have a fatal flaw that is not apparent when reasoning is profit-motivated.

There may not be an alignment solution of the second type that works for models that are capable enough. For example, models that are good enough at being deceptive might not yield to interpretability tools. We may just have to really figure out agent foundations.

It seems like a bad idea to leave the result of these competitive dynamics to chance, so what should we do? If it is public knowledge that Onus has the AGI, one forceful solution would be to get everybody, governments and companies included, to stop their AGI projects. This proposal is incredibly difficult and runs the risk of being democratically illegitimate itself. I don’t think I’d want Onus taking unilateral action, for example, to ensure that nobody else can have an AGI. We would have to convince key officials of the importance of some measure, figure out what we should do, and find ways to enforce it, perhaps through compute surveillance (which would be a tough sell too!). In any case, if the lead time of Onus is just a few months, there would be little time after the development of AGI to figure out how to resolve competitive dynamics.

Some objections

We can get the AGI to figure things out for us

One argument for deferring the questions I’ve discussed is that if we have an AGI, we can just get it to figure out the best democratically legitimate body that should control it and how to get to that point. If the AGI is much more capable than humans, it should be able to figure things out much more quickly. It should also at the same time be able to prevent itself from being stolen, such as by shoring up cybersecurity. So an AGI would both help us to solve the problem at hand and make it much less time-sensitive.

The first problem is that people are likely to be suspicious of any plan coming out of Onus and/or the AGI. In addition to general public mistrust of tech companies, I think the weirdness of an AGI coming up with a plan would be a big initial barrier to overcome. Maybe the provenance of an idea shouldn’t matter, but I think it often does, for better or for worse.

Maybe the AGI is able to come up with ways to overcome suspicion and get key people to follow along with the plan. I think it’s possible, but depends on how capable the initial AGI is, and how high the ceiling is for convincing people. Although the first aligned AGI will probably be better than humans at convincing other humans about things, I don’t know if it’ll be a god at convincing people through legitimate means like rational argument. It might be quite effective at deceiving people to go along with a plan, but this whole scenario seems complex enough that my heuristic against deception for apparently justified ends kicks in.

The second problem is that for the AGI to make the problem of who should control the AGI less time-sensitive, it would probably have to engage in violence or the credible threat thereof. How would an AGI avoid seizure by the US government otherwise? But such a use of force would be wildly illegitimate and not a norm we should set. For human overseers of the AGI, the first use of force makes it easier to justify subsequent uses.

It’s not hard for Onus to avoid major unilateral action for a while

Even if Onus is a democratically illegitimate body, it should be easy for the people there to avoid major unilateral action with the AGI. Assume that Onus is able to avoid seizure of the AGI in a peaceful manner and convince people to follow whatever plan it comes up with. Maybe the AGI could come up with good ways to achieve these ends. At that point, the people at Onus could just wait things out, so what problem is there?

I think this idea sounds good, but is implausible. I’m assuming that Onus runs basically top-down, with a CEO or perhaps the board of directors calling the shots. With this governance structure, I fear the temptation to bring about one’s own vision of the good would be too strong. These people would probably not have been selected as leaders because they were able to represent a broad array of interests and exercise power with restraint. They were more likely selected because they were able to pursue single-mindedly a narrow set of interests much more effectively than others could. It’s not necessarily that these people are evil.[4] Rather, they seem unlikely to me to act in a restrained way with newfound power. That’s strike one.

Strike two is that the corrupting influence of power (and what power the AGI would bestow!) renders benign action implausible. The allure to do more than sit and wait would be nigh irresistible. Imagine that you have the AGI in front of you. You see all the suffering in the world. You believe it’s your moral obligation to do something about the suffering with your newfound power. Because really, wouldn’t it be?

You start small. You ask the AGI to develop vaccines or treatments for the 100 deadliest diseases. It succeeds, but you cannot actually get those treatments used because of inertia from existing institutions. You need to institute some changes to the health system. If you don’t, then what was the point of coming up with vaccines? People are suffering, and you’re sure that change would be good. You get the AGI to come up with a plan and cajole officials in power to accept it. Parts of this plan involve some minor threats, but it’s all for the greater good. The plan succeeds.

You grow emboldened. It seems to you that a major source of adverse health outcomes is wealth inequality. If there were more redistributive policies, people would be able to afford health care, or it would be freely provided in any case. The only way to enact this change is through government. You’re just a citizen who happens to have more intelligence at their disposal than others, you reason, so you get involved in government. You lobby for more political influence. Maybe you manage to get your preferred candidate into the executive, where you’re able to pull a bunch of strings. Better you and the AGI than some incompetent others, anyway. An international crisis occurs. Since you have the AGI and you’re basically in the executive, you decide to resolve the crisis as you see fit and create some new global institutions. You have remade vast parts of the world in your image.

One objection to this story is that at any point, you could have asked the AGI to help you deliberate about what actions would be bad for the bulk of humanity. Even if you have remade the world in your image, would it really be that bad with an AGI that was an expert moral deliberator?

Even if we had an expert moral deliberator, the answer is still yes to me. Democracy is a fundamental value. Acting unilaterally with untrammeled power thwarts democracy. But maybe we could get the expert moral deliberator to interact with everybody and convince them. That would be more legitimate. However, I’m not sure we could or would build such a moral deliberator. Let’s recall strong alignment.

strong alignment is about an AI that does what humans collectively value, or would value if they were more enlightened, and likely involves solving thorny problems of moral philosophy.

I don’t know how difficult strong alignment would be relative to weak alignment (what I have simply been calling alignment so far). Even if it were easy, I’m not confident that people building AI systems would actually build in strong alignment. With ChatGPT and Anthropic’s chatbot, the trend seems to be to have chatbots refrain from taking one position or another, especially when there is a lot of moral ambiguity. Without strong alignment, the moral advice of the AGI would likely sound much less decisive.

Conclusion

Let me summarize. Democratic legitimacy is the principle undergirding my belief that Onus should not be the sole controllers of an AGI. We also need to prevent the risks from competitive dynamics in a way that is democratically legitimate. Empirical beliefs about the reactions of other actors suggest to me that there would be little time to figure out solutions for these issues after the development of AGI. In response to some objections, I laid out my beliefs on the corrupting influence of power.

I think most disagreements with this piece probably stem from the importance of democratic legitimacy, whether power would actually corrupt, and the capabilities of an AGI. To conclude, here are some things that would contribute to changing my mind about the urgency of addressing the questions I have raised relative to work on alignment.

Comprehensive and good-faith efforts by top AI companies, in collaboration with governments and civil society, to address the questions raised here, such as by broadening the board of directors to include a diverse array of public interests or publicly committing to an externally vetted plan to implement alignment solutions, even if they reduce capabilities, and to hand over the AGI at some point.

Convincing evidence that strong alignment is possible and that AI developers would want to build such models. Evidence of the first could be having future models figure out some central problems in moral philosophy, with the results widely accepted.

Evidence that one company has a lead time of at least several years (or, the expected amount of time it would take to create a more democratically legitimate body to control the AGI) over others in the development of AGI.

In the absence of the above, I think more people should be working on figuring out who should control an aligned AGI, how we can transition control to that more democratically legitimate body as peacefully as possible, and how to prevent the worst of competitive dynamics.

Acknowledgements

Thanks to Lauro Langosco for bringing up the point about competitive dynamics between companies developing AGI. I first heard of safety-performance trade-offs from David Krueger. Thanks as well to Max Kaufmann and Michaël Trazzi for proofreading this post.

[1] I do think that when distilled to this core, alignment is basically unobjectionable, aside from a general objection to building powerful AI systems that can be misused and create perverse incentives.

[2] The US government has engaged in constitutionally questionable actions before. Take PRISM, for example.

[3] We probably can’t get everybody in the world to vote on who should control an AGI, and it probably wouldn’t be desirable in any case in our world today. We can get democratic legitimacy through other means, like through deliberation between democratic representatives and experts.

[4] But they might very well be! We should not discount the risks from bad actors so lightly.

Three sentences earlier:

So, I’m confused about this idea that we’re going to build an AI that does what you want and doesn’t do bad things that nobody would want, without needing to make any progress on thorny philosophical problems related to what counts as bad things nobody would want.

My main-line expectation is that if it seems like we have produced an AI that does all that good stuff without needing to have solved hard problems, and that AI causes large changes to the world, then bad things that nobody would want will happen despite superficial reassurances that that’s unlikely.

I think we should treat companies and states as transitional, as is human control. The long term future should be a friendly superintelligence. Suppose Onus makes the AI. They keep the AI, working on the superintelligence. A foomed superintelligence utterly transforms the world; companies and money become irrelevant.

If the AI is in the hands of the governments, we get awkward, worst of both worlds kludges dreamed up based on political reasoning by non-experts.

If the AI is in the hands of a team of smart ethical people that pragmatically have a lot of slack, then good things can happen.

This could be a company. Any company with AGI could easily make a few billion on the side to amuse shareholders. It isn’t like the human programmers are compelled to turn the universe into banknotes. The group could be a charity. It could be government funded. It could be all the experts burning personal savings to get together and work on the AI. So long as the decisions are technical choices, not political compromises.

Hmm, I feel like there’s some misunderstanding here maybe?

What you’re calling “strong alignment” seems more like what most folks I talk to mean by “alignment”. What you call “alignment” seems more like what we often call “corrigibility”.

You’re right that corrigibility is not enough to get alignment on its own (i.e., that “alignment” is not enough to get “strong alignment”), but it’s necessary.

I have the opposite impression. “Alignment” is usually interpreted as “do whatever the person who gave the order expected”, and what the author calls “strong alignment” is an aligned AGI ordered to implement CEV.

I think this is because there’s an active watering down of terms happening in some corners of AI capabilities research as a result of trying to only tackle subproblems in alignment and not being abundantly clear that these are subproblems rather than the whole thing.

I don’t see how we could have a “the” AGI. Unlike humans, AI doesn’t need to grow copies. As soon as we have one, we have legion. I don’t think we (humanity as a collective) could manage one AI, let alone limitless numbers, right? I mean this purely logistically, not even in a “could we control it” way. We have a hard time agreeing on stuff, which is alluded to here with the “value” bit (forever a great concept to think about), so I don’t have much hope for some kind of “all the governments in the world coming together to manage AI” collective (even if there was some terrible occurrence that made it clear we needed that, but I digress).

I would argue that alignment per se is perhaps impossible, which would prevent it from being a given, as it were.