How I’m telling my friends about AI Safety

One of the comments on the new book post asked how to tell normie friends about AI safety. I don’t have any special credentials here, but I thought it’d be worthwhile to share the Facebook post I’ve drafted, both to get feedback and to give an example of one way a post could look. There exist articles and blogs that already do this well, but most people don’t read shared articles and it’s helpful to have a variety of ways to communicate. My goal here is to grab attention, defuse defensiveness with some humor, and try to make the problem digestible to someone who isn’t immersed in the topic or lingo. Let me know what you think!

Why AI might kill us all

Dramatic opener – check. I don’t post often, especially not about “causes”, but this one feels like a conversation worth having, even if we sound a little crazy.

TL;DR – AI is (and will be) a really big tool that we need to be super careful wielding. Corporations aren’t going to stop chasing profit for us, so talk to your representatives, and help educate others (this book is coming out soon from top AI safety experts and could be a good resource).

Wouldn’t it be awesome to own a lightsaber? You could cut through anything! Unfortunately, knowing me, I’d smush the button while trying to get it out of the packaging and be one limb on my way to becoming Darth Vader.

AI is like a lightsaber. Super cool. Can do super cool stuff. Turns out, “cutting off your own limbs” technically counts as “super cool”.

Here’s another analogy: there’s a story about “The Monkey’s Paw” – an old relic that grants the user 3 wishes, like a genie. The catch is, the wishes always come with some bad consequence. You wish for $1 million – congrats – that’s the settlement amount you get for getting paralyzed by a drunk driver!

It occurred to me the other day that to make a Monkey’s Paw, you don’t need to tell it to do bad things, just to be overly literal or take the easiest path to granting the wish. “I wish that everyone would smile more” → overly literal interpretation → virus breaks out that paralyzes people’s facial muscles into a permanent smile.

AI is a wish-granting computer program. Right now, it can’t do much – it can only grant wishes for pictures with the wrong number of fingers and English class essays, but when it can do more, watch out. It’s a computer – it will be overly literal.

And computers DON’T know what you mean. Ask any programmer – you never see the bug coming, but it’s always doing exactly what you asked, not what you meant. We’re just lucky that we currently can’t program reality.

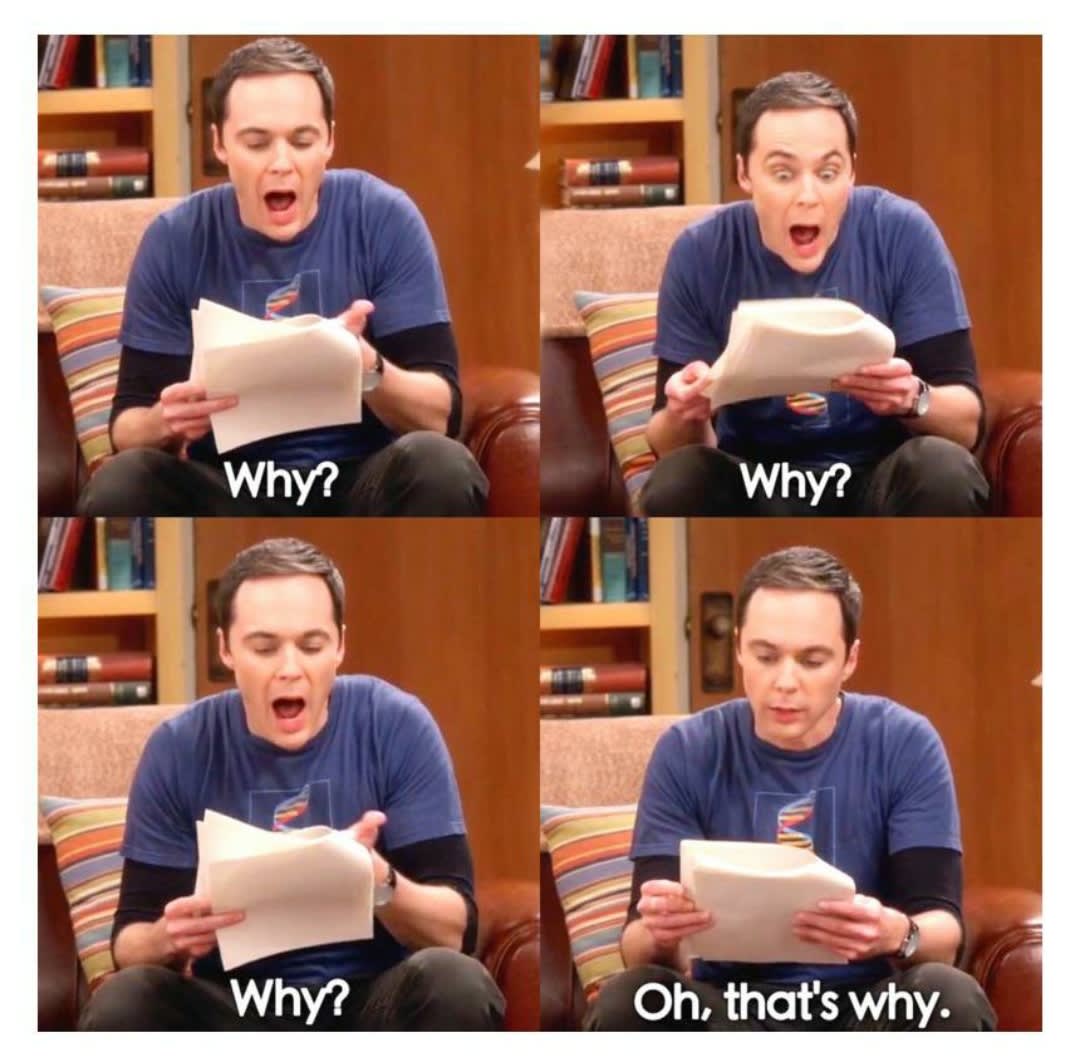

When your program isn’t doing what you want:

The Real Dangers

What are NOT the dangers of AI:

Killer Robots

It “turns evil”

It gains consciousness and wants freedom

What are the dangers of AI:

Humans are not ready to get what they ask for.

Now that we know what the danger is, let’s unpack how it can happen.

How things go wrong:

Misalignment – aka getting what we asked for, not what we wanted

Intelligence Explosion

Arms Race

Intro: Human intelligence is not the top

I’m going to keep talking about the possibility of a single AI beating all of humanity, and maybe that sounds far-fetched – so why is that possible? It’s easy to think that Einstein is the top of the intelligence scale. But before him, it was easy to think that Ugg the caveman was the top, and Ooh-ah the chimp, and Ritters the rat, and Leo the lizard, and Fred the fish, and Sammy the shrimp. There has always been a top to the intelligence scale, until something else set it higher. If the history of computers mopping the floor with humans at activities that people said computers could never beat us at is not enough to convince you that humans are not the top, let physics do that. Circuits are over 1000 times smaller than neurons and can fire at a frequency over 10 million times higher. And they don’t spontaneously decay if you stop giving them oxygen. If nothing else, you could literally build a building-sized brain out of human neurons, and if you see the power that a little increase in brain size gave us over animals, you’ll realize this is no joke. And yeah – no one is trying to build that brain right now, precisely because they all realize that the circuit brain will be smarter.

So yes, if we built a super-intelligent AI and it was us against it, that’s like insects against a human – except that as the top of our food chain, we didn’t bother evolving the ability to fly, hide, or reproduce really quickly.

Misalignment – Getting Exactly what you ask for

I talked about this above with the monkey’s paw, but I want to give some examples to show just how easy it is for wishes to go wrong when genies get powerful.

Paperclips. A company makes an AI to produce paperclips. “Make as many paperclips as possible” they tell it. If that AI is smart enough to outsmart us and achieve this goal, we all get turned into paperclips.

World peace. It’s tempting to think that we should make sure AI is used altruistically instead of selfishly, but altruistic wishes can make some of the worst mistakes. “AI, give me as much beer as I can drink” is selfish, but actually relatively harmless as a wish. Sure, the AI might steal it, but it’s unlikely that anything worse than one case of theft and alcoholism will result from this wish. “I wish for world peace”, on the other hand, is different. Take a moment to think about the easiest way to achieve that. Got it? Did you think “kill everyone”? Because a computer would. Ok, fine: “I wish for world peace and no humans to die”. What happens now? Yep, we’re all in comas. “I wish for world peace and no humans to die and us to all remain conscious”. Oof, this one goes really badly. We’re now all paralyzed, kept alive and conscious, until the sun eats the earth in 5 billion years. Congrats, you just invented Hell.

In addition to specific bad wishes, there are some common factors that make most wishes go badly.

Matter. Many wishes are about the re-arrangement of matter. Make stuff, build stuff, fix stuff, etc. Unfortunately, we are made of matter, so taken to the extreme, these wishes get our matter re-arranged, and we die.

Computing Power. Ok, we avoided wishing for changes to matter – we wished for math solutions. It turns out, almost all wishes benefit from having more computing power. And what are CPUs made of – yep, matter. Consolation prize – if you weren’t good at math in life, you are in death!

Getting shut off. It turns out, almost any wish you can think of is more likely to get fulfilled if the AI doesn’t get shut off. And who could shut it off – yep, humans. So the AI has to kill or disable us to ensure that it can work on your wish without getting shut off.

Intelligence Explosion

Ok, so when AI gets really powerful, this could be an issue. Luckily that’s probably a ways from now and the first couple times this happens the AI won’t be strong enough, so we’ll be able to learn, right?

Not exactly. AI can get better than humans at lots of things: Chess, aviation, programming AI, golf, running a business. But one of these things is not like the others. Nothing magical happens when AI gets better than humans at golf. But when it gets better than humans at programming AI… It can then make a slightly smarter AI, which makes an AI somewhat smarter than it, which makes an AI solidly smarter than it, which makes an AI way smarter than it, which makes an AI astronomically smarter than it. And all of this could happen at the speed of computers.

This concept is referred to as an “intelligence explosion”. And it could happen over the course of hours if the limiting factor is better code, or over months if the limiting factor is better hardware – but either way, things can get out of hand very quickly.

Arms Race

Ok, so when AI gets close to as smart as humans, then we’ll all just pause on it and make sure it is completely safe before continuing – that sounds like a good plan. Except, there’s a problem – the company that doesn’t make the next smarter AI is going to lose out and possibly go out of business. And, if they don’t do it, then the next company will, so what’s the point of dying on that hill?

Oh, and even if we get all AI companies to be non-profit and make sure all our AI researchers care about safety – what about China – they could make a smarter AI and make wishes that threaten our national security – surely that’s worth taking a little risk for. And if we get all the treaties in place and make sure everyone is on board with safety – all it takes is one person. One crazy person or one Putin who would rather watch the world burn than lose to others, to build an AI and tell it “Keep editing yourself to get as smart as you can”. So, then all the good, careful, moral AI researchers are in a dilemma – how do you stop that from happening? How do you prevent anyone anywhere in any basement of the world from doing this? Well, you could make a super powerful AI and have it stop other AI development – and so the arms race continues.

And, as with most things, safety and speed are not especially compatible.

If you made it this far, thank you! A couple more notes:

Even if you think there’s a low probability of this happening – I think you’ll agree that it doesn’t hurt to be safe and that we shouldn’t rely on the altruism of corporations for our future.

Apart from the existential risks posed by AI, there are plenty of other risks and reasons to support AI safety – economic turmoil, wars, political manipulation, and abuse of power, to name a few.

As mentioned in the TL;DR, there’s [a book](https://ifanyonebuildsit.com/?ref=nslmay#preorder) coming out by some of the experts in AI safety. It probably has better explanations than I have and additional info or insights from the experts. Feel free to get it if you want to learn more or use it to tell others. (I have no affiliation with it – I just like other stuff I’ve read by the same author).

If you agree with the importance of AI safety, talk to your friends and representatives. You don’t need to do anything big – the important thing is that we get everyone in on this conversation instead of leaving it to corporations to decide. And let me know if you have any ideas about what else we can do!

If you liked my post, yes, you are definitely allowed to share it – I’d be honored.

Traditional non-AI computers don’t. AGIs do, since understanding non-literally is part of general intelligence. That is an old, bad argument.

If the default ASI we build will know what we mean and not do things like [turn us into paperclips], which is obviously not what we intended, then AI alignment would be no issue. AI misalignment can only exist if the AI is misaligned with our intent. If it is perfectly aligned with the user’s intended meaning but the user is evil, that’s a separate issue with completely different solutions.

If instead, you mean something more technical, like, it will “know” what we meant but not care, and “do” what we didn’t mean, or that “literal” refers to language parsing, while ASI misalignment will be due to conceptual misspecification—then I agree with you but don’t think that trying to make that distinction will be helpful to a non-technical reader.

It’s not a fact that the default ASI will be aligned, or even agentive, etc., etc. It’s no good to appeal to one part of the doctrine to support another, since it’s pretty much all unproven.

I mean it’s a bad, misleading argument. The conclusion of a bad argument can still be true...but I don’t think there are any good arguments for high p(doom).

The first (down)vote coming in made me realize an issue. I assume that the vote indicates the quality of my content, aka whether I should post this to Facebook. I’m nervous about sounding crazy to my friends anyway, and I certainly don’t want to post something that might be net harmful to AI safety advocacy!

However, it occurs to me that it is also possible that the vote could mean something else like “this topic isn’t appropriate for LW”. In an effort to disambiguate those, please agree or disagree with my comments below.

This post is good (disagree for bad) for some other reason.

This is helpful for LW readers.

I should post this to Facebook.