There’s an interesting preprint out by Liu, Iqbal, and Saxena: “Opted Out, Yet Tracked: Are Regulations Enough to Protect Your Privacy?” They describe an experiment they ran to figure out whether your choices on consent dialog boxes actually do anything. The idea is that if the dialogs work, you’d expect to see consistently lower bids in the “opt out” case than in the “opt in” case, since advertisers would know less about you. While I think the experiment wasn’t quite right, with a few tweaks it would be really informative.

The basic design makes sense:

1. Limit to publishers who run client-side auctions (“header bidding”) so you can see the bids.

2. Visit some sites on a range of topics so advertisers can start estimating your interests and decide to market to you. (And also a control group that skips this step.)

3. Visit a publisher and see how much advertisers bid if you opt in vs opt out.
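To make the comparison in the last step concrete, here’s a minimal sketch of how you might summarize the bids once you’ve collected them. The function name, the use of medians, and the numbers are all my own illustration, not the paper’s method or data:

```python
from statistics import median


def compare_bids(opt_in_bids, opt_out_bids):
    """Return (median opt-in bid, median opt-out bid, opt-out/opt-in ratio).

    Hypothetical helper: a ratio well below 1 would suggest that opting out
    lowers bids; a ratio near 1 would suggest the choice changes little.
    """
    med_in = median(opt_in_bids)
    med_out = median(opt_out_bids)
    return med_in, med_out, med_out / med_in


# Made-up CPM bids in USD, purely for illustration.
opt_in = [0.40, 0.55, 1.20, 0.80]
opt_out = [0.10, 0.15, 0.30, 0.20]

med_in, med_out, ratio = compare_bids(opt_in, opt_out)
print(f"opt-in median: {med_in:.3f}, opt-out median: {med_out:.3f}, ratio: {ratio:.2f}")
```

I’d use medians rather than means here because a handful of very high bids can otherwise dominate the comparison, but that’s a judgment call.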

The main issue I see is that their implementation of the second step, building up an interest profile, was probably not good enough to get advertisers excited about bidding specifically for their views:

> 3.3.1 Simulating Interest Personas. Since advertisers bidding behavior is different for different user interests, we simulate 16 user interest personas to capture a wide spectrum of bidding behavior. User personas are based on 16 Alexa top websites by categories lists. To simulate each persona, we initialize a fresh browser profile in an OpenWPM instance, on a fresh EC2 node with a unique IP, iteratively visit top-50 websites in each category, and update browser profile after each visit. Our rationale in simulating personas is to convince advertisers and trackers of each persona’s interests, so that the advertisers bid higher when they target personalized ads to each persona. In addition to the above-mentioned 16 personas, we also include a control persona, i.e., an empty browser profile. Control persona acts as a baseline and allows us to measure differences in bidding behavior.

The sixteen personas they used were “Adult, Art, Business, Computers, Games, Health, Home, Kids, News, Recreation, Reference, Regional, Science, Shopping, Society, and Sports”.

These are pretty bland, and I wouldn’t expect advertisers to change their behavior much just because you’d visited some sites in those categories. This study would have been much better if they’d used sites with very strong commercial intent and high profit margins, like mattresses, car sales, or credit cards. Here’s a test you can run at home:

Open a new private browsing window and make sure third party cookies are enabled.

Search for either “sports” or “mattresses” and open the top three results.

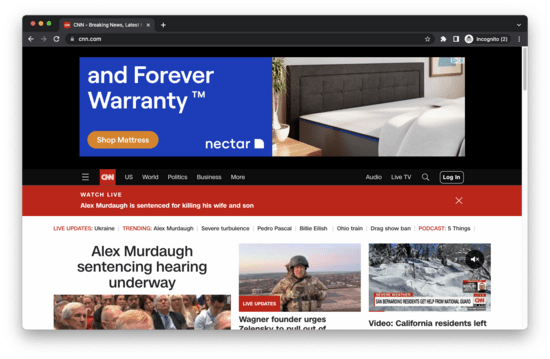

Open cnn.com or another site with pretty normal ads and see what you get.

When I do this with “sports” I get ads that don’t seem to have anything to do with sports. When I do it with “mattresses” I get a ton of big (high bid!) ads for mattresses:

So I’d be very interested to see a repeat of this study where instead of using the 16 generic Alexa categories they used specific high-value categories, with a screenshot step to check whether the ads were likely informed by those categories.

I’d also like to see a different control persona, where instead of using an empty browser profile they visited several sites with minimal commercial implications. I’m worried that their control is getting lower bids because it looks so obviously like a bot, not because it has no personalization history.

(Disclosure: I used to work on ads at Google; speaking only for myself. I don’t work in the ad industry anymore and have no plans to return.)

Did you send this to the authors? Most academics would jump at a simple tweak for a new publication.

Yup! Sent them an email with a short summary and a link.