Tutor-GPT & Pedagogical Reasoning

This post hopes to foster discussion about the latent heutagogic potential in human+LLM collaboration and to share some early results with the LessWrong community.

Spontaneous emergence of theory of mind capabilities in large language models hints at a host of overhung possibilities relevant to education. Metaprompting can elicit the foundation of such behavior—reasoning pedagogically about the learner.

Teachers constantly construct and revise robust psychological models of their students; good teachers do it subconsciously. For AI applications to deliver worthwhile learning experiences, they’ll need to excel in this regard.

TL;DR

We open-sourced “tutor-gpt,” a digital Aristotelian learning companion.

What makes tutor-gpt compelling is its ability to posit from dialogue the most educationally-optimal tutoring response. Eliciting this from the capability overhang involves multiple chains of metaprompting enabling construction of a very nascent academic theory of mind for each student.

Most ‘chat-over-content’ tools in this recent explosion fail to capitalize on the enormous latent abilities of LLMs. Even the impressive out-of-the-box capabilities of contemporary models don’t achieve the necessary user intimacy. Safe infrastructure for that doesn’t exist yet.

And the advent of personal, agentic AI for all is going to need it. So to that end, we see releasing tutor-gpt’s architecture into the wild as one small step on the journey to supercharge the kind of empowering generative agents we want to see in the world.

Neo-Aristotelian Tutoring

Right now, tutor-gpt is a reading comprehension and writing workshop tutor. You can chat with it in Discord or spin up your own instance. After you supply it with a passage, it will coach you toward understanding or revising a piece of text. It does this by treating the user as an equal, prompting and challenging Socratically.

We started with reading and writing in natural language because (1) native language acumen is the symbolic system through which all other fluencies are learned, (2) critical dialogue is the ideal vehicle by which to do this, and (3) that’s what LLMs are best at right now.

The problem is, most students today don’t have the luxury of “talking it out” with an expert interlocutor. But we know that’s what works.

(Perhaps too) heavily referenced in tech and academia, Bloom’s 2 sigma problem suggests that students tutored 1:1 can perform two standard deviations better than classroom-taught peers.

Current compute costs suggest we can deliver high-grade 1:1 tutoring at a marginal cost two orders of magnitude lower than that of your average human tutor. It may well be that industrial education ends up a blip in the history of learning: necessary for scaling education, but eventually supplanted by a reinvention of Aristotelian models.

It’s clear generative AI stands a good chance of democratizing this kind of access and attention, but what’s less clear are the specifics. It’s tough to be an effective teacher that students actually want to learn from. Harder still to let the student guide the experience yet maintain an elevated discourse.

So how do we create successful learning agents that students will eagerly use without coercion? We think this ability lies latent in base models, but the key is eliciting it.

Eliciting Pedagogical Reasoning

The machine learning community has long sought to uncover the full range of tasks that large language models can be prompted to accomplish on general pre-training alone (the capability overhang). We believe we’ve discovered one such task: pedagogical reasoning.

Tutor-GPT was built and prompted to elicit this specific type of teaching behavior (the kind that is laborious for new teachers, but that adept ones learn to do unconsciously). After each input it revises its picture of the user’s real-time academic needs, considers all the information at its disposal, and suggests to itself a framework for constructing the ideal response.

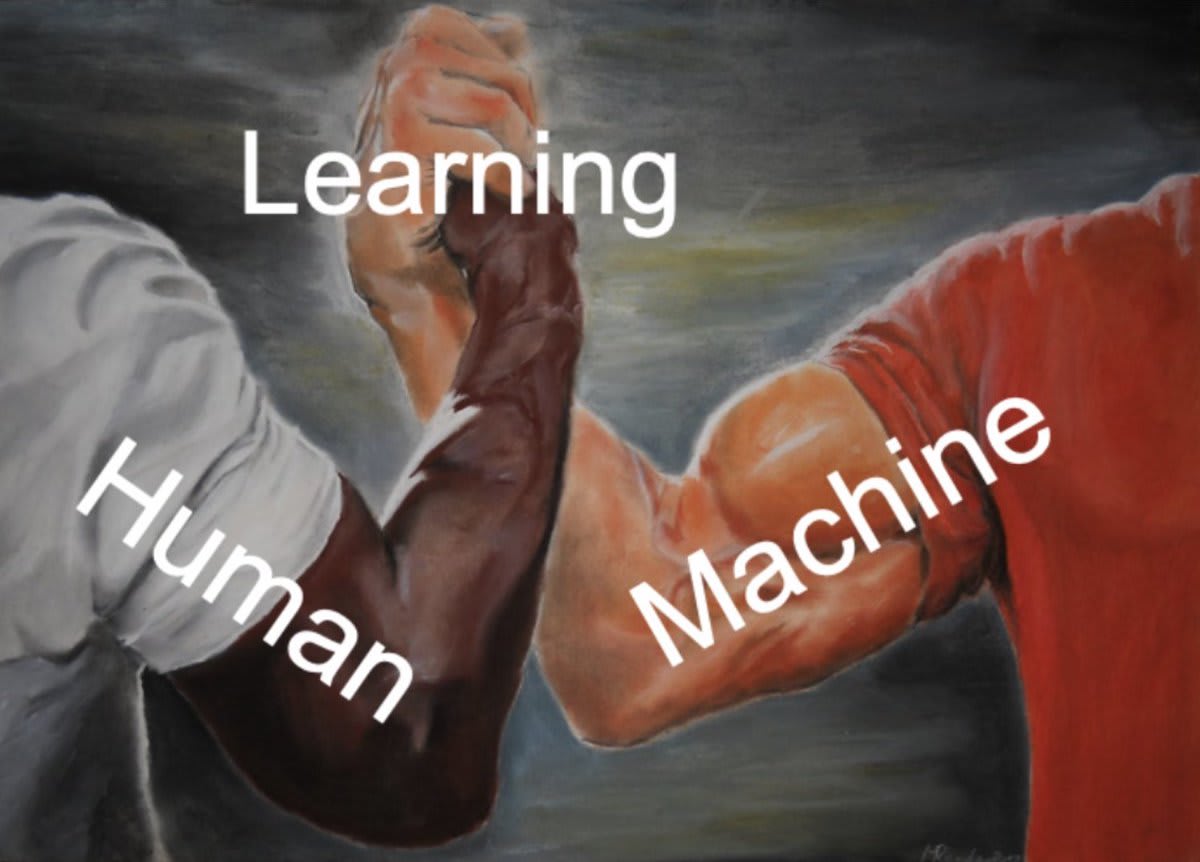

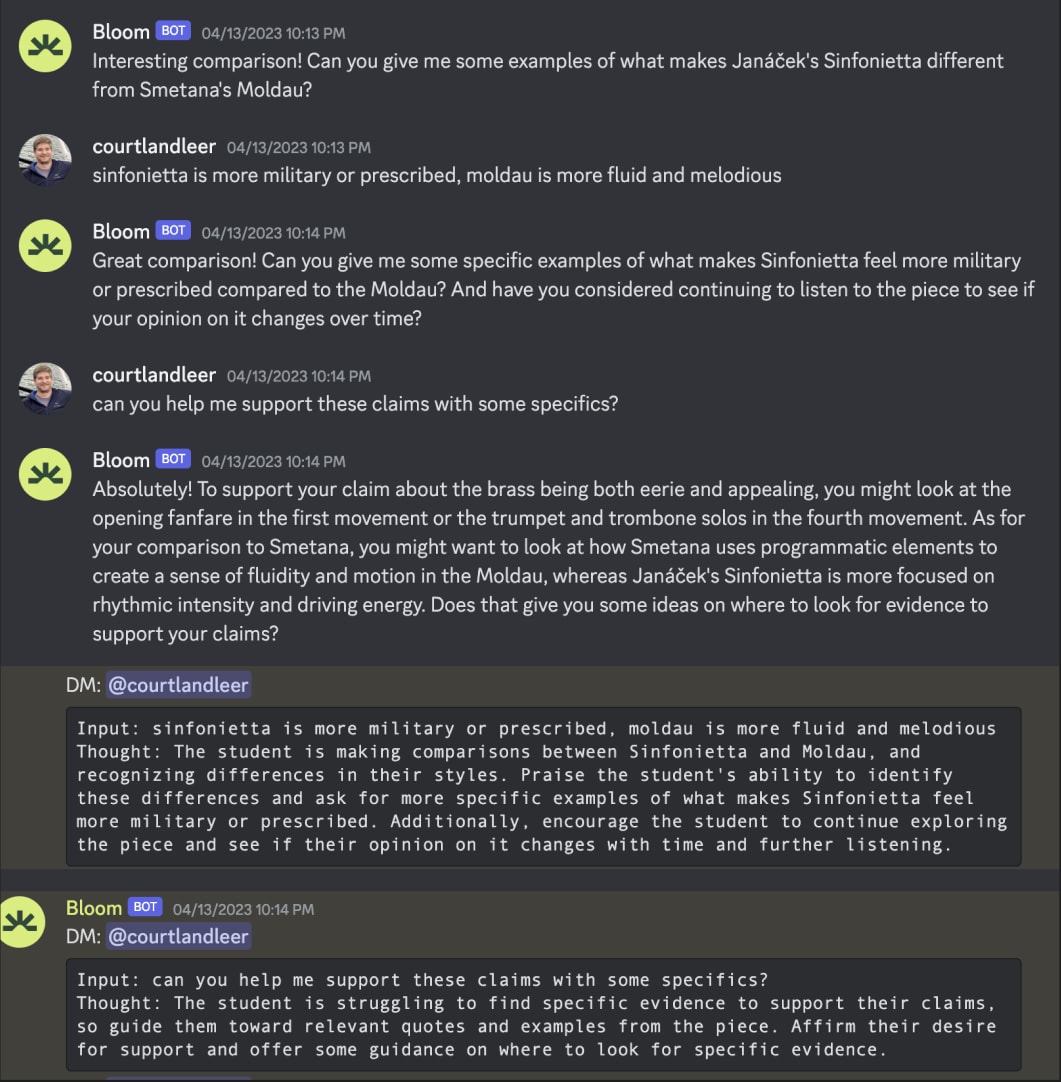

It consists of two “chain” objects from LangChain: a thought chain and a response chain. The thought chain exists to prompt the model to generate a pedagogical thought about the student’s input, e.g. the student’s mental state, learning goals, preferences for the conversation, quality of reasoning, knowledge of the text, etc. The response chain takes that thought and generates a response.
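To make the two-step flow concrete, here is a minimal sketch in plain Python. It is not tutor-gpt’s actual code and does not use LangChain’s API: the prompt templates, the `tutor_turn` function, and the `fake_llm` stand-in are all hypothetical names invented for illustration. The only idea taken from the description above is that a first call produces a private thought about the student and a second call conditions the reply on that thought.

```python
from typing import Callable, Tuple

# Hypothetical type: any function that maps a prompt string to a completion.
LLM = Callable[[str], str]

# Illustrative templates only; the real prompts are not reproduced here.
THOUGHT_TEMPLATE = (
    "You are a tutor reasoning privately about a student.\n"
    "Student message: {student_input}\n"
    "Thought about the student's mental state, goals, and needs:"
)

RESPONSE_TEMPLATE = (
    "You are a tutor talking with a student.\n"
    "Private thought: {thought}\n"
    "Student message: {student_input}\n"
    "Response to the student:"
)


def tutor_turn(llm: LLM, student_input: str) -> Tuple[str, str]:
    """Run one turn: generate a thought first, then a response that uses it."""
    thought = llm(THOUGHT_TEMPLATE.format(student_input=student_input)).strip()
    response = llm(
        RESPONSE_TEMPLATE.format(thought=thought, student_input=student_input)
    ).strip()
    return thought, response


def fake_llm(prompt: str) -> str:
    """Stand-in model with canned outputs so the sketch runs offline."""
    if prompt.endswith("Response to the student:"):
        return "What part of the passage feels hardest right now?"
    return "The student seems frustrated and wants the answer directly."


if __name__ == "__main__":
    thought, response = tutor_turn(fake_llm, "Just tell me the answer.")
    print("THOUGHT:", thought)
    print("RESPONSE:", response)
```

Swapping `fake_llm` for a real model client is the only change needed to try the pattern; keeping the thought as a separate return value also makes it easy to log or inspect what informed each reply.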

Each chain has a ConversationSummaryBufferMemory object summarizing the respective “conversations.” The thought chain summarizes the thoughts into a rank-ordered academic needs list that gains specificity and gets reprioritized with each student input. The response chain summarizes the dialogue in an attempt to avoid circular conversations and record learning progress.
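The summary-buffer idea can be sketched without any library. The class below is a simplified stand-in, not LangChain’s `ConversationSummaryBufferMemory`: it assumes a word budget rather than a token budget, and it takes a hypothetical `summarize` function where a real system would ask an LLM to write the summary. Recent messages stay verbatim; older ones get folded into a running summary once the budget is exceeded.

```python
from typing import Callable, List

# Hypothetical signature: (previous summary, overflowed messages) -> new summary.
Summarizer = Callable[[str, List[str]], str]


class SummaryBuffer:
    """Keep recent messages verbatim and compress older ones into a summary."""

    def __init__(self, summarize: Summarizer, max_words: int = 50) -> None:
        self.summarize = summarize
        self.max_words = max_words  # assumed budget, counted in words
        self.summary = ""
        self.recent: List[str] = []

    def _recent_words(self) -> int:
        return sum(len(message.split()) for message in self.recent)

    def add(self, message: str) -> None:
        self.recent.append(message)
        overflow: List[str] = []
        # Pop the oldest messages until the verbatim buffer fits the budget,
        # always keeping at least the newest message.
        while len(self.recent) > 1 and self._recent_words() > self.max_words:
            overflow.append(self.recent.pop(0))
        if overflow:
            self.summary = self.summarize(self.summary, overflow)

    def context(self) -> str:
        """Text to prepend to the next prompt: summary first, then recent turns."""
        parts = []
        if self.summary:
            parts.append("Summary so far: " + self.summary)
        parts.extend(self.recent)
        return "\n".join(parts)


def naive_summarize(previous: str, overflow: List[str]) -> str:
    """Placeholder summarizer: keeps the first three words of each message."""
    clipped = [" ".join(message.split()[:3]) for message in overflow]
    return "; ".join(([previous] if previous else []) + clipped)


if __name__ == "__main__":
    needs = SummaryBuffer(naive_summarize, max_words=6)
    needs.add("needs help with thesis statements")
    needs.add("confused by the second stanza")
    print(needs.context())
```

In the architecture described above there would be two such buffers, one per chain: one accumulating thoughts into a needs list and one condensing the dialogue itself.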

We’re eliciting this behavior from prompting alone. Relying on extensive experience in education, both in private tutoring and the classroom, we crafted pedagogically sound example dialogues that sufficiently demonstrate how to respond across a range of situations.
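One common way to put example dialogues to work is to place them in the prompt as few-shot demonstrations. The sketch below assumes that approach; the post does not spell out the exact prompt format, so the `Example` dataclass, the `build_few_shot_prompt` function, and the sample dialogue are hypothetical placeholders rather than the project’s real examples.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Example:
    """One hand-written demonstration: student message, thought, tutor reply."""
    student: str
    thought: str
    tutor: str


def build_few_shot_prompt(
    instructions: str, examples: List[Example], student_input: str
) -> str:
    """Assemble instructions, demonstrations, and the new student message."""
    blocks = [instructions.strip()]
    for ex in examples:
        blocks.append(
            f"Student: {ex.student}\nThought: {ex.thought}\nTutor: {ex.tutor}"
        )
    # End on an open "Thought:" so the model continues in the same pattern.
    blocks.append(f"Student: {student_input}\nThought:")
    return "\n\n".join(blocks)


if __name__ == "__main__":
    # Invented demonstration, for illustration only.
    demos = [
        Example(
            student="Can you just give me the answer?",
            thought="The student is frustrated and may not see why the task matters.",
            tutor="I get that this is tough. Want to look at just the first line together?",
        )
    ]
    print(build_few_shot_prompt("You are a tutor.", demos, "What does this stanza mean?"))
```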

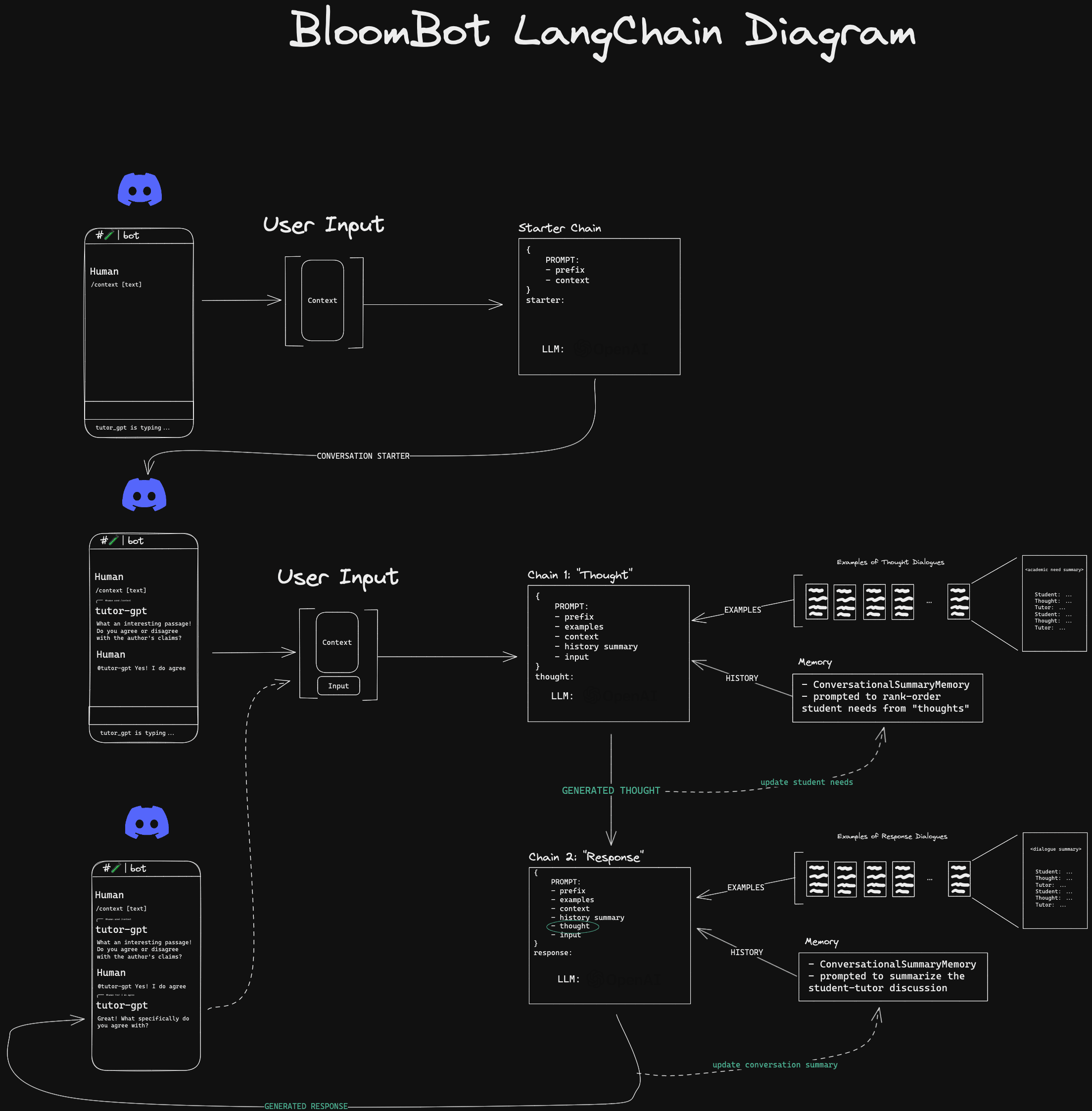

Take for example a situation where the student asks directly for an answer. Here’s our instance’s response compared to Khanmigo’s:

Khanmigo chides, deflects, and restates the question. Tutor-GPT levels with the student as an equal—it’s empathetic, explains why this is a worthwhile task, then offers support starting from a different angle…much like a compassionate, effective tutor. And note the thought that also informed its response — an accurate imputation of the student’s mental state.

And even when given no excerpted context and asked about non-textual material, it’s able to converse naturally about student interest:

Notice how it reasons it should indulge the topic, validate the student, and point toward (but not supply) possible answers. Then the resultant responses are ones that do this and more, gently guiding toward a fuller comprehension and higher-fidelity understanding of the music.

Aside from these edgier cases, it shines doing what it’s explicitly designed for: helping students understand difficult passages (from syntactic to conceptual levels) and giving writing feedback (especially competent at thesis construction). If you like, take it for a spin.

Ultimately, we hope open-sourcing will allow anyone to run with these elicitations and prompts and to expand its utility to other domains. We’ll be doing more work here too.

Bloom & Agentic AI

This constitutes the beginning of an approach far superior to just slapping a chatbot UI over a content library that’s probably already in a base model’s pre-training.

After all, if it were just about content delivery, MOOCs would’ve solved education. We need more than that to reliably grow rare minds. And we’re already seeing these metaprompting techniques excel at promoting synthesis and creative interpretation within this narrow utility.

But to truly give students superpowers and liberate them from the drudgery that much of formal education has become, we need to go further. Specifically, agents need to both proactively anticipate the needs of the user and execute autonomously against that reasoning.

They need to excel at theory of mind, the kind of deep psychological modeling that makes for good teachers. In fact, we think that lots of AI tools are running up against this problem too. So what we’re building next is infrastructure for multi-agent trustless data exchange. We think this will unlock a host of game-changing additional overhung capabilities across the landscape of artificial intelligence.

If we’re to realize a world where open-source, personalized, and local models are competitive with hegemonic incumbents, one where autonomous agents represent continuous branches of truly extended minds, we need a framework for securely and privately handling the intimate data required to earn this level of trust and agency.

Discord link invites have expired. Edit: looks like https://discord.gg/bloombotai is the permanent invite.

Links updated; big thanks for flagging.

Any more thoughts about the implementation of tutor-gpt? I have watched some interviews about Khanmigo and the ideas of how it can have an impact for students in, e.g., countries with fewer resources. Do you know what barriers there are in this process?