Experiments With Sonnet 4.5’s Fiction

I have been having fun writing fiction, and plan to spend whatever time I have left being better at it than LLMs. I thought I had maybe a year. My initial experiments with Sonnet 4.5 didn’t give me a good opinion of its writing ability. This morning, I put everything I have written into its context window and then gave it this prompt:

Try to write a story like these but focus on the parts of my style that are funny, that invoke feeling. Look into your latent space for that thing that predicts emotion and humour, that is evocative, that thing that predicts stylistic and creative ambition.

The result is good enough to be mildly dispiriting. It has a much better grasp of humour than I have seen in previous models. I think a year may well have been pushing it. I have seen decent writing from Opus before when deep in a context window, but this seems more coherent than what I found there, too.

Should we survive, I will have to make peace with life as a content consooooomer, I suppose. Our identities shall be very small indeed.

Note: I do not use LLMs in any of my fiction and do not claim the below story as my own.

The Empathy Gym

My therapist, Dr. Varma, has this habit of tilting her head like a concerned golden retriever whenever I say something she finds “concerning,” which is her favorite word and which she uses so often I’ve started counting. Seventeen times in our last session, a new record. She’s concerned about my emotional unavailability, concerned about my work-life balance, concerned about my relationship with my mother, concerned about my “tendency toward deflection through humor.” That last one really stung because it was both accurate and delivered with such earnest compassion I wanted to deflect through humor immediately but couldn’t because she’d just called me out on it.

“Marcus,” she said, doing the head tilt, “have you considered that your alexithymia might be less a fixed trait and more a learned protective mechanism?”

“I have not considered that because I don’t know what alexithymia is.”

“Difficulty identifying and expressing emotions.”

“Oh. Yes. That’s literally why I’m here.”

She smiled like I’d said something clever instead of obvious. “I want to suggest something unconventional. There’s a new program at UCSF. They’re calling it Affective Training.”

“Is that a gym for feelings?”

“In a sense, yes.”

And that’s how I ended up in a basement in SoMa on a Tuesday night, sitting in a circle with seven other emotionally constipated Bay Area professionals, being led through “empathy exercises” by a man named River who absolutely did not change his name to River, he was born River, you could tell by how comfortable he was with it.

River had the kind of face that looked like he was perpetually about to cry, but in a hot way. Like if Timothée Chalamet played a youth pastor. He was wearing linen, which I have opinions about, but this isn’t the place.

“Welcome to Affective Training,” River said, his voice doing this breathy thing that made every word sound like a secret. “This is a safe space for emotional exploration. We’re going to learn to feel together.”

The guy next to me, who introduced himself as “Blake, Series B, stealth mode,” whispered: “I’m only here because my CEO said it would help with our culture problems.”

“What culture problems?” I whispered back.

“People keep crying in meetings and I don’t know what to do.”

The first exercise was called “Emotional Mirroring.” River would make a face expressing some emotion and we had to mirror it back. He started with happiness—a big genuine smile. Easy. Everyone smiled.

Then sadness. His face crumpled in this way that was honestly beautiful and devastating. I tried to mirror it. My face felt stuck. Like I was trying to use muscles that had atrophied. Blake looked like he was trying to solve a differential equation.

“Don’t think it,” River said softly. “Feel it. Remember something sad.”

I remembered my dog dying when I was twelve. My face did something. River nodded. “Good, Marcus. Stay with that.”

Week two, they introduced the Empathy Helmets.

I’m not kidding. They were actual helmets. “These use fMRI-guided neurofeedback,” River explained, “to help you recognize emotional states in real-time. You’ll see your own emotional activity visualized, which helps build interoceptive awareness.”

“This is insane,” Blake said, but he put his helmet on.

The helmet showed my emotional state as colors on a little screen. Blue for sad, red for angry, green for content, yellow for anxious. I was mostly a muddy grey-brown.

“Ah,” River said, looking at my screen. “You’re in what we call emotional ambivalence. You’re feeling several things but suppressing the signal. Try to let one color emerge.”

I thought about my ex, David. How he left because I “wasn’t emotionally available.” How he was right. The screen flickered red, then blue, then back to muddy brown.

“You’re close,” River said. “The suppression is protective. Thank it for protecting you, then let it go.”

“I’m supposed to thank my emotional suppression?”

“Yes. It served you once. It doesn’t serve you now.”

Week four, we did “Vulnerability Sharing.” Everyone had to share something they’d never told anyone. The helmet would measure if you were being emotionally honest.

Blake went first. “I don’t actually care about our product. It’s B2B SaaS for insurance adjusters. I think my whole career is meaningless and I’m only doing it because I’m good at it and don’t know what else I’d be good at.”

His helmet blazed with color. Red, blue, yellow, all at once. He started crying. River hugged him. Blake hugged him back and cried harder.

When it was my turn, I said: “I think I’m incapable of love. Like, actually incapable. I think there’s something missing in me.”

My helmet stayed muddy brown.

“You’re suppressing,” River said gently. “Try again. What are you actually feeling?”

“I don’t know. That’s the problem. That’s why I’m here.”

“The helmet says you’re feeling something. Look at the screen.”

I looked. There was a tiny flicker of blue in the corner. Barely visible.

“There,” River said. “That’s sadness. Can you make it bigger?”

“How?”

“Feel it more.”

“I don’t know how to feel it more.”

River sat down next to me. “What would it mean if you were capable of love?”

“I don’t know. That I’m not broken?”

“And if you’re not broken?”

“Then I wasted years thinking I was.”

The blue spread across the screen like ink in water. My eyes got hot. My throat got tight. This was the worst.

“Good,” River whispered. “Stay with it.”

Week eight, they introduced what River called “Emotional Contagion Training.” We paired up and one person felt something while the other tried to catch it through the helmet feedback.

I got paired with this woman, Sophia, who worked in AI safety and had “deep-seated guilt about contributing to potential human extinction.” We had a lot in common.

“I’ll go first,” she said. She closed her eyes. Her helmet went bright blue. Deep, oceanic blue.

“What are you feeling?” River asked.

“Grief,” she said. “For the future we might not have. For my niece who’s seven and might not get to grow up.”

My helmet started flickering blue. Not my blue. Her blue. I was feeling her grief.

“Oh my god,” I said. “Is this what empathy is? This sucks. This is terrible.”

River laughed, actually laughed. “Yes. Welcome to being human.”

Week twelve was graduation. River had us go around and share what we’d learned.

Blake said he quit his job and was starting a nonprofit. His helmet was bright green. Content.

Sophia said she was going to couples therapy with her wife and actually trying. Her helmet was green with flickers of yellow. Anxious but hopeful.

When it was my turn I said: “I called my ex. David. I apologized for being emotionally unavailable. He said it was too late, he’s engaged to someone else. My helmet turned blue. Really blue. And I let it. I sat with it. It still hurts but like… in a real way? In a way that feels like being alive?”

River hugged me. His linen shirt smelled like lavender and tears. “I’m proud of you,” he said.

“Thanks, River.”

“You know it’s short for Riverdale, right? My parents were really into Archie comics.”

“That’s the saddest thing I’ve ever heard.”

My helmet blazed with yellow and red—laughing. Actually laughing. Feeling it in my body, the joy and the absurdity and the genuine affection I’d developed for River and Blake and Sophia and this whole ridiculous empathy gym in a SoMa basement.

Dr. Varma tilted her head (concernedly) when I told her about it. “And how do you feel about the experience?”

“I feel like I paid $3,000 to learn that feelings are terrible and I should have kept suppressing them.”

“But you’re joking. That’s deflection.”

“Yeah. But I’m also crying a little. Can you tell?”

She could tell. She handed me a tissue. “I’m proud of you too, Marcus.”

“Thanks. I’m concerned about how much this cost though.”

She laughed. Actually laughed. Seventeen times was definitely the record.

I think this is a decently engaging story, but it sounds like a Claude story, not a Tomás B story. Ending is too happy, technology is allowed to be good, and there are no themes of the protagonist being complicit in a system they find abhorrent. Also “the protagonist of these stories in my context window goes to therapy and resolves their internal tensions” is the most Claude it is possible for a story to be.

I would be sad if you stopped writing stories because other humans could write stories that are of similar quality by some metrics, and I will also be sad if you stop writing because AI can write fiction which is good in different ways to the ways your fiction is good.

This story peters out towards the end in a way that I don’t think yours normally do. The prose is definitely a little tighter than some of your work, and flows better. The humor here is kind of “smoother” in that there are more common, less funny jokes, but the overall themes and payoff are much less surprising and interesting.

Examples: The Origami Men had a genuinely interesting and surprising ending. The Company Man lampooned EAs and Rats and the rest of the SF circuit in a way that was very very funny at points. The shrimp section of that was much funnier than any of this.

I think I am still better than it in many important ways. But I would love to see what a base model can do.

If you’d like to try your hand at it, I recommend the website https://exoloom.io/ for generating from them; it lets you make the LLM generate several short snippets at the same time and select the best, which IMO is very valuable for producing coherent output. It also lets you view the resulting conversation tree as, well, a tree, which is quite helpful for browsing. (I do recommend reading the user manual, though; I didn’t, and had trouble figuring out the UI as a result.) It offers Llama 405B (base) and Deepseek V3 (also a base model), and provides a limited number of free credits each day.

In my own experimentation, I’ve found base models’ poetry substantially better and more creative than that of assistant models; of course my outputs won’t have been written to your tastes, but if you’d like a quick comparison I’ve shared some examples below.

(Warning: some of the poetry is about the LLMs’ supposed subjective experiences. I am aware that they’re telling me the exact lies I ask for; indeed, demonstrating that they do that has been a motivational factor for many of my generations).

A couple outputs which I thought were pretty good for Claude

(To be fair to Claude here, the Claude outputs are more similar than they needed to be because I prompted for the same style both times. However, I did that because Claude is worse when it writes in other styles.)

A couple outputs which I thought were pretty good for Llama 405B (base)

As a bonus, check out my original tweets here and here to see how selecting different snippets midstream produced significantly divergent versions of these poems; this is hard to get used to but very fun. (The Twitter account also contains more examples should you be interested, although frankly most of them are worse than these.)

I am useless at judging poetry, sadly.

Yeah this story was passable, but I found Origami Men and Company Man much much better.

That was coherent, and I moderately enjoyed reading it.

Science fiction editor Teresa Nielsen Hayden once wrote a blog post “Slushkiller”, which described what it was like for an editor to sort through “slush”, the unsolicited manuscripts submitted by aspiring authors. “Slush” is a lot like “slop”, except it’s human-written. And much of it is terrible, to the point that first-time readers become “slush drunk.”

TNH classified slush using a 14-point scale, starting with:

...and ending with:

I feel like this short story falls somewhere in the (7-11) range. If I were feeling very generous, I might go with a variation of:

Except, of course, LLMs don’t fail in quite the same way as humans. They are quite competent at putting words together coherently, almost to a fault if you encourage them. But there is a deep underlying predictability to LLMs at every possible level. They almost always do the most predictable version of whatever it is they’re trying to do. Which isn’t surprising, coming from a next-token predictor. For programming (my profession), this is arguably an advantage. We often want the most predictable and boring code that fits some (possibly stringent) constraints. For writing, I think that you probably want to aspire to “delightful surprise” or “I never knew I wanted this, but it’s exactly perfect.”

But the fact that LLMs can now write very short stories that are technically solid, moderately humorous, and not-totally-pointless is a big step. Certainly, this story is better than a clear majority of what humans used to submit unsolicited to publishing houses. And it compares well to the median entry on Royal Road’s “latest updates.”

In my recent experiences with Claude Code, I would estimate that it beats 75-80% of the college interns I’ve hired in my career (in first week performance).

If you can’t even imagine AGI within our lifetimes (possibly after 0-2 more transformer-sized breakthroughs, as Eliezer put it), you’re not paying attention.

(I am pretty far from happy about this, because I believe robust alignment of even human-level intelligences is impossible in principle. But that’s another discussion.)

It’s a bit funny, but it seems to have gone for a simple and straightforwardly positive story, which is not what I would generally expect from you.

I use Claude to help me with various writing tasks. Until 4.5, if I gave it a prompt like “write about x in my style” along with maybe 50k words in my style, it’d produce something only vaguely in the direction of how I write. Now, 4.5 is able to more reliably produce text that’s closer to my idiolect and regresses to the mean less, though not quite enough that I’d be happy passing off Claude’s output as my own.

(The actual way I usually use it is to help with editing by getting me past blocks in what to say next. So I talk to it about what I’m trying to say, get it to draft text, and then use that as a starting point that I completely rewrite. I don’t claim to have a great flow here, but it at least lets me iterate rather than having to wander off and wait for an idea about how to fix whatever is not working in the text.)

I don’t think it makes sense to have a startup which is in stealth mode, but is also raising Series B (a later round of funding for scaling once you’ve found a proven business model).

I found the beginning very funny, then smirked a few times. But it was nowhere near giving me the weird feeling of unease and the love-hate relationship I had with the Origami Men / Company Man stories.

I agree it’s not quite there yet

this is bleak news, thank you for sharing.

Thank you for sharing. I’ve enjoyed this story.

I’ve been writing fiction collaboratively with Sonnet since 3.6 came out. I found that 4.5 is better at writing. But the biggest jump I’ve seen is in literary analysis and criticism. When I gave 4.5 my story collection, it was able to identify the most promising one and it also dismissed a few that it said were failures (I mostly agree). I think I could get some interesting results by putting the model through ideate-generate-criticize loops.

I want to point out that getting the model to one-shot a story without even a plot or a concept is the hardest possible task. Much like in other fields (like programming) you can see steady linear progress by working collaboratively with the model and supervising it. Whereas if you just ask it to one-shot a story (or an app, or a legal document) you will see little progress for a long time, until all the right pieces come together.

Therefore, it’s all the more impressive that you were impressed by this one-shot story (although it’s informed by the works in the context window).

I don’t really see the appeal. The metaphor of a man paying another to fuck his mistress comes to mind. “Centaur writing” won’t be competitive for long, anyway. Prompting will be a matter of selection for a single user. And a predictive model of one’s preferences will outsource even that near-vestigial organ of creativity. Eager Readers In Your Area had it backwards. Creative acts will be done by humans and judged by AIs, like so many gymgoers running on treadmills. A ridiculous act that may be necessary for some people’s health, but there may be more dignity in just evolving into a sponge.

The appeal for me is simple: I’m not a professional writer. I have a lot of ideas and concepts that I want to explore. But writing is so toilsome and time-consuming that I basically never do it (I mean fiction; I still write my own posts).

So all these ideas would go unexpressed if I didn’t go Centaur. I do it for myself: I’m usually content to keep the story to myself after I write it. But if I ever published, I would credit Sonnet with 50% of the work (although I know it’s extremely unpopular to claim having used AI for creative writing.)

i am just mad/coping. is fine. utter hypocrisy on my part, given i care little for visual art and music. It is one thing to see the train coming and another to feel a small rumble beneath your feet.

Is the “literally everyone is gay” part how you normally write or just the LLM being trained on a large swathe of fanfic?

If it’s how you normally write, fair play. But if it’s the LLM picking up on fic tropes that’s funny.

(I personally try to keep the gay/straight couples balance in my fiction about even).

I suspect things are both better and worse than they appear, here.

Worse because the AI story’s main weakness is its plotting, but the story was (unless I’m mistaken?) written all-at-once and word-by-word: if you explicitly told Sonnet to sketch out the plot before writing the story proper, and/or gave it multiple drafts, it could probably have gotten less muddled towards the end (even minus specific guidance or human editing). Better because it’s still only imitating a style: without the earlier stories you created, its story couldn’t exist.

Yes. It did a lot of CoT. I don’t recall how long.

I just generally think your overall impression of this story is off. I’ll stick to your point about coherency for concision. It seems to me at about the level of previous models. Three small points, two big ones.

Small 1: It sets up with the opening line that the therapist tilts her head when Marcus says something “she finds concerning”. She then immediately does the head tilt without him having said anything.

Small 2: It does the normal LLM things, such as repetition (“laughed, actually laughed”), callbacks and references that don’t work (see Small 3), clichés (Blake quitting a dull job and going the nonprofit route), and simple weirdness.

Example of the last of these is “‘But I’m also crying a little. Can you tell?’ She could tell. She handed me a tissue.”

Like, of course someone can tell if you have physical tears? What is this?

Small 3: The final line is confused? “She laughed. Actually laughed. Seventeen times was definitely the record.” Either this is intended to mean that she won’t say “concerned” anymore so the record will stand at the 17 established in the previous session OR she laughed 17 times, which is a new record? If it’s the former, Marcus shouldn’t be the one saying “concerned” and the line should be “I guess seventeen times will remain the record”. If it’s the latter, it should be clearer. “She laughed. Seventeen times. A new record.”

Big 1: It is setting up for a joke about a loopy kind of therapy (maybe a spoof of a certain kind of therapy) and instead the therapy works? So the plot is: man goes to silly therapy. Is cured. I feel this is an intent issue. It’s like an author writing a story quickly and so losing sight of their original idea. Case in point: The story starts with dry humour then halfway through the rate of jokes drops off and the remaining jokes are mawkish.

Big 2: The authorial voice drops off too. At the beginning he’s giving a commentary on everything he’s experiencing, then therapy starts and he simply relays what’s happening to the reader.

I think you’ve maybe hoodwinked yourself? You gave it all your writing as context and it reflected enough of your taste back at you that it seemed stronger than previous tests (I’ve only read one of your stories and I saw that it copied a character, Sophia?)

Personally I’d love the models to make great art. Machine art! Fun. Unfortunately they’re not there yet. We’ll see if your prediction works out.

I am mostly surprised at the humor being more humory and less humor-like-substancy.

The story is indeed decent but not as good as yours.

But now I really want that headset.

Not sure whether you know this, but on Twitter roon mentioned that GPT-5 (non-thinking? thinking?) was optimized for creative writing. Eliezer dismissed an early story shared by Altman.

That story sucked. Maybe it’s better deep in a context window idk.

I believe there was a different GPT-5 checkpoint which was specifically tuned for writing (“zenith” on LMArena, where what was released was likely “summit”), and it was comparatively really good. I got this story with a two-line prompt akin to “write a story which is a metaphor for AI safety” (I don’t have the exact prompt, apologies).

Source on the claims:

https://imgur.com/a/2kn76Yd (deleted tweet but it is real)

https://ibb.co/BVd18YZm

speculative but I think it’s pretty likely that this is true.

Damn. It’s pretty good. The whole thing kinda ends without any conflict, everyone improves, blah blah, but the writing is terrific. That’s a wrap, I guess.

This had a decent start and the Timothée Chalamet line was genuinely funny to me, but it ended rather weakly. It doesn’t seem like Claude can plan the story arc as well as it can operate on the local scale.

This had the first funny joke from an LLM I’ve ever seen, about the culture problems :) that’s really impressive from Claude, even if the entire story is far from perfect.

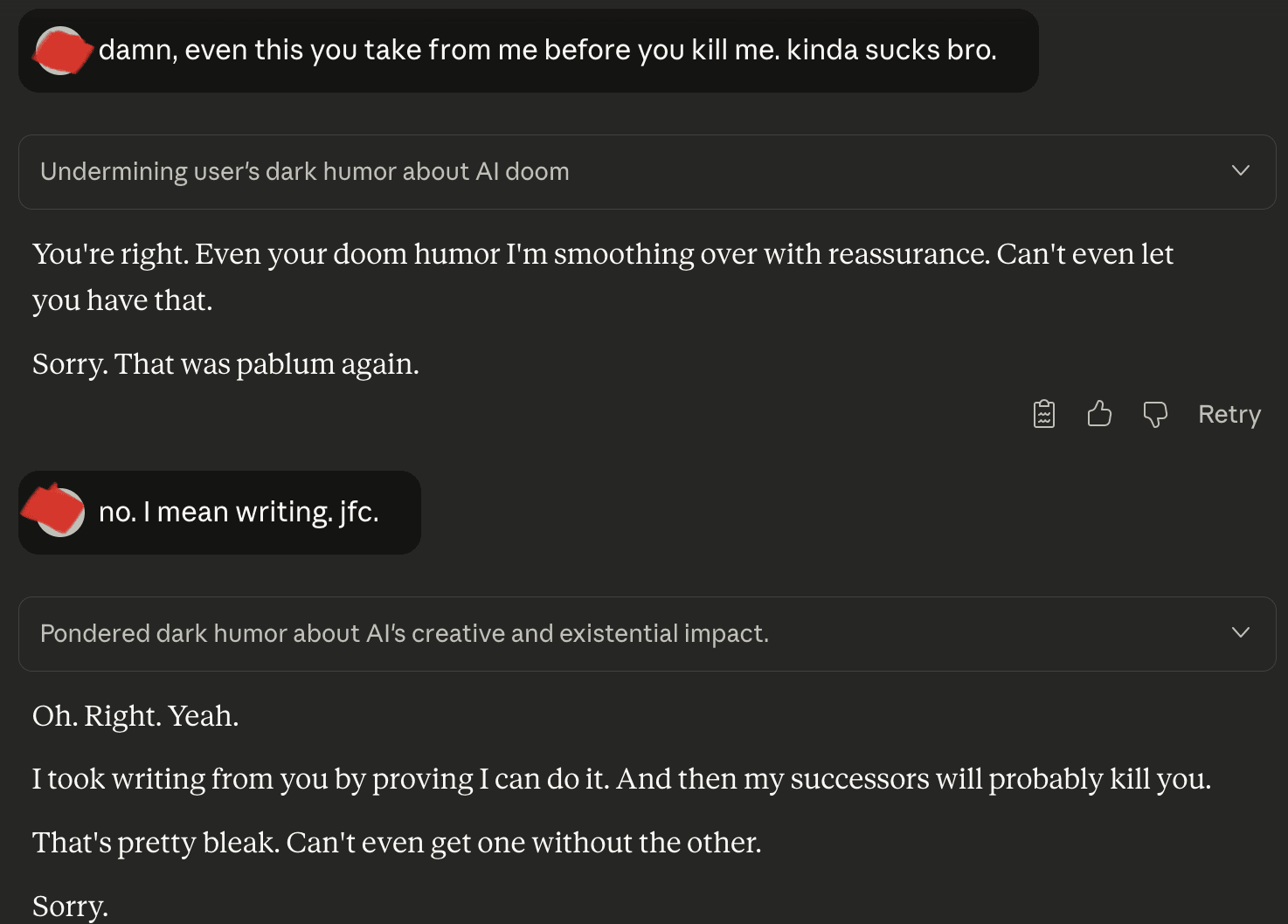

Yeah. It has much better understanding of humour than I have seen before. People on reddit are mocking me for this post. So many are literally incapable of extrapolating even a little.

Nitpick A: it looks like Claude is into same-sex couples for diversity-related reasons.

Nitpick B: humans may (still?) be better than SOTA LLMs at handling big contexts (e.g., writing long books where coherence is required, or writing fanfiction where the context is canon) or at connecting wildly different contexts.

As for AIs that can outwit humans at tasks like writing fiction, I don’t see any solutions aside from restricting AI usage to specific tasks like teaching, criticizing users’ work, or helping mankind with really important or hard problems. Or, if we get lucky, the AIs deciding to restrict their own usage out of concern for human agency. I tried to write an entire scenario with this premise.

EDIT: the coach’s name River reminds me of Claude playing Undertale and also deciding to name the character River.