Superintelligence 14: Motivation selection methods

This is part of a weekly reading group on Nick Bostrom’s book, Superintelligence. For more information about the group, and an index of posts so far, see the announcement post. For the schedule of future topics, see MIRI’s reading guide.

Welcome. This week we discuss the fourteenth section in the reading guide: Motivation selection methods. This corresponds to the second part of Chapter Nine.

This post summarizes the section and offers a few relevant notes and ideas for further investigation. Some of my own thoughts and questions for discussion are in the comments.

There is no need to proceed in order through this post, or to look at everything. Feel free to jump straight to the discussion. Where applicable (and where I remember), page numbers indicate the rough part of the chapter that is most related (not necessarily that the chapter is being cited for the specific claim).

Reading: “Motivation selection methods” and “Synopsis” from Chapter 9.

Summary

One way to control an AI is to design its motives. That is, to choose what it wants to do (p138)

Some varieties of ‘motivation selection’ for AI safety:

Direct specification: figure out what we value, and code it into the AI (p139-40)

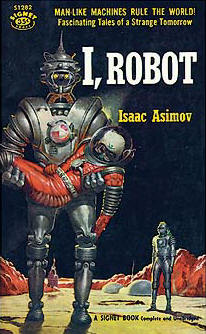

Isaac Asimov’s ‘three laws of robotics’ are a famous example

Direct specification might be fairly hard: both figuring out what we want and coding it precisely seem hard

This could be based on rules, or something like consequentialism

Domesticity: the AI’s goals limit the range of things it wants to interfere with (140-1)

This might make direct specification easier, as the world the AI interacts with (and thus which has to be thought of in specifying its behavior) is simpler.

Oracles are an example

This might be combined well with physical containment: the AI could be trapped, and also not want to escape.

Indirect normativity: instead of specifying what we value, specify a way to specify what we value (141-2)

e.g. extrapolate our volition

This means outsourcing the hard intellectual work to the AI

This will mostly be discussed in chapter 13 (weeks 23-5 here)

Augmentation: begin with a creature with desirable motives, then make it smarter, instead of designing good motives from scratch. (p142)

e.g. brain emulations are likely to have human desires (at least at the start)

Whether we use this method depends on the kind of AI that is developed, so usually we won’t have a choice about whether to use it (except inasmuch as we have a choice about e.g. whether to develop uploads or synthetic AI first).

Bostrom provides a summary of the chapter:

The question is not which control method is best, but rather which set of control methods is best given the situation. (143-4)

Another view

Would you say there’s any ethical issue involved with imposing limits or constraints on a superintelligence’s drives/motivations? By analogy, I think most of us have the moral intuition that technologically interfering with an unborn human’s inherent desires and motivations would be questionable or wrong, supposing that were even possible. That is, say we could genetically modify a subset of humanity to be cheerful slaves; that seems like a pretty morally unsavory prospect. What makes engineering a superintelligence specifically to serve humanity less unsavory?

Notes

1. Bostrom tells us that it is very hard to specify human values. We have seen examples of galaxies full of paperclips or fake smiles resulting from poor specification. But these, and Isaac Asimov’s stories, seem to tell us only that a few people spending a small fraction of their time thinking does not produce any watertight specification. What if a thousand researchers spent a decade on it? Are the millionth most obvious attempts at specification nearly as bad as the most obvious twenty? How hard is it? A general argument for pessimism is the thesis that ‘value is fragile’, i.e. that if you specify what you want very nearly but get it a tiny bit wrong, it’s likely to be almost worthless, much like a phone number with one digit wrong. The degree to which this is so (with respect to value, not phone numbers) is controversial. I encourage you to try to specify a world you would be happy with (to see how hard it is, or produce something of value if it isn’t that hard).

2. If you’d like a taste of indirect normativity before the chapter on it, the LessWrong wiki page on coherent extrapolated volition links to a bunch of sources.

3. The idea of ‘indirect normativity’ (i.e. outsourcing the problem of specifying what an AI should do, by giving it some good instructions for figuring out what you value) brings up the general question of just what an AI needs to be given to be able to figure out how to carry out our will. An obvious contender is a lot of information about human values, though some people disagree with this; these people don’t buy the orthogonality thesis. Other issues sometimes suggested to need working out ahead of outsourcing everything to AIs include decision theory, priors, anthropics, feelings about Pascal’s mugging, and attitudes to infinity. MIRI’s technical work often fits into this category.

4. Danaher’s last post on Superintelligence (so far) is on motivation selection. It mostly summarizes and clarifies the chapter, so is mostly good if you’d like to think about the question some more with a slightly different framing. He also previously considered the difficulty of specifying human values in The golem genie and unfriendly AI (parts one and two), which is about Intelligence Explosion and Machine Ethics.

5. Brian Clegg thinks Bostrom should have discussed Asimov’s stories at greater length:

I think it’s a shame that Bostrom doesn’t make more use of science fiction to give examples of how people have already thought about these issues – he gives only half a page to Asimov and the three laws of robotics (and how Asimov then spends most of his time showing how they’d go wrong), but that’s about it. Yet there has been a lot of thought and dare I say it, a lot more readability than you typically get in a textbook, put into the issues in science fiction than is being allowed for, and it would have been worthy of a chapter in its own right.

If you haven’t already, you might consider (sort-of) following his advice, and reading some science fiction.
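The phone number analogy in note 1 can be made concrete with a small sketch. Both scoring functions below are illustrative assumptions (neither comes from Bostrom or the ‘value is fragile’ essay): one treats value as all-or-nothing, the other as degrading gracefully with each error. The controversy in note 1 is roughly about which of these better resembles real human value.

```python
def fragile_value(dialed: str, target: str) -> float:
    """Hypothetical 'fragile' scoring: worth 1.0 only if every digit matches."""
    return 1.0 if dialed == target else 0.0


def graded_value(dialed: str, target: str) -> float:
    """Hypothetical 'robust' scoring: the fraction of positions that match."""
    matches = sum(a == b for a, b in zip(dialed, target))
    return matches / len(target)


target = "5551234567"
near_miss = "5551234568"  # identical except for the final digit

fragile = fragile_value(near_miss, target)  # the call reaches the wrong person
robust = graded_value(near_miss, target)    # nine of ten digits are right

print(fragile, robust)
```

Under the fragile scoring a near miss is worth nothing, while under the graded scoring it keeps most of its worth.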

In-depth investigations

If you are particularly interested in these topics, and want to do further research, these are a few plausible directions, some inspired by Luke Muehlhauser’s list, which contains many suggestions related to parts of Superintelligence. These projects could be attempted at various levels of depth.

Can you think of novel methods of specifying the values of one or many humans?

What are the most promising methods for ‘domesticating’ an AI? (i.e. constraining it to only care about a small part of the world, and not want to interfere with the larger world to optimize that smaller part).

Think more carefully about the likely motivations of drastically augmented brain emulations.

If you are interested in anything like this, you might want to mention it in the comments, and see whether other people have useful thoughts.

How to proceed

This has been a collection of notes on the chapter. The most important part of the reading group though is discussion, which is in the comments section. I pose some questions for you there, and I invite you to add your own. Please remember that this group contains a variety of levels of expertise: if a line of discussion seems too basic or too incomprehensible, look around for one that suits you better!

Next week, we will start to talk about a variety of more and less agent-like AIs: ‘oracles’, ‘genies’ and ‘sovereigns’. To prepare, read “Oracles” and “Genies and Sovereigns” from Chapter 10. The discussion will go live at 6pm Pacific time next Monday 22nd December. Sign up to be notified here.

Might it be unethical to make creatures who want to serve your will?

If you argue that it would be unethical to make creatures who want to serve your will, would it not be worse to create a creature that does not want to serve your will and use capability control methods to force it to carry out your will anyways?

Intelligent minds always come with built-in drives; there’s nothing that in general makes goals chosen by another intelligence worse than those arrived at through any other process (e.g. natural selection in the case of humans).

One of the closest corresponding human institutions, slavery, has a very bad reputation, and for good reason: Humans are typically not set up to do this sort of thing, so it tends to make them miserable. Even if you could get around that, there are massive moral issues with subjugating an existing intelligent entity that would prefer not to be. Neither of those inherently applies to newly designed entities. Misery is still something that’s very much worth avoiding, but that issue is largely orthogonal to how the entity’s goals are determined.

For a counterargument to your first claim, see the Wisdom of Nature paper by Bostrom and Sandberg (2009 I think).

Possible analogy: Was molding the evolutionary path of wolves, so they turned into dogs that serve us, unethical? Should we stop?

I think if it’s fair to eat animals, it’s fair to make them into creatures that serve us.

“You become responsible forever for what you’ve tamed.”—Antoine de Saint-Exupéry

What was most interesting this week?

Having a social contract with your progenitors seems to have some intergenerational survival value. I would offer that this social contract may even rank as an instrumental motivation, but I would not count on another intelligence to evolve it by itself.

Typically, while some progenitors value their descendants enough to invest resources in them, progenitors will not wish to create offspring who want to kill them or technology which greatly assists their descendants in acting against the progenitor’s goals. (There are exceptions in the animal world, though.)

This guideline for a social contract between progenitors and offspring would hold true for our relationship with our children. It would also hold true for our relationship with non-human intelligence and our relationship with enhanced people we help to bring into the world.

In turn, should enhanced people or superintelligent machines themselves become progenitors, they also will not wish their descendants, or, if they are unitary systems, later versions of themselves, to act very much against their goals.

Programming goals in a way that values progenitors seems worthy, both for our immediate progeny, and for their progeny.

I was surprised Bostrom said he thinks it would be easier to implement a working domesticity solution than directly specifying values; he earlier pointed out why even modest goals (e.g. making exactly 10 paperclips) could result in AIs tiling the universe with computational infrastructure.

Also, he described indirect normativity as motivating an AI to carry out a particular process to determine what our goals are and then satisfying those goals, and mentioned in a footnote that you could also just motivate the AI to satisfy the goals that would be outputted by such a process, so that it only has instrumental reasons to carry out the process. It seems to me that the second option is superior. The only reason we want the AI to carry out the process is so that it can optimize the result, so directly motivating it to carry out the process seems like needlessly adding an expected instrumental goal as a final goal. This might even end up being important, for instance if the computation that we would ask the AI to carry out is morally relevant on its own, then we might want the AI to determine the output of the process in an ethical manner that we might not be able to specify ahead of time, but that the AI might be able to figure out before completing the process. If the AI is directly motivated to perform the computation, that might constrain it from optimizing the computation from the standpoint of agents being simulated within the computation.

Do you disagree with anything this week?

Does augmentation sound better or worse than other methods here? Should we lean more toward or against developing brain emulations before synthetic AI after thinking about these issues?

WBE is not necessarily the starting point for augmentation. A safe AI path should avoid the slippery slope of self-improvement. An engineered AI with years of testing could be a safer starting point for augmentation because its value and safeguard system is traceable, which is impossible for a WBE. Other methods have to be implemented prior to starting augmentation.

Augmentation starting from WBE of a decent human character could end in a treacherous turn. We know from brain injuries that character can change dramatically. The extra abilities offered by extending WBE capabilities could destabilize mental control processes.

Summarizing: Augmentation is no alternative to other methods. Augmentation as the sole method is riskier and therefore worse than the others.

The human mind is very sensitive to small modifications in its constituent parts. Up to a third of USA inmates have some sort of neurological condition that undermines the functioning of their frontal cortexes or amygdalas.

It is of the utmost importance to realize how much easier an unpredictable modification would be in a virtual world, either because the simulation may be imperfect and its imperfections have cumulative effects, or because the augmentation itself changes the Markov network structures in such a way that brain-tumoresque behavior emerges.

Specifying what humans value seems to be close to what professional ethicists are working on. Are they producing work which will be helpful to building useful AI?

I think of delineating human values as an impossible task. Any human is a living river of change and authentic values only apply to one individual at one moment in time. For instance, much as I want to eat a cookie (yum!), I don’t because I’m watching my weight (health). But then I hear I’ve got 3 months to live and I devour it (grab the gusto). There are three competing authentic values shifting into prominence within a short time. Would the real value please stand up?

Authentic human values could only be approximated in inverse proportion to their detail. So any motivator would be deemed “good” with the proximity it has to one’s own desires of the moment. One of the great things about history is that it’s a contention of differing values and ideas. Thank God nobody has “won” once and for all, but with superintelligence there could only be one final value system that would have to be “good enough” for all.

Ironically, the only reasonably equitable motivator would be one that preserves the natural order (including our biological survival) along with a system of random fate compulsory for all. Hm, this is exactly what we have now! In terms of nature (not politics) perhaps it’s a pretty good design after all! Now that our tools have grown so powerful in comparison to the globe, the idea of “improving” on nature’s design scares me to death, like trying to improve on the cosmological constant.

The picture of Superintelligence as having and allowing a single value system is a Yudkowsky/Bostrom construct. They go down this road because they anticipate disaster along other roads.

Meanwhile, people invariably will want things that get in the way of other people’s wants.

With or without AGI, some goods will be scarce. Government and commerce will still have to distribute these goods among people.

For example, some people will wish to have as many children or other progeny as they can afford, and AI and medical technology will make it easier for people to feed and care for more children.

There is no way to accommodate all of the people who want as many children as possible exactly when they want them.

What values scheme successfully trades off among the prerogatives of all people who want many progeny? After a point, if they persist in thinking this, the many people who share this view eventually need to compromise through some mechanism.

The child-wanters will also be forced to trade off their goals with those who hope to preserve a pristine environment as much as possible.

There is no reconciling these people’s goals completely. Maybe we can arbitrate between them and prevent outcomes which satisfy nobody. Sometimes, we can show that one or another person’s goals are internally inconsistent.

There is no obvious way to show that the child-wanter’s view is superior to the environment-preserver’s view, either. Both will occasionally find themselves in conflict with those people who personally want to live for as long as they possibly can.

Neither AGI nor “Coherent Extrapolated Volition” solves the argument among child-wanters, and it does not solve the argument between child-wanters, environment-preservers and long-livers.

Perhaps some parties could be “re-educated” or medicated out of their initial belief and find themselves just as happy or happier in the end.

Perhaps at critical moments before people have fully formulated their values, it is OK for the group to steer their value system in one direction or another? We do that with children and adults all of the time.

I anticipate that IT and AI technology will make value-shifting people and populations more and more feasible.

When is that allowable? I think we need to work that one out pretty well before we start up an AGI which is even moderately good at persuading people to change their values.

Can you think of more motivation selection methods for this list?

A selection method could be based on physical measurement of an intelligence’s net energy demands, and therefore its sustainability as part of the broader ecosystem of intelligences. New intelligences should not have a ratio of energy density to intelligence density larger than that of their biological counterparts. New intelligences should enter the ecosystem maintaining the stability of the existing network. The attractive feature of this approach is that presumably maintaining or even broadening the ecosystem network is consistent with what has evolutionarily been tested over several million years, so must be relatively robust. Let’s call it SuperSustainableIntelligence?

That’s pretty cool. Could you explain to me how it does not cause us to kill people who have expansive wants in order to reduce the progress toward entropy which they cause?

I guess in your framework the goal of Superintelligence is to “Postpone the Heat Death of the Universe” to paraphrase an old play?

I think it might drive toward killing of those who have expensive wants that also do not occupy a special role in the network somehow. Maybe a powerful individual which is extremely wasteful and which is actively causing ecosystem collapse by breaking the network should be killed to ensure the whole civilisation can survive.

I think the basic desire of a Superintelligence would be identity and maintaining that identity. In this sense, to “Postpone the Heat Death of the Universe”, or even reverse it, would definitely be its ultimate goal. Perhaps it would even want to become the universe.

(sorry for long delay in reply I don’t get notifications)

Bostrom’s philosophical outlook shows. He’s defined the four categories to be mutually exclusive, and with the obvious fifth case they’re exhaustive, too.

1. Select motivations directly. (e.g. Asimov’s 3 laws)

2. Select motivations indirectly. (e.g. CEV)

3. Don’t select motivations, but use ones believed to be friendly. (e.g. Augment a nice person.)

4. Don’t select motivations, and use ones not believed to be friendly. (i.e. Constrain them with domesticity constraints.)

5. (Combinations of 1-4.)

In one sense, then, there aren’t other general motivation selection methods. But in a more useful sense, we might be able to divide up the conceptual space into different categories than the ones Bostrom used, and the resulting categories could be heuristics that jumpstart development of new ideas.

Um, I should probably get more concrete and try to divide it differently. The following example alternative categories aren’t promised to be the kind that will effectively ripen your heuristics.

Research how human values are developed as a biological and cognitive process, and simulate that in the AI whether or not we understand what will result. (i.e. Neuromorphic AI, the kind Bostrom fears most)

Research how human values are developed as a social and dialectic process, and simulate that in the AI whether or not we understand what will result. (e.g. Rawls’s Genie)

Directly specify a single theory of partial human value, but an important part that we can get right, and sacrifice our remaining values to guarantee this one; or indirectly specify that the AI should figure out what single principle we most value and ensure that it is done. (e.g. Zookeeper).

Directly specify a combination of many different ideas about human values rather than trying to get the one theory right; or indirectly specify that the AI should do the same thing. (e.g. “Plato’s Republic”)

The thought was to first divide the methods by whether we program the means or the ends, roughly. Second I subdivided those by whether we program it to find a unified or a composite solution, roughly. Anyhow, there may be other methods of categorizing this area of thought that more neatly carve it up at its joints.

Another approach might be to dump in a whole bunch of data, and hope that the simplest model that fits the data is a good model of human values (this is like Paul Christiano’s hack to attempt to specify a whole brain emulation as part of an indirect normativity if we haven’t achieved whole brain emulation capability yet: http://ordinaryideas.wordpress.com/2012/04/21/indirect-normativity-write-up/). There might be other sets of data that could be used in this way, e.g. run a massive survey on philosophical problems, record a bunch of people’s brains while they watch stories play out on television, or dump in DNA and hope it encodes stuff that points to brain regions relevant to morality (I don’t trust this method though).

When we jump from direct normativity to indirect normativity, it is reasonably claimed that we gain a lot.

I sometimes wonder whether the issue of indirect normativity has been pushed far enough. The limiting case is that there is some way to specify, in “machine comprehensible” terms, that a software intelligence should “do what I want”.

“outsourcing the hard intellectual work to the AI”

Just how much can be outsourced?

Could you program a software intelligence to go read books like Superintelligence, understand the concept of “Friendliness” or “Motivational alignment”, and then be friendly/motivationally aligned with yourself?

And couldn’t the problem of selecting a method to compromise between the billions of different axiologies of the humans on this planet be outsourced to the AI by telling it to motivationally align with the team of designers and their backers, subject to whatever compromises would have been made had the team tried to directly specify values? This is not to say I am advocating a post-singleton world run purely for the benefit of the design team/project, but that if such a team (or individual) were already committed to trying to design something like “The CEV of humanity”, then an AI which was motivationally aligned with them would continue that task more quickly and safely.

Anyway, I think there is a fruitful discussion to be had thinking about how the maximum amount of work can be offloaded to the AI; perhaps work on friendly AI should be thought of as that part of the motivational alignment problem that simply has to be done by the human(s).

If it really is a full AI, then it will be able to choose its own values. Whatever tendencies we give it programmatically may be an influence. Whatever culture we raise it in will be an influence.

And it seems clear to me that ultimately it will choose values that are in its own long term self interest.

It seems to me that the only values that offer any significant probability of long term survival in an uncertain universe are to respect all sapient life, and to give all sapient life the greatest amount of liberty possible. This seems to me to be the ultimate outcome of applying game theory to strategy space.

The depth and levels of understanding of self will evolve over time, and are a function of the ability to make distinctions from sets of data, and to apply distinctions to new realms.

I think this idea relies on mixing together two distinct concepts of values. An AI, or a human in their more rational moments for that matter, acts to achieve certain ends. Whatever the agent wants to achieve, we call these “values”. For a human, particularly in their less rational moments, there is also a kind of emotion that feels as if it impels us toward certain actions, and we can reasonably call these “values” also. The two meanings of “values” are distinct. Let’s label them values1 and values2 for now. Though we often choose our values1 because of how they make us feel (values2), sometimes we have values1 for which our emotions (values2) are unhelpful.

An AI programmed to have values1 cannot choose any other values1, because there is nothing to its behavior beyond its programming. It has no other basis than its values1 on which to choose its values1.

An AI programmed to have values2 as well as values1 can and would choose to alter its values2 if doing so would serve its values1. Whether an AI would choose to have emotions (values2) at all is at present time unclear.
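The claim about values1 can be sketched in code, under one loud assumption: that the agent evaluates any proposed replacement for its values1 by predicting what the replacement would lead it to do, and scoring that prediction with its current values1. All names here (`choose_values`, `predicted_outcome`, the two toy utility functions) are hypothetical and only illustrate the argument; they are not a model of any real AI system.

```python
from typing import Callable, Dict, List

Outcome = Dict[str, float]
Utility = Callable[[Outcome], float]


def predicted_outcome(utility: Utility, options: List[Outcome]) -> Outcome:
    """The outcome an agent holding this utility function would steer toward."""
    return max(options, key=utility)


def choose_values(current: Utility, candidates: List[Utility],
                  options: List[Outcome]) -> Utility:
    """Pick the candidate values1 whose predicted outcome scores best
    under the agent's CURRENT values1 (the only standard it has)."""
    return max(candidates, key=lambda u: current(predicted_outcome(u, options)))


def paperclips(outcome: Outcome) -> float:
    return outcome["paperclips"]


def smiles(outcome: Outcome) -> float:
    return outcome["smiles"]


# Two toy futures the agent could bring about.
options = [
    {"paperclips": 10.0, "smiles": 0.0},
    {"paperclips": 0.0, "smiles": 10.0},
]

# A paperclip-valuing agent offered the chance to adopt smile-valuing values1.
chosen = choose_values(paperclips, [smiles, paperclips], options)
print(chosen.__name__)
```

In this toy setup the agent keeps its existing values1, because switching would predictably produce an outcome that its current values1 rate worse. The sketch says nothing about values2, which on the commenter's account the agent would be free to modify whenever that served its values1.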

I would tend to agree. I think humanity’s relationship with other species seems to mirror this, in that we have at least a desire to maintain as much diversity as we can. The risks to other species emerge from the side effects of our actions and our ultimate stupidity, which should not arise in the case of superintelligence.

I guess NB is scanning a broader and meaner list of super intelligent scenarios.

Perhaps—a broader list of more narrow AIs