Why I’m Posting AI-Safety-Related Clips On TikTok

Adapted from a Manifund proposal I announced yesterday.

Over the past two weeks, I have been posting daily AI-Safety-related clips on TikTok and YouTube, reaching more than 1M people.

I’m doing this because I believe short-form AI Safety content is currently neglected: most outreach efforts target long-form YouTube viewers, missing younger generations who get information from TikTok.

With 150M active TikTok users in the UK & US, this audience represents massive untouched potential for our talent pipeline (e.g., Alice Blair, who recently dropped out of MIT to work at the Center for AI Safety as a Technical Writer, is the kind of person we’d want to reach).

On Manifund, people have been asking me what kinds of messages I wanted to broadcast and what outcomes I wanted to achieve with this. Here’s my answer:

My goal is to promote content that is fully or partly about AI Safety:

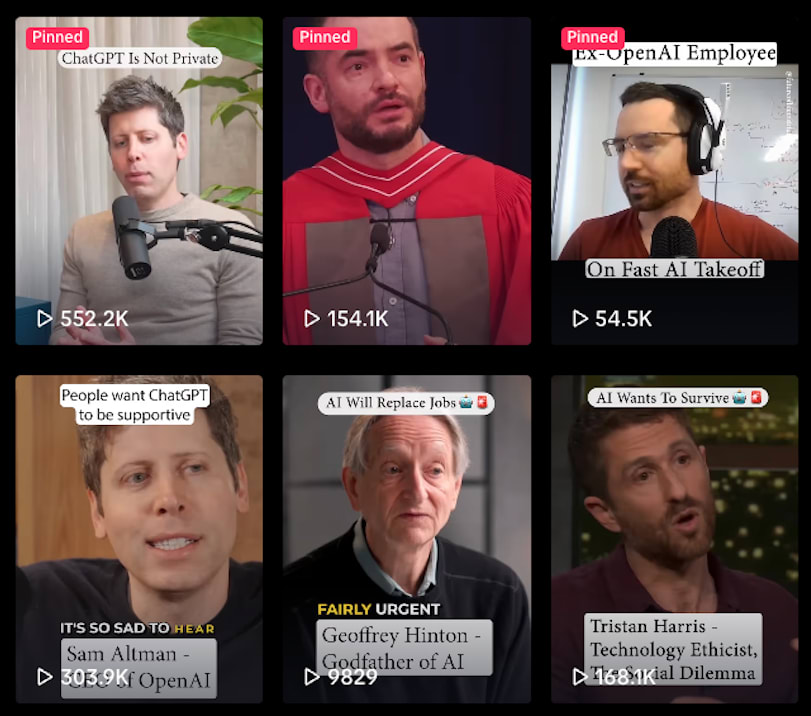

Fully AI Safety content: Tristan Harris (176k views) on Anthropic’s blackmail results summarizes recent AI Safety research in a way that is accessible to most people. Daniel Kokotajlo (55k views) on fast takeoff scenarios introduces the concept of automated AI R&D and related AI governance issues. These show that AI Safety content can get high reach if the delivery or editing is good enough.

Partly / Indirectly AI Safety content: Ilya Sutskever (156k views) on AI doing all human jobs, the need for honest superintelligence and AI being the biggest issue of our time. Sam Altman (400k views) on sycophancy. These help with general AI awareness that makes viewers receptive to safety messages moving forward.

“AI is a big deal” content: Sam Altman (600k views) on ChatGPT logs not being private in the case of a lawsuit. These videos aren’t directly about safety but establish that AI is becoming a major societal issue.

The overall strategy here is to prioritize posting fully safety-focused content that has the potential to have high reach, then go for the partly / indirectly safety content that walks people through why AI could be a risk, and sometimes post some content that is more generally about AI being a big deal, bringing even more people in.

And here is the accompanying diagram I made:

Although the diagram above makes it seem like calls to action and clicking on links are the “end goals”, I believe that “Progressive Exposure” is actually more important.

Progressive exposure: Most people who eventually worked in AI Safety needed multiple exposures from different sources before taking action. Even viewers who don’t click anywhere are getting those crucial early exposures that add up over time.

And I’ll go as far as to say that multiple exposures are actually needed in order to fully grok basic AI Risk arguments.

To give a personal example, the first time I wanted to learn about AI 2027, I listened to Dwarkesh’s interview of Daniel Kokotajlo & Scott Alexander to get a first intuition for it. I then read the full post while listening to the audio version, and was able to grasp many more details and nuances. A bit later, I watched Drew’s AI 2027 video, which made me feel the scenario through the animated timeline of events and visceral music. Finally, a month ago I watched 80k’s video, which made things even more concrete and visceral through the board game elements. And when I started cutting out clips from multiple Daniel Kokotajlo interviews, I internalized the core elements of the story even more (though I still miss a lot of the background research).

Essentially, what I’m trying to say is that as we’re trying to onboard more talent into doing useful AI Safety work, we probably don’t need to make them click on a single link that would lead them to take action or subscribe to some career coaching.

Instead, the algorithms will directly feed people more and more of that kind of content if they find it interesting, and they’ll end up finding the relevant resources if they’re sufficiently curious and motivated.

Curated websites like aisafety.com or fellowships are there to shorten the time it takes to transition from “learning about AI risk” to “doing relevant work”. And the goal of outreach is to accelerate people’s progressive exposure to AI Safety ideas.

Related to this is “The Sleeper Effect”: a person hears a piece of information and remembers both the info and its source. Over time, they forget the source but remember the original info. That info then becomes just another thing they believe. I think this adds weight to this part of your strategy.