I’ve been reading LessWrong for a while, and one thing doesn’t make much sense to me: the idea that it is possible to align an AGI/superintelligence at all. I understand that optimism about achieving AI alignment through technology, as opposed to other means, is probably not even the majority view in LW discussions, but I still think it skews the discussion.

I should humbly clarify that I don’t consider myself in the league of most LW posters, but I have very much enjoyed reading the forum nonetheless. The alignment question is a bit like the Collatz conjecture: seductive. Only the alignment question intuitively seems much harder than Collatz!

Alignment is a subject of intense ongoing debate on LW and other places, and of course the developers of the models, though they admit that it’s hard, are still optimistic about the possibility of alignment (I suppose they have to be).

But my intuition tells me something different. I think of superintelligence in terms of complexity: it has a greater ability to manage complexity than humans do, and to us it is very complex. In contrast, we are less complex to it, and it may find us basic and rather easy to model and predict with a good degree of confidence.

I think complexity is a useful concept to think about, and it has an interesting characteristic: over time, it tends to escape your attempts to manage it. This is why, as living organisms, we need to do continual maintenance work on ourselves to pump the entropy out of our bodies and minds.

Managing complexity takes ongoing effort, and the more complex the problem is, the more likely it is that your model of it will fall short sooner or later; in the case of a superintelligence, that is pretty much guaranteed. And this is going to be a problem for AI safety, which I expect is news to pretty much no one…

And this is just how life is. I haven’t seen an example in any other domain that would suggest we can align a superintelligence. If we could, maybe we could first try to align a politician? Nope, we haven’t managed that either, and the problem isn’t entirely dissimilar. What about aligning a foreign nation state? Nope. There are only two ways: either there is mutual benefit to alignment, or one party has a reason to behave well because the other has the circumstantial advantage.

I Googled “nature of complexity” just to see if there was anything that supported my intuition on the subject, and the first result is this page, which has a fitting quote:

“Complexity is the property of a real world system that is manifest in the inability of any one formalism being adequate to capture all its properties. It requires that we find distinctly different ways of interacting with systems. Distinctly different in the sense that when we make successful models, the formal systems needed to describe each distinct aspect are NOT derivable from each other.”

My layman’s understanding of what it is saying can be summed up thusly:

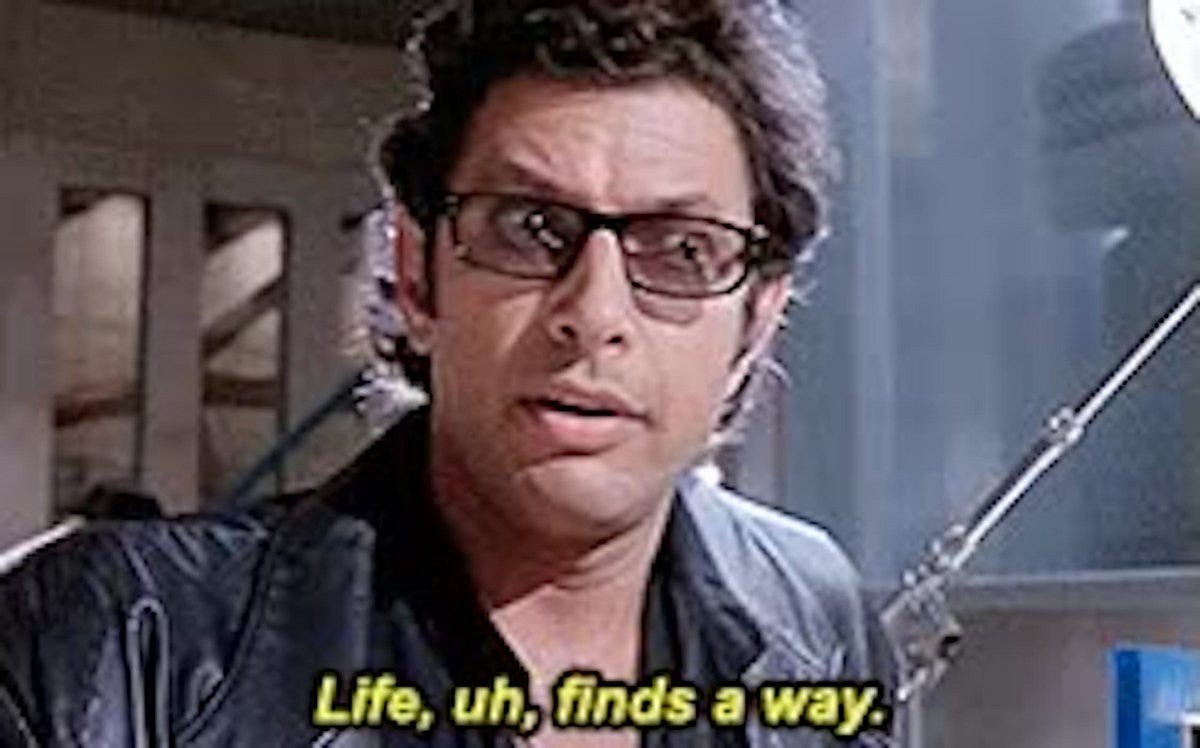

Complexity will find a way to escape your attempts to control it, via unforeseen circumstances that require you to augment your model with new information.

So, I can’t find a reason to believe that we can align an AI at all, except via a sufficient circumstantial advantage. There is no silver bullet here. So if it were up to me (it’s not), I’d:

Replace the terminology of “AI alignment” with something else, such as deconstructability or auditability, but always keep in mind that an AGI will win this game in the end, so don’t waste time talking about an “aligned AGI”.

Do the work of analyzing and mitigating escape risks, whether via nanotechnology spread in the atmosphere or other channels.

Develop a strategy, perhaps via a working group, to continually analyze the risks posed by distinct AI technologies and our level of preparedness to deal with them; it may be that an agentic AGI is simply an unacceptable risk, and that the only acceptable approaches are modular or auditable ones.

Try to find a strategy to socialize the AI, to make it actually care, if not about us, then at least about other AIs (as a first step). Create a system that somehow slows its progress. I don’t know, maybe look into entangling its parameters using quantum cryptography, or other kinds of distributed architectures for neural networks ¯\(°_o)/¯

Get people from all fields involved. AI researchers are obviously critical, but ideally there should be a conversation happening at every level of society, academia, etc. (Bonus points: make another movie about AGI, which could be far scarier than previous ones, since the disruption is starting to enter public consciousness.)

Maybe, just maybe, if we do all these things we can be ready in 10 years when that dark actor presses the button…

Edit: here are some better-formulated arguments that seem to support what I’m saying.

https://www.lesswrong.com/posts/AdGo5BRCzzsdDGM6H/contra-strong-coherence

I agree with ^: a general intelligence can realign itself. The point of agency is that it will define utility as whatever maximises its predictability / power over its own circumstances, not according to some pre-programming.

The phrase “aligned superintelligence” is pretty broad. At one end is the concept of a superintelligence that will do everything we want and nothing we don’t, for some vague inclusive sense of “what we want”. At the other end is a superintelligence that won’t literally kill everyone as a byproduct of doing whatever it does.

Obviously the first requires the second, but we don’t know how to ensure even the second. There is an unacceptably high risk that we will get a superintelligence that does kill everyone, though different people put quite different magnitudes on exactly how high that risk is.

The biggest issue is that if the superintelligence “wants” anything (in the sense of carrying out plans to achieve it), we won’t be able to stop it from pursuing those plans. So a large part of alignment is either ensuring that it never actually “wants” anything (e.g. tool AI), or that we can somehow develop it in a way that it “wants” things that are compatible with our continued existence and ideally, flourishing. This is the core idea of “alignment”.

If alignment is misguided as a whole, then either superintelligence never occurs or humanity ends.

So the first one is an “AGSI”, and the second is an “ANSI” (general vs narrow)?

If I understand correctly… One type of alignment (required for the “AGSI”) is what I’m referring to as alignment: it is conscious of all of our interests and tries to respect them, like a good friend. The other is that it’s narrow enough in scope that it literally just does that one thing, way better than humans could, but within a scope narrow enough that we can hopefully reason about it and have some idea that it’s safe.

Alignment is kind of a confusing term if applied to ANSI, because to me at least it seems to suggest agency and aligned interests, whereas in the case of ANSI, if I understand correctly, the idea is to prevent it from having agency and interests in the first place. So it’s “aligned” in the same way that a car is aligned, i.e., it doesn’t veer off the road at 80 mph :-)

But I’m not sure I’ve understood correctly. Thanks for your help...

This interesting post was sitting at −1 total karma with 5 votes at the time of reading, so I strongly upvoted. It contains a brief but cogent argument that seems novel on LW. Though it might have been made before in more abstract and wordy terms, I still think the novelty of brevity is valuable.

Thank you, kind fellow!

I think your intuitions are essentially correct—in particular, I think many people draw poor conclusions from the strange notion that humans are an example of alignment success.

However, you seem to be conflating:

Fully general SI alignment is unsolvable (I think most researchers would agree with this)

There is no case where SI alignment is solvable (I think almost everyone disagrees with this)

We don’t need to solve the problem in full generality—we only need to find one setup that works.

Importantly, ‘works’ here means that we have sufficient understanding of the system to guarantee some alignment property with high probability. We don’t need complete understanding.

This is still a very high bar—we need to guarantee-with-high-probability some property that actually leads to things turning out well, not simply one which satisfies my definition of alignment.

I haven’t seen any existence proof for a predictably fairly safe path to an aligned SI (or aligned AGI...).

It’d be nice to know that such a path existed.

Thanks for your response! Could you explain what you mean by “fully general”? Do you mean that alignment of narrow SI is possible? Or that partial alignment of general SI is good enough in some circumstance? If it’s the latter could you give an example?

By “fully general” I mean something like “With alignment process x, we could take the specification of any SI, apply x to it, and have an aligned version of that SI specification”. (I assume almost everyone thinks this isn’t achievable)

But we don’t need an approach that’s this strong: we don’t need to be able to align all, most, or even a small fraction of SIs. One is enough—and in principle we could build in many highly specific constraints by construction (given sufficient understanding).

This still seems very hard, but I don’t think there’s any straightforward argument for its impossibility/intractability. Most such arguments only work against the more general solutions—i.e. if we needed to be able to align any SI specification.
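One way to make this quantifier distinction precise, in purely illustrative notation that is not from the original comment: let $\mathcal{S}$ be the set of possible SI specifications, $\mathcal{X}$ the set of alignment processes, and $\mathrm{Aligned}(s)$ a hypothetical predicate meaning that we can guarantee, with high probability, that specification $s$ has an alignment property that actually leads to things turning out well.

```latex
\begin{aligned}
&\text{Fully general (widely believed unachievable):} && \exists x \in \mathcal{X}\;\forall s \in \mathcal{S}:\ \mathrm{Aligned}(x(s)) \\
&\text{What is actually needed:} && \exists s \in \mathcal{S}:\ \mathrm{Aligned}(s)
\end{aligned}
```

Refuting the first statement, $\neg\,\exists x\,\forall s\ \mathrm{Aligned}(x(s))$, does not refute the second, which is why impossibility arguments aimed at fully general alignment leave the narrower target open.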

Here’s a survey of a bunch of impossibility results if you’re interested.

These also apply to stronger results than we need (which is nice!).