This is a new paper relating experimental results in deep learning to human psychology and cognitive science. I’m excited to get feedback and comments. I’ve included some excerpts below.

Abstract

This paper is about the cognitive science of visual art. Artists create physical artifacts (such as sculptures or paintings) which depict people, objects, and events. These depictions are usually stylized rather than photo-realistic. How is it that humans are able to understand and create stylized representations? Does this ability depend on general cognitive capacities or an evolutionary adaptation for art? What role is played by learning and culture?

Machine Learning can shed light on these questions. It’s possible to train convolutional neural networks (CNNs) to recognize objects without training them on any visual art. If such CNNs can generalize to visual art (by creating and understanding stylized representations), then CNNs provide a model for how humans could understand art without innate adaptations or cultural learning. I argue that Deep Dream and Style Transfer show that CNNs can create a basic form of visual art, and that humans could create art by similar processes. This suggests that artists make art by optimizing for effects on the human object-recognition system. Physical artifacts are optimized to evoke real-world objects for this system (e.g. to evoke people or landscapes) and to serve as superstimuli for this system.

From “Introduction”

In a psychology study in the 1960s, two professors kept their son from seeing any pictures or photos until the age of 19 months. On viewing line-drawings for the first time, the child immediately recognized what was depicted. Yet aside from this study, we have limited data on humans with zero exposure to visual representations.

...

For the first time in history, there are algorithms [convolutional neural nets] for object recognition that approach human performance across a wide range of datasets. This enables novel computational experiments akin to depriving a child of visual art. It’s possible to train a network to recognize objects (e.g. people, horses, chairs) without giving it any exposure to visual art and then test whether it can understand and create artistic representations.

From “Part 1: Creating art with networks for object recognition”

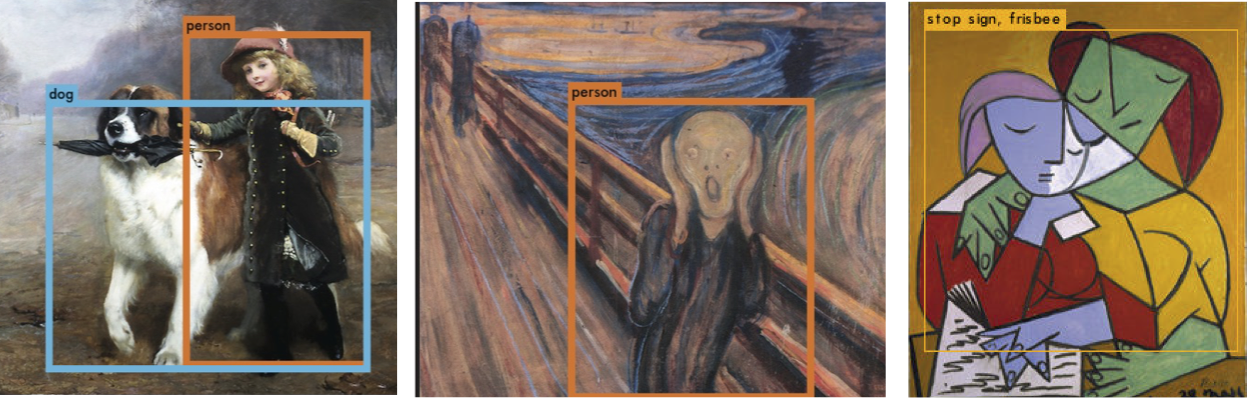

Figure 2. Outputs from testing whether a conv-net model can generalize to paintings. Results are fairly impressive overall. However, in Picasso painting on the right, the people are classified as “stop sign, frisbee”.

Figure 12. Diagram showing how Deep Dream and Style Transfer could be combined. This generates an image that is a superstimulus for the conv net (due to the Deep Dream loss) and has the style (i.e. low-level textures) of the style image. Black arrows show the forward pass of the conv net. Red arrows show the backward pass, which is used to optimize the image in the center by gradient descent.

Figure 13. Diagram showing how the process in Figure 12 can be extended to humans. This is the “Sensory Optimization” model for creating visual art. For humans, the input is a binocular stream of visual perception (represented here as “content video” frames). The goal is to capture the content of this input in a different physical medium (woodcut print) and with a different style. The optimization is not by gradient descent but by a much slower process of blackbox search that draws on human general intelligence.

Figure 14. Semi-abstract images that are classified as “toilet”, “house tick”, and “pornographic” (“NSFW”) by recognition nets. From Tom White’s “Perception Engines” and “Synthetic Abstractions” (with permission from the artist).

There’s a long history of development of artistic concepts; for example, the discovery of perspective. There’s also lots of artistic concepts where the dependence on the medium is highly significant, of which the first examples that come to mind are cave paintings (which were likely designed around being viewed by moving firelight) and ‘highly distorted’ ancient statuettes that are probably self-portraits.

So it seems to me like we should expect there to be a similar medium-relevance for NN-generated artwork, and a similar question of which artistic concepts it possesses and lacks. Perspective, for example, seems pretty well captured by GANs targeting the LSUN bedroom dataset or related tasks. It seems like it’s not a surprise that NNs would be good at perspective compared to humans, since there’s a cleaner inverse between the perceptive and the creation of perspective from the GAN’s point of view than the human’s (who has to use their hands to make it, rather than their inverted eyes).

That is, it seems that the similarities are actually pretty constrained to bits where the medium means humans and NN operate similarly, and the path of human art generation and the path of NN art generation look quite different in a way that suggests there are core differences. For example, I think most humans have pretty good facility with creating and understanding ‘stick figures’ that comes from training on a history of communicating with other humans using stick figures, rather than simply generalizing from visual image recognition, and we might be able to demonstrate the differences through a training / finetuning scheme on some NN that can do both classification and generation.

We might want to look for find concepts that are easier for humans than NNs; when I talk to people about ML-produced music, they often suggest that it’s hard to capture the sort of dependencies that make for good music using current models (in the same way that current models have trouble making ‘good art’ that’s more than style transfer or realistic faces or so on; it’s unlikely that we could hook a NN up to a DeviantArt account and accept commissions and make money). But as someone who can listen to lots of Gandalf nodding to Jazz, and who thinks there are applications for things like CoverBot (which would do the acoustic equivalent of style transfer), my guess is that the near-term potential for ML-produced music is actually quite high.

Great examples. I agree the physical medium is really important in human art: see my Section 1.3.1.

I like the point about hands vs. “inverted eyes”. At the same time, the GANs are trained on a huge number of photos, and these photos exhibit a perfect projection of a 3D scene onto a finite-size 2D array. The GAN’s goal is to match these photos, not to match 3D scenes (which it doesn’t know anything about). Humans invented perspective before having photos to work with. (They did have mirrors and primitive projection techniques.)

I agree that our facility with stick figures probably depends partly on the history of using stick figures. However, I think our general visual recognition abilities make us very flexible. For example, people can quickly master new styles of abstract depiction that differ from the XKCD style (say in a comic or a set of artworks). DeepMind has a cool recent paper where they learn abstract styles of depiction with no human imitation or labeling.

Currently humans play a major role in the interesting examples of neural art. Getting more artist-like autonomy is probably AI-complete, but I can imagine neural nets being more and more widely used in both visual art and music. I agree there’s great potential in neural music! (I suggest some experiments in my conclusion but there’s tons more that could be tried).

I’ve see some results here where I thought the consensus interpretation was “angle as latent feature”, such that there was an implied 3D scene in the latent space. (Most of what I’m seeing now with a brief scan has to do with facial rotations and pose invariance.) Maybe I should put scene is scare quotes, because it’s generally not fully generic, as the sorts of faces and rooms you find in such a database are highly nonrandom / have a bunch of basic structure you can assume will be there.

I like this exposition, but I’m still skeptical about the idea.

Since “art” is a human concept, it’s naturally a grab bag of lots of different meanings. It’s plausible that for some meanings of “art,” humans do something similar to searching through a space of parameters for something that strongly activates some target concept within the constraints of a style. But there’s also a lot about art that’s not like that.

Like art that’s non-representational, or otherwise denies the separation between form and content. Or art that’s heavily linguistic, or social, or relies on some sort of thinking on the part of the audience. Art that’s very different for the performer and the audience, so that it doesn’t make sense to talk about a search process optimizing for the audience’s experience, or otherwise doesn’t have a search process as a particularly simple explanation. Art that’s so rooted in emotion or human experience that we wouldn’t consider an account of it complete without talking about the human experience. Art that only makes sense when considering humans as embodied, limited agents.

So if I consider the statement “the DeepDream algorithm is doing art,” there is a sense in which this is reasonable. But I don’t think that extends to calling what DeepDream does a model for what humans do when we think about or create art. We do something not merely more complicated in the details, but more complicated in its macros-structure, and hooked into many of the complications of human psychology.

I agree there’s great variety and intellectual sophistication in art. My paper argues that the Sensory Optimization model captures *some* (not all) key properties of visual art. The model is simple, easy to experiment with (e.g. generating art-like images), and captures a surprising amount. That said, there are probably simple computational models that could do better and I’d be excited to see concrete proposals.

The paper does touch on some of your concerns. Feature Visualization can generate non-representational images (Section 1.2). I suspect these images could be made more aesthetic and evocative by training on datasets with captions that include human emotional and aesthetic responses (Section 2.3), and the same goes for art that’s strongly rooted in emotions (Section 2.3.3). Do you have examples in mind when you mention “human experience” and “embodiment” and “limited agents”? I don’t really address art where the artist has different knowledge/understanding than the audience and that’s an important topic for further work (Section 2.3.4 is related).

I agree that lots of art (including some painting) is “heavily linguistic, or social, or relies on … thinking on the part of the audience”. Having a computational model that can generate this kind of art is plausibly AGI-complete. Yet (as already noted) it’s likely we can do better than my current model.

(In general, I’m optimistic about what neural nets can create by Sensory Optimization and related techniques. Current neural nets have zero experience of the physical act of painting or drawing. They have no understanding of how animals or humans move and act in the world or of human values or interests. Yet even with zero prior training on visual art they can make pretty impressive images by human lights. I think this was surprising to most people both in and outside deep learning. I’m curious whether this was surprising to you.)

Regarding your last paragraph, I want to make some clarifications. I don’t express a view about whether Deep Dream makes art. I claim that by combining ideas from Deep Dream and Style Transfer with richer datasets we could create something close to a basic form of human visual art. I don’t claim that the creative process for humans is like optimization by gradient descent. Instead, humans optimize by drawing on their general intelligence (e.g. hierarchical planning, analytical reasoning, etc.).

Thanks for the reply :)

Sure, you can get the AI to draw polka-dots by targeting a feature that likes polka dots, or a Mondrian by targeting some features that like certain geometries and colors, but now you’re not using style transfer at all—the image is the style. Moreover, it would be pretty hard to use this to get a Kandinsky, because the AI that makes style-paintings has no standard by which it would choose things to draw that could be objects but aren’t. You’d need a third and separate scheme to make Kandinskys, and then I’d just bring up another artist not covered yet.

If you’re not trying to capture all human visual art in one model, then this is no biggie. So now you’re probably going “this is fine, why is he going on about this.” So I’ll stop.

For “human experience,” yeah, I just means something like communicative/evocative content that relies on a theory of mind to use for communication. Maybe you could train an AI on patriotic paintings and then it could produce patriotic paintings, but I think only by working on theory of mind would an AI think to produce a patriotic painting without having seen one before. I’m also reminded of Karpathy’s example of Obama with his foot on the scale.

For embodiment, this means art that blurs the line between visual and physical. I was thinking of how some things aren’t art if they’re normal sized, but if you make them really big, then they’re art. Since all human art is physical art, this line can be avoided mostly but not completely.

For “limited,” I imagined something like Dennett’s example of the people on the bridge. The artist only has to paint little blobs, because they know how humans will interpret them. Compared to the example above of using understanding of humans to choose content, this example uses an understanding of humans to choose style.

It was impressive, but I remember the old 2015 post the Chris Olah co-authored. First off, if you look at the pictures, they’re less pretty than the pictures that came later. And I remember one key sentence: “By itself, that doesn’t work very well, but it does if we impose a prior constraint that the image should have similar statistics to natural images, such as neighboring pixels needing to be correlated.” My impression is that DeepDream et al. have been trained to make visual art—by hyperparameter tuning (grad student descent).

Again, replicating all human art is probably AGI-complete. However, there are some promising strategies for generating non-representational art and I’d guess artists were (implicitly) using some of them. Here are some possible Sensory Optimization objectives:

1. Optimize the image to be a superstimulus for random sets of features in earlier layers (this was already discussed).

2. Use Style Transfer to constrain the low-level features in some way. This could aim at grid-like images (Mondrian, Kelly, Albers) or a limited set of textures (Richter). This is mentioned in Section 1.3.1.

3. If you want the image to evoke objects (without explicitly depicting them), then you could combine (1) and (2) with optimizing for some object labels (e.g. river, stairs, pole). This is simpler than your Kandinsky example but could still be effective.

4. In addition to (1) and (2), optimize the image for human emotion labels (having trained on a dataset with emotion labels for photos). To take a simplistic example: people will label photos with lots of green or blue (e.g. forest or sea or blue skies) as peaceful/calming, and so abstract art based on those colors would be labeled similarly. Red or muddy-gray colors would produce a different response. This extends beyond colors to visual textures, shapes, symmetry vs. disorder and so on. (Compare this Rothko to this one).

I agree with your general point about the relevance of theory of mind. However, I think Sensory Optimization could generate patriotic paintings without training on them. Suppose you have a dataset that’s richer than ImageNet and includes human emotion and sentiment labels/captions. Some photos will cause patriotic sentiments: e.g. photos of parades or parties on national celebrations, photos of a national sports team winning, photos of iconic buildings or natural wonders. So to create patriotic paintings, you would optimize for labels relating to patriotism. If there are emotional intensity ratings for photos, and patriotic scenes cause high intensity, then maybe you could get patriotic paintings by just optimizing for intensity. (Facebook has trained models on a huge image dataset with Instagram hashtags—some of which relate to patriotic sentiment. Someone could run a version of this experiment today. However, I think it’s a more interesting experiment if the photos are more like everyday human visual perception than carefully crafted/edited photos you’ll find on Instagram.)

Again, I expect a richer training set would convey lots of this information. Humans would use different emotional/aesthetic labels on seeing unusually large natural objects (e.g. an abnormally large dog or man, a huge tree or waterfall).

Some artworks depend on idiosyncratic quirks of human visual cognition (e.g. optical illusions). It’s probably hard for a neural net to predict how humans will respond to all such works (without training on other images that exploit the same quirk). This will limit the kind of art the Sensory Optimization model can generate. Still, this doesn’t undermine my claim that artists are doing something like Sensory Optimization. For example, humans have a bias towards seeing faces in random objects—pareidolia. By exploiting this, artists exploit an image that looks like two things at once. (The artist knows the illusion will work, because it works on his or her own visual system).

I think this first blogpost on Deep Dream and the original paper on Style Transfer already were already very impressive. The regularization tweak for Deep Dream is very simple and quite different from what I mean by “training on visual art”. (It’s less surprising that a GAN trained on visual art can generate something that looks like visual art—although it is surprising how well they can deal with stylized images.)