Physicalism: consciousness as the last sense

Follow-up to “There just has to be something more, you know?” and “The two insights of materialism”.

I have suggested that one cause of the common reluctance to consider physicalism (in particular, the claim that our minds can in principle be characterized entirely by physical states) is an asymmetry in how people perceive characterization. This can be alleviated by analogy to how our external senses can supervene on each other, and how abstract manipulations of those senses using recording, playback, and editing technologies have made such characterizations useful and intuitive.

We have numerous external senses, and at least one internal sense that people call “thinking” or “consciousness”. In part because you and I can point our external senses at the same objects, collaborative science has done a great job characterizing them in terms of each other. The first thing is to realize the symmetry and non-triviality of this situation.

First, at a personal level: say you’ve never sensed a musical instrument in any way, and for the first time, in the dark, you hear a cello playing. Then later, you see the actual cello. You probably wouldn’t immediately recognize these perceptions as being of the same physical object. But watching and listening to the cello playing at the same time would certainly help, and physically intervening yourself to see that you can change the pitch of the note by placing your fingers on the strings would be the clincher: you’d start thinking of that sound, that sight, and that tactile sense as all coming from one object “cello”.

Before moving on, note how in these circumstances we don’t conclude that “only sight is real” and that sound is merely a derivative of it, but simply that the two senses are related and can characterize each other, at least roughly speaking: when you see a cello, you know what sort of sounds to expect, and conversely.

Next, consider the more precise correspondence that collaborative science has provided, which follows a similar trend: in the theory of characterizing sound as longitudinal compression waves, first came recording, then playback, and finally editing. In fact, the first intelligible recording of a human voice, in 1860, was played back for the first time in 2008, using computers. So, suppose it’s 1810, well before the invention of the phonautograph, and you’ve just heard the first movement of Beethoven’s 5th. Then later, I unsuggestively show you a high-res version of this picture, with zooming capabilities:

If you’re really smart, and have a great memory, you might notice how the high and low amplitudes of that wave along the horizontal axis match up pretty well with your perception of how loud the music is at successive times. And if you zoom in, you might notice that finer bumps on the wave match up pretty well with times you heard higher notes. These connections would be much easier to make if you could watch and listen at the same time: that is, if you could see a phonautograph transcribing the sound of the concert to a written wave in real-time while you listen to it.

Even then, almost anyone in their right mind from 1810 would still be amazed that such an image and the right interpretive mechanism (say, a computer with great software and really good headphones) are enough to perfectly reproduce the sound of that performance to two stationary ear canals, right down to the audible texture of horse-hair bows against catgut strings and ever-so-politely restless audience members. They’d be even more amazed that Fourier analysis on a single wave can separate out the individual instruments to be listened to individually at a decent precision.
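To make the waveform-to-perception correspondence a bit more concrete, here is a minimal sketch in plain Python. Everything in it is an assumption for illustration: the sample rate, the two synthetic pure tones, and the helper names `tone`, `rms`, `dft_magnitudes`, and `strongest_frequencies` are all made up here, and a naive discrete Fourier transform stands in for whatever real audio software would use. It shows only that overall wave height tracks loudness and that Fourier analysis can recover the component frequencies of a summed wave; separating real instruments, whose harmonics overlap, is a much harder problem.

```python
import cmath
import math

SAMPLE_RATE = 8000  # samples per second; an arbitrary choice for this sketch


def tone(freq_hz, amplitude, seconds):
    """Return samples of a pure sine tone (a stand-in for a single note)."""
    n = int(SAMPLE_RATE * seconds)
    return [amplitude * math.sin(2 * math.pi * freq_hz * t / SAMPLE_RATE)
            for t in range(n)]


def rms(samples):
    """Root-mean-square height of the wave, a rough proxy for loudness."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def dft_magnitudes(samples):
    """Naive O(n^2) discrete Fourier transform, magnitudes only.

    Returns one normalized magnitude per frequency bin below half the
    sample rate.
    """
    n = len(samples)
    return [abs(sum(s * cmath.exp(-2j * math.pi * k * t / n)
                    for t, s in enumerate(samples))) / n
            for k in range(n // 2)]


def strongest_frequencies(samples, count):
    """Return the `count` strongest frequencies in Hz, in ascending order."""
    mags = dft_magnitudes(samples)
    n = len(samples)
    top_bins = sorted(range(len(mags)), key=lambda k: mags[k], reverse=True)[:count]
    return sorted(k * SAMPLE_RATE / n for k in top_bins)


# A quiet low note and a loud high note, each lasting a tenth of a second.
quiet_low = tone(200, 0.3, 0.1)
loud_high = tone(600, 1.0, 0.1)

# Taller wave, louder sound: the loud note has the larger RMS height.
print("RMS of quiet note:", rms(quiet_low))
print("RMS of loud note: ", rms(loud_high))

# Play both at once: the result is one wave, but its two component
# frequencies can still be read back out of it.
mixture = [a + b for a, b in zip(quiet_low, loud_high)]
print("Strongest frequencies in the mixture:", strongest_frequencies(mixture, 2))
```

The design is deliberately crude: the tones are chosen to fit the analysis window exactly, so each lands in a single frequency bin. Real recordings would need windowing and a fast transform, but the point about reading pitch and loudness off a picture of a wave is the same.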

But our modern experiences with audio recording, editing, and playback, and in particular the fact that we can control sound by playing back and manipulating abstract representations of sound waves, have led us to deeply internalize a model of sound (if not hearing) as a “merely physical” phenomenon. Just as one person easily develops the notion of a single “cello” as they see, hear, and play cello at the same time, collaborative science has developed the notion of a single object or model called “physical reality” to have a clear meaning in terms of our external senses, because those are the ones we most easily collaborate with.

Now let’s talk about “consciousness”. Consider that you have experienced an inner sense of “consciousness”, and you may be lucky enough to have seen functional magnetic resonance images of your own brain, or even luckier to watch them while they happen. These two senses, although they are as different as the sight and sound of a cello, are perceptions of the same object: “consciousness” is a word for sensing your mind from the inside, i.e. from actually being it, and “brain” is a word for the various ways of sensing it from the outside. It’s not surprising that this will probably be the last of our senses to be usefully interpreted scientifically, because it’s apparently very complicated, and the hardest one to collaborate with: although my eyes and yours can look at the same chair, our inner senses are always directed at different minds.

Under Descartes’ influence, the language I’m using here is somewhat suggestive of dualism in its distinction between physical phenomena and our perceptions of them, but in fact it seems that some of our sensations simply are physical phenomena. Some combination of physical events (like air molecules hitting the eardrum, electro-chemical signals traversing the auditory nerves, and subsequent reactions in the brain) is the phenomenon of hearing. I’m not saying your experience of hearing doesn’t happen, but that it is the same phenomenon as that described by physics and biology texts using equations and pictures of the auditory system, just as the sight and sound of a cello are direct descriptions of the same object “cello”.

But when people consider consciousness supervening on fundamental physics, they often end up in a state of mind that is better summarized as thinking “pictures of dots and waves are all that exists”, without an explicit awareness that they’re only thinking about the pictures. And this just isn’t very helpful. A brain is not a picture of a brain any more than it is the experience of thinking; in fact, in stages of perception, it’s much closer to the latter, since a picture has to pass through the retina and optic nerve before you experience it, but the experience of thinking is the operation of your cerebral network.

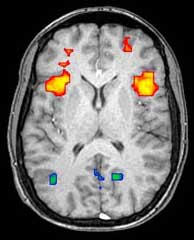

Indeed, the right interpretative mechanism — for now, a living human body is the only one we’ve got — seems enough to produce to “you” the experience of “thinking” from specific configurations of cells, and hence particles, that can be represented (for the moment with low fidelity) by pictures like this:

In our progressive understanding of mind, this is analogous to the simultaneous-watching-and-listening phase of learning: we can watch pictures of our brains while we “listen” to our own thoughts and feelings. If at some point computers allow us to store, manipulate, and re-experience partial or complete mental states by directly interfacing with the brain, we’ll be able to update our mind-is-brain model with the same sort of confidence as sound-is-longitudinal-compression-waves. Imagine intentionally thinking through the process of solving a math problem while a computer “records” your thoughts, then using some kind of component analysis to remove the “intention” from the recording (which may not be a separable component, I’m just speculating), and then playing it back into your brain in real-time so that you experience solving the problem without trying to do it.

Wouldn’t you then begin to accept characterizing thoughts as brain states, like you characterize sounds as compression waves? A practical understanding like that, at the level of abstract manipulation, would be the clincher for me. And naively, it seems no more alien than the complete supervenience of sound or smell on visual representations of them.

This isn’t an argument that the physicalist conception of consciousness is true, but simply that it’s not absurd, and follows an existing trend of identifications made by personal experiences and science. Then all you need is heaps and loads of existing evidence to update your non-zero prior belief to the point where you recognize it’s got the best odds around. If they ever happen, future mind-state editing technologies could make “thoughts = brain states” feel as natural as playing a cello without constantly parsing “the sight of cello” and “the sound of cello” as separate objects.

Even as these abstract models become more precise and amenable than our intuitive introspective models, this won’t ever mean thought “isn’t real” or “doesn’t happen”, any more than sight, touch, or hearing “doesn’t happen”. You can touch, look at, and listen to a cello, yielding very different experiences of the exact same object. Likewise, if one demands a dualist perceptual description, when you think, you’re “introspecting at” your brain. Although that’s a very different experience from looking at an fMRI of your brain, or sticking your finger into an anaesthetized surgical opening in your skull, if modern science is right, these are experiences of the exact same physical object: one from the inside, two from the outside.

In short, consciousness is a sense, and predictive and interventional science isn’t about ignoring our senses… it’s about connecting them. Physics doesn’t say you’re not thinking, but it does connect and superveniently reduce what you experience as thinking to what you and everyone else can experience as looking at your brain.

It’s just that awesome.

To anyone who really suffers from doubt about the physical nature of consciousness (...only sometimes, and I’m not proud of it) this line of thinking is pretty exasperating. Yes, the easy problem is easy, and most responsible dualists are happy enough admitting that the mind operates through physical means detectable on an MRI and we gain information about our own mental states through physical neural function...

...none of which makes any difference to the question that non-physicalists actually worry about, the so-called hard problem. Even calling consciousness a “sense” is admitting the lack of a solution to the hard problem: senses are the modalities by which objects are perceived. So our inner states are the objects, consciousness is the modality, and then who’s doing the perceiving? More consciousness? Who’s perceiving that? Hofstadter’s solution of the strange loop is interesting but so nontechnical as to be useless.

Calling consciousness a sense, insofar as it’s not just an equivocation on the term “consciousness”, passes the recursive buck. The subjective level at which the senses bottom out remains just as poorly understood as before. This is more of an argument that thinking is purely physical (and a good one). I’m hoping you’ll get to what I think of as “consciousness” later on in the series.

On the other hand, thanks for that link to the voice recording made in 1860. Getting to hear the oldest accessible human sound in the world? Pretty neat.

A physical substrate, of course. Note that I am not using the word “brain”: we don’t know anything about what the substrate looks like from a materialistic point of view, except that it is somehow strongly related to the brain, and the fine-grained states and processes of the physical substrate should comprehensively explain our inner phenomenology.[1]

[1] This is a surprisingly strong condition. Fourier analysis on a waveform cannot comprehensively account for our auditory perceptions, because it doesn’t account for psychoacoustics. Spectrum analysis on a source of visible light cannot explain the perception of color, since e.g. people have different cone photoreceptors, etc.

You made this point in a previous post, I think. Not that I’m complaining—it’s well worth the emphasis.

But it lays the groundwork, I think. Comparing our grasp of the same object via different senses reminds us that we have different ways of apprehending a single item. Comparing our grasp of the same property via different senses would be even more instructive. Consider visual and tactile assessments of linearity. We consider these to be two ways of apprehending the same property. But it is just barely conceivable that we are wrong. Conceivably—although not with enough probability to warrant revising the way we now talk and think about linearity—future discoveries will lead us to separate linear(tactile) from linear(visual).

This is important to the “hard problem” because many non-physicalists have argued from the premise that there will always be a conceptual gap between physical and mental descriptions, to the conclusion that these descriptions pick out different properties. “Conceptual gap” here means just that it is conceivable that one description could apply and the other not apply. The premise, I think, is true, but the inference is invalid. Linearity is a single property that we can pick out with two concepts, tactile linearity and visual linearity, that have a (very thin, but that’s all we need!) conceptual gap between them. Linearity is therefore a counterexample to the alleged inference principle.

I was hoping to pre-empt this sort of confusion in my paragraph starting with

The point is that if physics is right, the distinction between senses and the “objects of sensation” is really just the distinction between the physical phenomenon of sensing and the penultimate causes of such phenomena, outside our bodies.

Think of it this way: of course senses are physical phenomena… you can sense them! At some point when you were a child, being able to sense something is what it meant for it to be physical. I think that’s still the right idea.

You’re talking about three things—object of sensation, sense, and mental representation of sensation. I’m talking about four things—object of sensation, sense, mental representation of sensation, and subject. I think we both agree on everything about the first three and that all of them are physical and inter-convertible, but I’m not sure whether you’re even acknowledging the existence of the fourth.

A friend goes to Africa and sees an elephant. He takes a photograph and sends it to you in .ZIP format via email. You download it, unzip it, and you see the image.

In this case, the elephant is the object of sensation. Your friend is the sense of vision. The photograph is the sensory representation of the elephant, the email is the optic nerve, and the unzipper is the visual cortex. The thesis of this post is that your sense of vision (within the metaphor) is equivalent to your sense of consciousness (outside the metaphor), and that’s fine, but then what’s the equivalent of you? (wow that metaphor came out badly)

And I know that the answer in a neurological sense is that different features of the elephant activate various forms of mental processing which result in actions like saying “Wow, great elephant photo” and the like, but that’s an answer that works equally well for humans and p-zombies. The philosophical answer that explains the subjective sensation is harder to come by.

Thanks for posing the question so clearly; and roughly speaking, yes, I see no need to separate the notion of “subject” and the last phases of the sensation process in the brain. I am the phenomenon of my perceptions and behavior. What else would I call “me”?

That you ask your question with an analogy is no coincidence: what leads one to ask for a subject is precisely a misapplication of metaphor. You are mapping an event chain of length N into an event chain of length N+1, and concluding that the first chain is therefore missing something: An object is like an object, a sense is like a transmission about it, so what’s like the recipient of the transmission?

Well, no… at the end, at the visual cortex or wherever visual perception happens (as mind-state “playback” technology might help us verify), the perception is not a transmission… it’s the recipient of it. And “you” are just a collage of such recipients (and subsequent phenomena if you identify with your thoughts and actions too).

I hope that this series will eventually progress to an explanation of what it means for a perception to be its own recipient or for a subject to be a collage of such perceptions. It sounds promising, and I have lowered my probability that you are secretly a p-zombie (see the paragraph here starting with “He kind of took this idea and ran with it”), but I definitely want to know more.

There’s no causal loop… input is a previous brain-state, and output is a subsequent brain-state.

Subjective sensation of perceiving a photo is explained by an event in your brain of perceiving the photo, in the same sense that an e-mail with a photo of an elephant is explained by the elephant. With e-mail and the elephant, the map-territory distinction is easy to make. With experience of perception and perception itself, it’s harder, because the same neurons in the same brain are involved in both, over overlapping periods of time. Change the elephant analogy to a crowd of people, all of whom are taking pictures of each other and e-mailing them to each other’s phones.

Whenever I come across a conversation concerning the mind and its relation to physics I think of what a cognitive science lecturer of mine had to say on the subject.

Fred Cummins

Analogy between hearing and consciousness as ways of learning about reality, extended to include playback… Absolutely beautiful!

This sequence is progressing well!

You correctly pointed out that we don’t yet have very strong evidence that our inner senses supervene on our external senses. While neuroscience is maturing, all we have is evidence of at least a partial relationship between the inner senses and the external senses, and a nonzero prior belief that supervenience may in fact obtain.

I think we have reason to have a very strong prior belief that supervenience obtains. Suppose we had an extra external sense that had, so far, evaded scientific explanation. Imagine being able to sense the presence of dark matter, or psychic auras or something. Based on our previous successes in analyzing sight, hearing, and so on, wouldn’t we be right to feel confident that this extra sense did in fact supervene on the other senses, and that we would eventually figure it out?

Voted up; liked the approach. But as always there are a few things that I would rather look at differently. I think ‘brain states’ is a bit misleading and prefer ‘brain processes’. It doesn’t seem like the brain is a very static thing, passing from state to state. I also prefer to separate the ideas of thought and of awareness for clarity about which is being examined in any statement. This is nit-picking I know and does not take anything away from your argument.

I don’t know what this post is claiming. I have the feeling it’s supposed to favor something like Dennett’s stance on consciousness. But the example used, of music, doesn’t do what it’s supposed to do. We know how to reduce sound to physics. (It’s surprising that it can be reduced to a single waveform, but not that it can be reduced to physics.) We don’t know how to reduce our perception of sound to physics.

You’re right that the focus of the post is not to reduce our perception of sound to physics (it is rather to demystify thinking as just another perception), but it does briefly address this: the claim is that your perception of sound is an event in the brain. See my reply to Yvain … it seems we’re trading those today :)