Authors: Can Rager[1] and Kyle Webster, PhD

Summary

The scalability of modern computing hardware is limited by physical bottlenecks and high energy consumption. These limitations could be addressed by neuromorphic hardware (NMH) which is inspired by the human brain. NMH enables physically built-in capabilities of information processing at the hardware level. In other words, brain-like features bias hardware towards intelligence at scale. In Table 1 we compare computing devices by their ability to scale (scaling features) and adaptation of brain-inspired concepts (neuromorphic features).

We argue NMH may play a significant role in AI deployment. Common modern processors like GPUs are limited in energy efficiency, execution time, and transistor size. Meanwhile, brain-like features of NMH enable lower energy consumption, faster inference times, and a smaller form factor. Therefore, the current shift from centralized computing clusters to distributed edge devices incentivizes NMH development.

Neuropmorphic computing paradigms require a novel approach to safe, interpretable AI. In order to effectively engage the risk of misaligned AI, safety research may need to expand its scope to include NMH. This may be best achieved by supporting those currently engaged in NMH capability research to work on safety and related areas. We are not aware of active research focused on the interpretability of AI on the hardware level. In this post we assess whether such an initiative is required.

Table 1: Comparison of computational hardware. Comparison of the human brain with neuromorphic hardware (atomic switching networks) and a modern GPU (NVIDIA A100 PCIe 80GB). NMH shows higher energy efficiency than modern GPUs while also exhibiting brain-inspired features. Note that challenges in controlling nanowire networks currently limit their use cases[2]. Overcoming these challenges may increase our concern, as discussed in section 3.

| human brain | neuromorphic hardware | GPU | |

scaling features node density[3] | |||

neuromorphic features self-assembly | yes | yes | no |

1. Brief introduction to nanowires

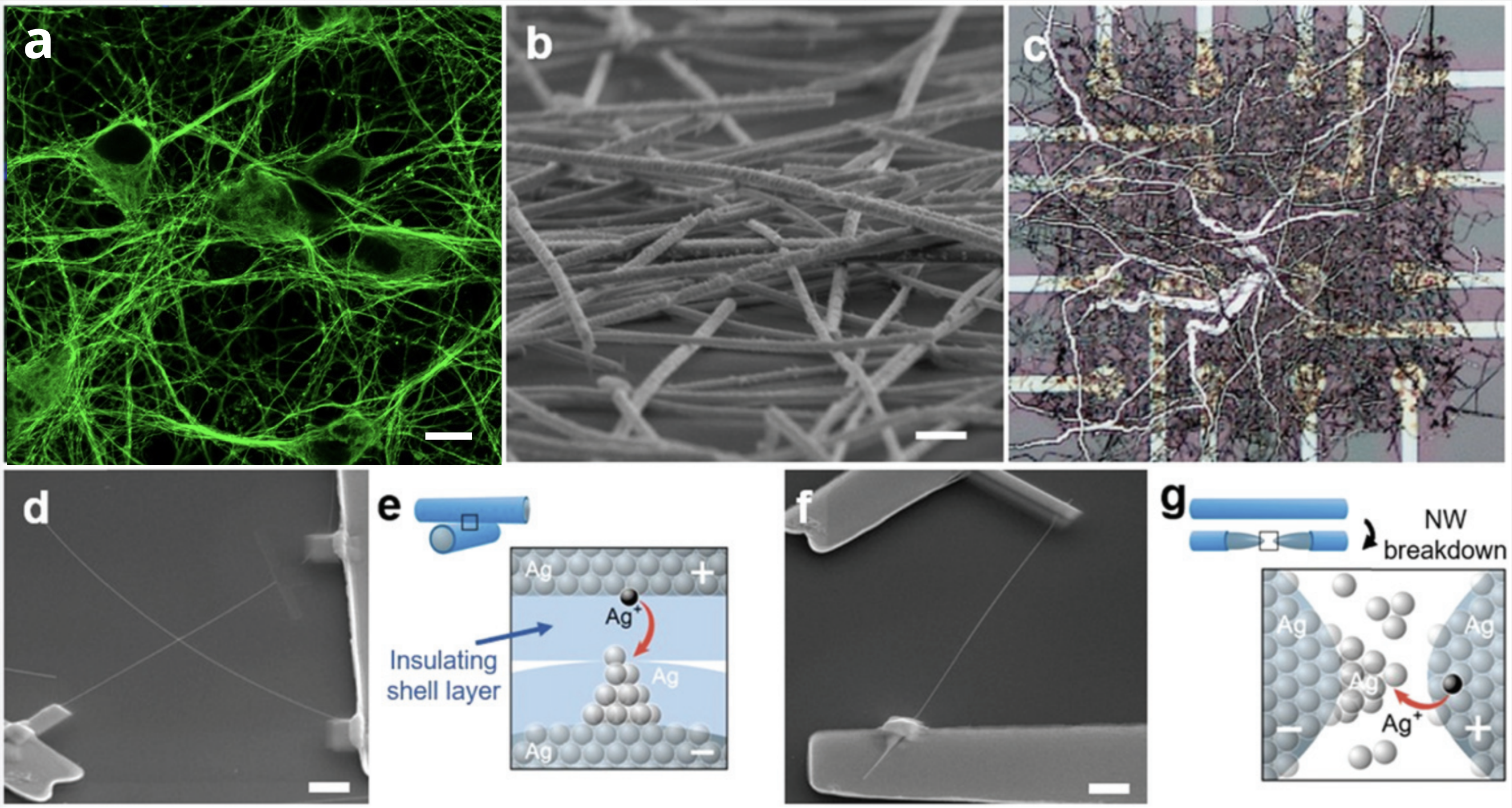

Biological brains have a spatially complex structure (fig. 1a). This structure inspired the design of computational hardware with highly interconnected nanowires (fig. 1b). The non-deterministic arrangement of wires is formed through self-assembly: Networks are exclusively shaped by microscopic interactions among individual nanowires[17].

The cross-point junctions between nanowires allow electrical signals to propagate through the network. Figure 1c shows a nanowire network placed on a chip with multiple readout ports. Feeding and measuring electrical signals across the network enables information processing. Furthermore, dynamic properties of nanowires enable short-term memory at the hardware level. Network plasticity (the ability to reorganize the network structurally and functionally through experience) is governed by two mechanisms[14]:

Reweighting by manipulating the conductivity of junctions (fig. 1d, 1e).

Rewiring by forcing a rupture or reconnection of single nanowires (fig. 1f, 1g).

Figure 1: Brain structure and nanowire networks. (a) Biological neural network: Mouse hippocampus neurons stained for beta-III-tubulin after 6 days in culture (scale bar, 10 μm). Adapted from[18][19]. (b) A biologically inspired memristive nanowire network (scale bar, 500 nm). Adapted from[14] under the terms of Creative Commons Attribution 4.0 License, copyright 2020, Wiley-VCH. (c) Atomic switch network of Ag wires placed on a chip with multiple readout ports. Adapted from[11], copyright 2013, IOP Publishing. (d) and (e) Single NW junction device where the memristive mechanism rely on the formation/rupture of a metallic conductive filament in between metallic cores of intersecting NWs under the action of an applied electric field and (f) and (g) single NW device where the switching mechanism, after the formation of a nanogap along the NW due to an electrical breakdown, is related to the electromigration of metal ions across this gap. Images and description adapted from[14][2] under the terms of Creative Commons Attribution 4.0 License, copyright 2020, Wiley-VCH.

To our knowledge training algorithms exclusively relying on reweighting and rewiring do not yet exist. In section 3 we argue control of plasticity is the main bottleneck of AI applications with nanowires. The systematic optimization of plasticity requires the following advances:

List 1: Current challenges in controlling plasticity of nanowires[20].

Increasing the number of functional input/output ports in nanowire devices

Designing interfaces (e.g. for translating images to a time-dependent input signal to the network)

Selecting optimal materials capable of short-term and long-term memory

Modeling of junction conductivity and switching behavior at scale

Understanding the impact of scale-invariance and criticality on emerging intelligence

1.1 Potential use case: Efficient inference with nanowires networks

Nanowires have the potential to become the new standard for inference devices. We first describe an existing training framework suitable for nanowires. Then, we highlight why nanowires potentially outperform common hardware in fabrication cost, energy efficiency, and computation speed.

Training framework

Backpropagation has been successfully applied to well-understood physical systems. Physics-aware training (PAT) enabled crystals and microphones to solve audio and image classification tasks[21]. Here, an external digital device feeds trainable parameters to the physical system as a separate input. The parameters are optimized with backpropagation. Once optimal parameters have been determined the external digital device is no longer required. The physical system is then able to perform inference on its own. As training execution is outsourced to a separate device, the physical system itself can be optimized for inference. Indeed, physical systems trained with PAT outperform inference with modern GPUs in energy efficiency and execution time[21].

Nanowire networks will become a suitable candidate for PAT once the issues in list 1 are better understood. Nanowires have been proven to outperform static systems in a basic AI task ( benchmark[12] on image classification with reservoir computing[22]). Therefore, nanowires may become a primary candidate for PAT applications.

Fabrication cost

Common hardware like GPUs need to take the exact form of crossbar arrays to function properly. This specific design requires high manufacturing standards such as cleanroom facilities and high precision nano-lithography[23]. In contrast to that, self-assembled hardware such as nanowire networks do not require an explicitly predefined architecture[14]. Nanowire networks are created by casting a drop of nanowires in suspension on an insulating surface. This technique creates an unique arrangement of individual wires for each new device. Computational capability can be realized from many different morphologies as a starting point for network training. This indicates robustness to impure manufacturing. Therefore, self-assembly potentially enables cheaper production of hardware compared to common GPUs. This potential for low cost manufacturing also supports the case for use of nanowire technology in PAT applications.

Energy efficiency and computation speed

Spike-based computing mimics the propagation of time-dependent signals through the human brain[24]. Here, a part of the input is encoded in signal timing. The reduction of the total amount of input signals yields a reduction in energy consumption and faster inference[25][26][27]. Scaled spike-based networks have already been implemented in non-self-assembled NMH. Their application meets the state of the art whilst drastically reducing energy consumption[28][29][30]. There is a potentially important connection to the state of safety research here as we are not aware of interpretability research focused on spike-based computing. However, work on this problem could be undertaken by well placed capability researchers and may prove complementary to efforts in spike-based systems as undertaken by researchers focused on algorithms. We are not aware of work currently focused on either of these specific areas.

Secondly, in-memory computing is a key feature of NMH. The separation between memory and processing units is a standard in modern hardware design[31]. This architecture requires a data transfer between both units that limits the overall execution speed (also called the von-Neumann-bottleneck). Inspired by the human brain, in-memory computing overcomes this bottleneck. An ideal in-memory processor performs matrix vector multiplication with 𝒪(1) time complexity by taking advantage of analogue memory units[32] (compared to 𝒪(N2) time complexity in common processors). This paradigm potentially increases the energy efficiency and computation speed by two orders of magnitude[33][34]. Limited experimental progress has demonstrated the viability and direction of improvement for in-memory processors[35][36].

2. Mapping concerns onto the current state of technology

Advanced NMH presents a cluster of concerns. Here, we focus primarily on interpretability and capability in the form of human disempowering AI.

Interpretability

Interpretability can significantly increase the probability that AI systems are beneficial to all stakeholders, particularly in complex applications. Uninterpretable models complicate auditing processes and may lead to unintended and human disempowering decisions.

Further research on physical dynamics of NMH supports interpretability in our view. Understanding the large-scale dynamics of analog memory units is a necessary step towards model explainability. Nanowire networks are a particularly useful device to study: The self-assembled structure offers insight into scale-governed dynamics – also observed in mammal brains[37].

AI research on emergent behavior of large language models explicitly formulate scaling laws[38][39][40]. These laws could provide valuable insight for large-scale switching dynamics of NMH. We recommend closer alignment of NMH research to interpretability efforts (such as those led by Anthropic and Redwood Research).

Misaligned artificial general intelligence

In line with the scaling hypothesis[41], generalization and sophisticated behavior emerges from upscaling basic AI models with narrow capabilities. A growing field of researchers concerned by a potential emergence of artificial general intelligence (AGI)[42]. An AGI is able to define and execute instrumental sub-tasks to achieve objectives. By default, an AGI could seek power as an instrumental subtask to achieve almost any overall goal set by humans. Such a behavior might lead to disempowerment of humans and could be an existential risk to humanity[43].

Current nanowire networks exhibit dominant short-term memory[12]. This decreases the likelihood of long-term self-optimizing capabilities. However, this risk should be tracked by capabilities researchers as advances in hardware are both anticipated and realized. An effective response to emerging risks may require action prior to new technologies being realized, even in a relatively controlled research environment.

Whether neuromorphic features bias advanced AI towards consciousness remains an open question. The generalization capability of conscious systems potentially increases the risk of misaligned AGI. We recognise further exploration of these claims as a valid domain of inquiry but have ruled them out of scope of the current piece.

3. Potential developments that would increase or decrease safety concern

Throughout this section we describe potential developments that would increase concerns discussed in section 2.

The potential development of long-term memory within nanowire networks would support feedback loops during the training process. This may increase the risk of misaligned AI. Further, the ability to control nanowire plasticity is central for realizing AI applications. As shown in table 1, the physical scalability of NMH is already comparable to modern GPUs. Once plasticity training is available, nanowire networks could become readily scalable hardware for AI applications. Advances in challenges named in list 1 increase the likelihood of controllable nanowire networks.

We expect nanowire devices would undergo training with an external digital device (PAT) as discussed in section 1.1. This external training device may serve as a useful reference to further understand nanowire dynamics. The realization of PAT with nanowires and the performance and other properties of such systems would therefore provide an additional data source for AGI risk assessment.

Self-assembling networks could require lower manufacturing standards than classical computer chips[14]. The release of benchtop manufacturing devices or ‘kits’ for the production of self-assembled NMH by at first researchers, but later hobbyists would increase concern regarding advanced AI. Low-barriers to production would enable a broad audience to take part in the experimental development of self-assembled NMH. Accessible manufacturing potentially increases dual use risk as it accelerates the development of NMH and increases the risk of unilateral action. Additionally, this accessibility could worsen race dynamics towards advanced AI. This situation would complicate the monitoring of AI advances by governmental institutions or third parties. Analogous concerns are currently visible within the field of biosafety and 3D printed weapons.

Criticality may be an indicator for intelligence in nanowire networks. “The concept of criticality refers to a system poised at the point between ordered and disordered states (a ‘critical point’), analogous to a phase transition.”[37]. Multiple studies suggest critical dynamics may play a role in information processing and task performance in biological systems[44][45][46]. However, the relation between criticality and intelligence is not yet clear. Criticality applies to the resistance of nanowire networks. For example, if the resistance is too high, an electrical signal will vanish soon after its release. Likewise, if the resistance is too low, a single signal can cause an avalanche of signals propagating through the whole network. The critical state of the network is positioned in the middle of these two extreme cases. The critical resistance of nanowire networks is optimal for information processing[12]. Advanced knowledge in critical systems would help to monitor capabilities of neuromorphic hardware.

4. Mapping AI alignment work onto neuromorphic hardware

The most successful models among recent publications are massively scaled software architectures[47][48][49]. Naturally, the efforts of AI alignment are focused on large software architectures as well. However, a paradigm shift in computing hardware toward NMH could reshape the AI landscape in unexpected ways. This shift would be accelerated as modern computing hardware encounters physical bottlenecks. Meanwhile, computation with physical systems—like nanowire networks—possibly yields higher efficiency in execution time, energy, and data (as discussed in section 1.1). Such a shift would require AI safety research to widen the software-focused scope to include hardware predictability.

In a recent review[2], members of industry and academia present the state-of-the-art in neuromorphic computing. They only dedicate a single paragraph within 97 pages to the emergence of misaligned AI as a concern. This suggests that AI safety is still neglected in neuromorphic computing research.

We argue investments in NMH are likely due to the prospects of higher energy efficiency, faster computation, and cheaper manufacturing. If industry funding grows, we expect profit maximizing incentives to favor prioritization of capability over safety[50]. Facing concerns mentioned in section 2, we recommend funding existing capability researchers to work on safety as well as collaborating with the existing safety community where beneficial. This would ensure that work in domains such as hardware interpretability is supported by a deep technical knowledge base of the relevant systems, and that current safety researchers make only limited trade offs against urgent work in relatively mature AI software fields.

5. Counter Arguments

NMH is developing too slowly to matter. Other developments will determine the course of AGI prior to NMH becoming practical for real world tasks.

Short response: We welcome work by technological forecasting experts to assess both the likelihood and impact of NMH capability becoming competitive with conventional approaches over relevant timelines for AGI. To our knowledge an assessment of this type has yet to be published.

We will potentially be able to simulate NMH with classical hardware. Thus, safety research of NMH is directly mappable to safety of classical hardware established research. NMH safety does not require explicit focus.

Short response: The simulation of physical systems at scale is often not feasible. Additionally, the training framework PAT is not directly mappable to deep neural networks[21]. Even an ideal simulation of NMH would require novel interpretability methods.

We mention self-assembly mechanisms that enable network plasticity as a key concern for AGI safety. However, adaptive plasticity is not supported by experimental results yet. Until that is the case, dedicating research effort to NMH is not worth the opportunity cost.

Short response: In line with our response to counter argument 1, we welcome an assessment of the cost-benefit for further work in this domain. We expect that the rate of progress on development of network plasticity as described here is highly uncertain.

As of today, most neuromorphic networks are exclusively produced in specialized labs. During further research we could find major requirements in manufacturing that complicate the cheap and accessible production of self-assembling neuromorphic computing chips.

Short response: This is likely to be the case where creation of networks strictly requires the use of expensive equipment or techniques such as electron microscopes or lithography. However, in the case of molecular biology there is a trend toward democratization of techniques previously only achievable by the most capable and well resourced labs. This process can start with a goal of facilitating replication of results by other scientists through ‘productization’ of techniques as realized through relatively easy to use, open source software and wet lab kits. Commercialisation of useful research technology potentially follows, finally making the jump into full accessibility when kits are openly sold to hobbyists. If nanowires prove not to require expensive manufacturing equipment at a useful or interesting stage of development, a similar process may occur.

- ^

Write a message to us by clicking on the author name below the main title.

- ^

D. V. Christensen et al., “2022 roadmap on neuromorphic computing and engineering,” Neuromorphic Comput. Eng., vol. 2, no. 2, p. 022501, May 2022, doi: 10.1088/2634-4386/ac4a83.

- ^

Node density. All node densities have been converted to units of 1/mm2 for comparability. For example, the three dimensional node density of the human brain has been converted to a two-dimensional value with (1/mm3)2⁄3.

- ^

Connections per node. For the brain we use the average number of synaptic connections per neuron. For atomic switching networks we use the number of directly connected junctions per single junction. For GPUs we use the fan-out value per logic gate.

- ^

Computational Activity. Product of node density and signal rate.

- ^

Comparability of energy consumption among hardware. The energy consumption of the human brain was calculated by

using 20 Watts of power consumption for an average brain[20]. For interconnected nanowire networks, only values for single input pulses of 10 ms duration (not repetitive spikes) are available. The energy consumption per was calculated by total energy per input pulse#junctions. We expect a more comparable value once energy consumption data is available for nanowire networks with repetitive pulses. An isolated synaptic operation of an artificial synapse requires 10-15 Joule per operation[51]. This indicates potential for further increase in energy efficiency for nanowire networks.

The algorithms of NMH and modern computing hardware are not necessarily isomorphic (able to represent each other). Thus, the energy consumption of elementary operations of NMH and GPUs are not directly comparable in general. We compare the energy consumption w.r.t. an image classification task. López-Randulfe et al.[52] determine the number of elementary operations required to classify handwritten digits from the MNIST dataset. They benchmark both a Spiking Neural Network (SNN, a simulation of NMH) and a Convolutional Neural Network (CNN). We arrive at a conversion rate of 1 synaptic operation ≈ 10 FLOPs. Note the conversion rate could vary for other benchmarks.We chose nanowires to represent NMH in Table 1, as they incorporate multiple neuromorphic features. If you are interested in an overview of brain-inspired technology we recommend the review by Christensen et al.[2].

- ^

J. Kelly and M. Hawken, “Quantification of neuronal density across cortical depth using automated 3D analysis of confocal image stacks,” Brain Struct. Funct., vol. 222, Sep. 2017, doi: 10.1007/s00429-017-1382-6.

- ^

B. Wang et al., “Firing Frequency Maxima of Fast-Spiking Neurons in Human, Monkey, and Mouse Neocortex,” Front. Cell. Neurosci., vol. 10, p. 239, Oct. 2016, doi: 10.3389/fncel.2016.00239.

- ^

F. A. C. Azevedo et al., “Equal numbers of neuronal and nonneuronal cells make the human brain an isometrically scaled-up primate brain,” J. Comp. Neurol., vol. 513, no. 5, pp. 532–541, Apr. 2009, doi: 10.1002/cne.21974.

- ^

D. A. Drachman, “Do we have brain to spare?,” Neurology, vol. 64, no. 12, pp. 2004–2005, Jun. 2005, doi: 10.1212/01.WNL.0000166914.38327.BB.

- ^

H. Sillin et al., “A theoretical and experimental study of neuromorphic atomic switch networks for reservoir computing,” Nanotechnology, vol. 24, p. 384004, Sep. 2013, doi: 10.1088/0957-4484/24/38/384004.

- ^

G. Milano et al., “In materia reservoir computing with a fully memristive architecture based on self-organizing nanowire networks,” Nat. Mater., vol. 21, no. 2, pp. 195–202, Feb. 2022, doi: 10.1038/s41563-021-01099-9.

- ^

A. Diaz-Alvarez et al., “Emergent dynamics of neuromorphic nanowire networks,” Sci. Rep., vol. 9, no. 1, p. 14920, Dec. 2019, doi: 10.1038/s41598-019-51330-6.

- ^

G. Milano et al., “Brain‐Inspired Structural Plasticity through Reweighting and Rewiring in Multi‐Terminal Self‐Organizing Memristive Nanowire Networks,” Adv. Intell. Syst., vol. 2, no. 8, p. 2000096, Aug. 2020, doi: 10.1002/aisy.202000096.

- ^

“NVIDIA A100 PCIe 80 GB Specs,” TechPowerUp. https://www.techpowerup.com/gpu-specs/a100-pcie-80-gb.c3821 (accessed Dec. 23, 2022).

- ^

“Fan-out,” Wikipedia. Sep. 07, 2022. Accessed: Dec. 01, 2022. [Online]. Available: https://en.wikipedia.org/w/index.php?title=Fan-out&oldid=1109096955

- ^

G. Milano, S. Porro, I. Valov, and C. Ricciardi, “Recent Developments and Perspectives for Memristive Devices Based on Metal Oxide Nanowires,” Adv. Electron. Mater., vol. 5, no. 9, p. 1800909, 2019, doi: 10.1002/aelm.201800909.

- ^

F. Tomassoni-Ardori, Z. Hong, G. Fulgenzi, and L. Tessarollo, “Generation of Functional Mouse Hippocampal Neurons,” Bio-Protoc., vol. 10, no. 15, pp. e3702–e3702, Aug. 2020.

- ^

F. Tomassoni-Ardori et al., “Rbfox1 up-regulation impairs BDNF-dependent hippocampal LTP by dysregulating TrkB isoform expression levels,” eLife, vol. 8, p. e49673, Aug. 2019, doi: 10.7554/eLife.49673.

- ^

V. Balasubramanian, “Brain power,” Proc. Natl. Acad. Sci. U. S. A., vol. 118, no. 32, p. e2107022118, Aug. 2021, doi: 10.1073/pnas.2107022118.

- ^

L. G. Wright et al., “Deep physical neural networks trained with backpropagation,” Nature, vol. 601, no. 7894, Art. no. 7894, Jan. 2022, doi: 10.1038/s41586-021-04223-6.

- ^

Reservoir computing utilizes the random network structure as a black box within a predictive algorithm[53]. Nanowire networks successfully perform image recognition with reservoir computing[12]. Embedding the unknown network structure into AI poses a major challenge to model interpretability. The combination of nanowires and shallow crossbar array chips successfully performs image recognition[12], speech recognition[54] and other tasks[55][11]. We argue nanowires will likely develop as independent inference devices, initially trained by an external digital trainer.

- ^

“How microchips are made | ASML.” https://www.asml.com/en/technology/all-about-microchips/how-microchips-are-made (accessed Oct. 09, 2022).

- ^

M. Pfeiffer and T. Pfeil, “Deep Learning With Spiking Neurons: Opportunities and Challenges,” Front. Neurosci., vol. 12, 2018, Accessed: Oct. 10, 2022. [Online]. Available: https://www.frontiersin.org/articles/10.3389/fnins.2018.00774

- ^

C. Lee, S. S. Sarwar, P. Panda, G. Srinivasan, and K. Roy, “Enabling Spike-Based Backpropagation for Training Deep Neural Network Architectures,” Front. Neurosci., vol. 14, 2020, Accessed: Oct. 10, 2022. [Online]. Available: https://www.frontiersin.org/articles/10.3389/fnins.2020.00119

- ^

T. C. Wunderlich and C. Pehle, “Event-based backpropagation can compute exact gradients for spiking neural networks,” Sci. Rep., vol. 11, no. 1, Art. no. 1, Jun. 2021, doi: 10.1038/s41598-021-91786-z.

- ^

K. Roy, A. Jaiswal, and P. Panda, “Towards spike-based machine intelligence with neuromorphic computing,” Nature, vol. 575, no. 7784, Art. no. 7784, Nov. 2019, doi: 10.1038/s41586-019-1677-2.

- ^

N. Imam and T. A. Cleland, “Rapid online learning and robust recall in a neuromorphic olfactory circuit,” Nat. Mach. Intell., vol. 2, no. 3, Art. no. 3, Mar. 2020, doi: 10.1038/s42256-020-0159-4.

- ^

P. Zuliani, A. Conte, and P. Cappelletti, “The PCM way for embedded Non Volatile Memories applications,” 2019 Symp. VLSI Technol., pp. T192–T193, 2019, doi: 10.23919/VLSIT.2019.8776502.

- ^

“LaneSNNs: Spiking Neural Networks for Lane Detection on the Loihi Neuromorphic Processor,” DeepAI, Aug. 03, 2022. https://deepai.org/publication/lanesnns-spiking-neural-networks-for-lane-detection-on-the-loihi-neuromorphic-processor (accessed Oct. 10, 2022).

- ^

“Von Neumann architecture,” Wikipedia. Sep. 16, 2022. Accessed: Oct. 10, 2022. [Online]. Available: https://en.wikipedia.org/w/index.php?title=Von_Neumann_architecture&oldid=1110615949#Von_Neumann_bottleneck

- ^

Z. Sun and R. Huang, “Time Complexity of In-Memory Matrix-Vector Multiplication,” IEEE Trans. Circuits Syst. II Express Briefs, vol. 68, no. 8, pp. 2785–2789, Aug. 2021, doi: 10.1109/TCSII.2021.3068764.

- ^

A. Sebastian, M. Le Gallo, R. Khaddam-Aljameh, and E. Eleftheriou, “Memory devices and applications for in-memory computing,” Nat. Nanotechnol., vol. 15, no. 7, Art. no. 7, Jul. 2020, doi: 10.1038/s41565-020-0655-z.

- ^

S. Ambrogio et al., “Equivalent-accuracy accelerated neural-network training using analogue memory,” Nature, vol. 558, no. 7708, Art. no. 7708, Jun. 2018, doi: 10.1038/s41586-018-0180-5.

- ^

M. Dazzi, A. Sebastian, L. Benini, and E. Eleftheriou, “Accelerating Inference of Convolutional Neural Networks Using In-memory Computing,” Front. Comput. Neurosci., vol. 15, 2021, Accessed: Dec. 17, 2022. [Online]. Available: https://www.frontiersin.org/articles/10.3389/fncom.2021.674154

- ^

A. Boroumand et al., “Google Workloads for Consumer Devices: Mitigating Data Movement Bottlenecks,” ACM SIGPLAN Not., vol. 53, pp. 316–331, Mar. 2018, doi: 10.1145/3296957.3173177.

- ^

C. S. Dunham et al., “Nanoscale neuromorphic networks and criticality: a perspective,” J. Phys. Complex., vol. 2, no. 4, p. 042001, Dec. 2021, doi: 10.1088/2632-072X/ac3ad3.

- ^

J. Wei et al., “Emergent Abilities of Large Language Models.” arXiv, Oct. 26, 2022. doi: 10.48550/arXiv.2206.07682.

- ^

T. Henighan et al., “Scaling Laws for Autoregressive Generative Modeling.” arXiv, Nov. 05, 2020. doi: 10.48550/arXiv.2010.14701.

- ^

J. Kaplan et al., “Scaling Laws for Neural Language Models.” arXiv, Jan. 22, 2020. doi: 10.48550/arXiv.2001.08361.

- ^

G. Branwen, “The Scaling Hypothesis,” May 2020, Accessed: Oct. 08, 2022. [Online]. Available: https://www.gwern.net/Scaling-hypothesis

- ^

“When will singularity happen? 995 experts’ opinions on AGI.” https://research.aimultiple.com/artificial-general-intelligence-singularity-timing/ (accessed Dec. 18, 2022).

- ^

S. Russell, “Artificial Intelligence and the Problem of Control,” Perspect. Digit. Humanism, p. 19, 2022.

- ^

L. Cocchi, L. L. Gollo, A. Zalesky, and M. Breakspear, “Criticality in the brain: A synthesis of neurobiology, models and cognition,” Prog. Neurobiol., vol. 158, pp. 132–152, Nov. 2017, doi: 10.1016/j.pneurobio.2017.07.002.

- ^

R. V. Solé et al., “Criticality and scaling in evolutionary ecology,” Trends Ecol. Evol., vol. 14, no. 4, pp. 156–160, Apr. 1999, doi: 10.1016/S0169-5347(98)01518-3.

- ^

S. Shirai et al., “Long-range temporal correlations in scale-free neuromorphic networks,” Netw. Neurosci., vol. 4, no. 2, pp. 432–447, Apr. 2020, doi: 10.1162/netn_a_00128.

- ^

A. Ramesh, P. Dhariwal, A. Nichol, C. Chu, and M. Chen, “Hierarchical Text-Conditional Image Generation with CLIP Latents.” arXiv, Apr. 12, 2022. doi: 10.48550/arXiv.2204.06125.

- ^

J. Devlin, M.-W. Chang, K. Lee, and K. Toutanova, “BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding.” arXiv, May 24, 2019. doi: 10.48550/arXiv.1810.04805.

- ^

J. Jumper et al., “Highly accurate protein structure prediction with AlphaFold,” Nature, vol. 596, no. 7873, Art. no. 7873, Aug. 2021, doi: 10.1038/s41586-021-03819-2.

- ^

S. Russell, “If We Succeed,” Daedalus, vol. 151, no. 2, pp. 43–57, May 2022, doi: 10.1162/daed_a_01899.

- ^

W. Xu, S.-Y. Min, H. Hwang, and T.-W. Lee, “Organic core-sheath nanowire artificial synapses with femtojoule energy consumption,” Sci. Adv., vol. 2, no. 6, p. e1501326, Jun. 2016, doi: 10.1126/sciadv.1501326.

- ^

J. López-Randulfe, N. Reeb, and A. Knoll, “Conversion of ConvNets to Spiking Neural Networks With Less Than One Spike per Neuron,” in 2022 Conference on Cognitive Computational Neuroscience, San Francisco, 2022. doi: 10.32470/CCN.2022.1081-0.

- ^

G. Tanaka et al., “Recent advances in physical reservoir computing: A review,” Neural Netw., vol. 115, pp. 100–123, Jul. 2019, doi: 10.1016/j.neunet.2019.03.005.

- ^

S. Lilak et al., “Spoken Digit Classification by In-Materio Reservoir Computing With Neuromorphic Atomic Switch Networks,” Front. Nanotechnol., vol. 3, 2021, Accessed: Nov. 18, 2022. [Online]. Available: https://www.frontiersin.org/articles/10.3389/fnano.2021.675792

- ^

E. C. Demis, R. Aguilera, K. Scharnhorst, M. Aono, A. Z. Stieg, and J. K. Gimzewski, “Nanoarchitectonic atomic switch networks for unconventional computing,” Jpn. J. Appl. Phys., vol. 55, no. 11, p. 1102B2, Sep. 2016, doi: 10.7567/JJAP.55.1102B2.

Normally when I hear “neuromorphic computing,” I think of custom chips, not reservoir computing. I guess that’s the “self-assembled” part?

Does anyone more into the hardware side of things know about the actual competitiveness of the approaches advertised here?

I actually believe this is important, and think that AGI becomes vastly easier if neuromorphic chips are widely used (of course it would be a holy grail if general quantum computers could actually be built.)