My Failed AI Safety Research Projects (Q1/Q2 2025)

This year I’ve been on sabbatical, and have spent my time upskilling in AI Safety. Part of that is doing independent research projects in different fields.

Some of those items have resulted in useful output, notably A Toy Model of the U-AND Problem, Do No Harm? and SAEs and their Variants.

And then there are others that I’ve just failed fast, and moved on.

Here I write up those projects that still have something to say, even if it’s mostly negative results.

LLM Multiplication

Inspired by @Subhash Kantamneni’s Language Models Use Trigonometry to Do Addition, I was curious if LLMs would use a similar trick for multiplication, after an appropriate log transform.

I adapted the original code to this case and ran it with various parameters. I also designed a few investigations of my own. Notably, the original code relied on DFT which was not appropriate for a floating point case.

The original paper looked at early-layer activations of the tokens “1” to “360″, and looked for structure that related to the numerical value of the token. They found a helix, i.e. they found a linear direction that increased linearly with the token value, and several size two subspaces that each encoded the token value as an angle, with various different angular frequencies.

Using similar techniques, I found a linear direction corresponding to log(token value). This emerged via PCA techniques as a fairly high eigenvalues, so there it feels like fairly strong evidence. That said, I couldn’t find significant impact via ablation studies. I found no evidence for an equivalent of angular encoding for log(token value).

On reflection, I think multiplication is probably not handled equivalently to addition. There’s a couple of reasons why:

Performing log/exp transforms is not easy for ReLU based models[1]

Multiplication has much larger result values, so the single-token approach taken by this paper is less valuable.

There are a number of “natural frequencies” in numbers, most notably mod 10, which are useful for things other than clock arithmetic. E.g. anthropic finds a feature for detecting the rightmost digit of a number, which almost certainly re-uses the same subspace. There’s no equivalent for logarithms.

· Brief Writeup · Code ·

Toy Model of Memorisation

After my success with Computational Superposition in a Toy Model of the U-AND Problem, I wanted to try toy models to a harder problem. The takeway from my earlier post was that models often diffuse and overlap calculation across many neurons, which stymies interpretability. I thought this might also explain why it’s so difficult to understand how LLMs memorise factual information. I took a crack at this despite the fact that GDM has spent quite a bit of effort on it and Neel Nanda directly told me that it is “cursed”.

So I made a toy model trained to memorise a set of boolean facts chosen at random, and investigated the circuits and the scaling laws.

I found a characterisation of the problem showing that the circuits formed are like a particular form of matrix decomposition, where the matrix to decompose is the table of booleans needing memorising. There’s lots of nice statistical properties about the decomposition of random matrices that hint at some useful results, but I didn’t find anything conclusive.

This does go some distance explaining why the problem is “cursed”. The learnt behaviour depends on incidental structure—i.e. coincidences occuring in the random facts. So while there might be something to analyse statistically, there isn’t going to be any interpretable meaning behind it, and it’s likely packed so tightly there’s clever trick to recover anything without just running the model.

Then I analysed the scaling laws behind memorisation. I found that typically, the models were able to memorise random bool facts with ~2 bits per parameter of the model. Later I found out that this is a standard result in ML. It’s nice to confirm that this happens in practise, with ReLU, but it’s not a big enough result to merit a writeup. I wasn’t able to find any theoretical construction that approached this level of bit efficiency. I note that this level of efficiency is far from the number of bits actually in a parameter, which probably helps explain why quantisation works[2].

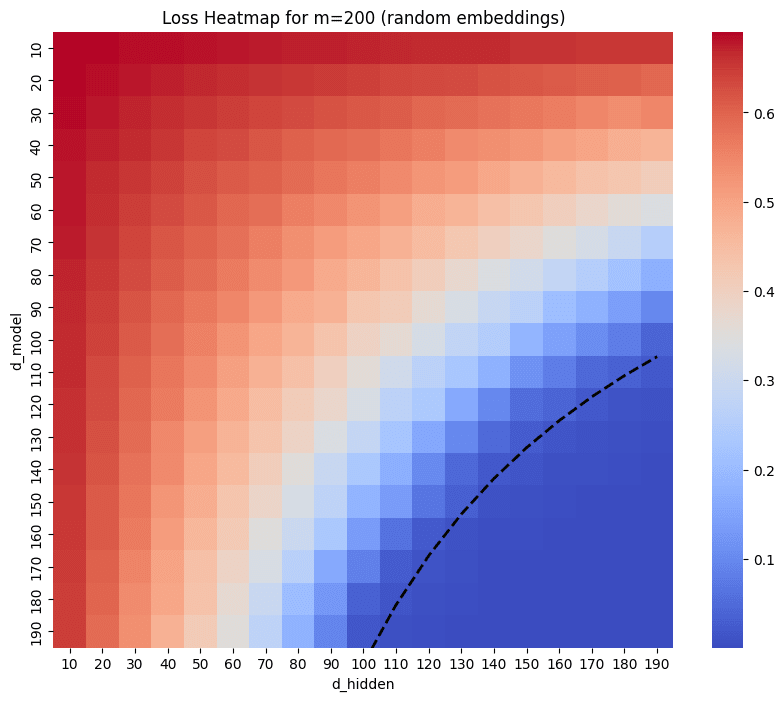

Dotted line shows total parameters.

· Brief Writeup · Code ·

ITDA Investigation

@Patrick Leask gave me an advanced copy on his paper on IDTA SAEs, a new sort of sparse autoencoder that uses a simplified representation and is vastly easier to train.

I played around a bit with their capabilities, and modified Patrick’s code to support cross-coders. I also found a minor improvement that improved their reconstruction loss by 10%. But ultimately I couldn’t convince myself that the quality of the features found by ITDAs was high enough to justify investing more time, particularly as I had several other things on at the time.

· Brief Writeup · Code ·

Strong upvote. Failures are very common and people should write more posts about them. I’m impressed by all the outputs you’ve had in this short period of time. You are set up for success upon return from sabbatical!

Here are some quick thoughts on the post itself:

Sorry, to me the title is slightly misleading; I wouldn’t really consider any of the projects described in this post to be failures unless you’re being exceedingly humble, because you have writeups and code for each of them which is further than most failed projects get. Despite your caveat that these are “those projects which still have something to say”, I’d be even more curious about ones from further down the stack which “died” earlier. When reading posts like these I look for snippets that might be embarrassing for the author, things like, “I made an obvious blunder or bad decision” and I didn’t really find that here, which makes this less relatable (though the short length means this doesn’t detract from the quality of the writing). Perhaps that was a non-goal. The closest might be that bool facts can be memorized with ~2 bits per parameter, but that’s not a trivial result.

That might also appeal to a broader audience since a lot of people are moving into the field and they tend to repeat the same mistakes at first. Some common errors are well known and generic, but other widespread issues are invisible (or noise!) to the people best equipped to address them. But that’s alright if you were aiming this post at a smaller audience that was more senior than you, in contrast to a larger one that was more junior.

Here are some quick thoughts on the projects:

In Appendix A of your Google Doc on multiplication experiments, it would be helpful if the file names were hyperlinks to the jupyter notebooks. You do link to the github at the top, so this isn’t really a big deal since the work is 4 months old at this point and probably won’t have many people taking a look at it. But a few other internal sections link to that appendix, so it’s an attractor for readers to fall into, and if you use this structure for future documents then it’d be natural to want to get to the code linked to the notes.

Only tangentially relevant, but this file https://github.com/BorisTheBrave/llm-addition-takehome/blob/main/notes.txt stood out to me as perfect for prompting base models. It’s been a while since I’ve touched gpt-4-base but this is the sort of thing it was most helpful for back when I was heavily using loom: https://manifold.markets/market/will-an-ai-get-gold-on-any-internat?tab=comments#HcayZchcTfCdFQb0Q6Dr.

(Please correct me if I’m wrong on this) It is surprising to me that you don’t seem to have technical collaborators, except on the do no harm post. This makes sense for the submission to Neel Nanda’s MATS stream, since that is meant to be a test of your personal skills, but for most other codebases I typically see at least 1-2 people besides the project lead making code contributions to the repo. On your website I found a link to a Discord server with 600+ members- surely at least some of them would be excited about teaming up with you? Maybe if you’re especially conscientous you wouldn’t want to risk wasting people’s time, or there’s some inherent tradeoff between networking and problem solving, but teamwork is a valuable skill to develop. Of course, this is a case where people might give you the opposite advice if all of your projects were done in a large group, so take it with a grain of salt. Based on this post alone, if you can share a concrete proposal and plan for future work, I’d be happy to hop on a call and connect you to individuals or programs which might be a good fit to support your research.

We recently published some research comparing ITDA and SAEs for finding features in diffusion models: https://arxiv.org/abs/2505.24360. I’m not first author on this work, and I don’t fully understand our results since my role in meetings was mostly minor tasks such as helping to run experiments or fix citations, but it might be worth skimming over in case you’re still curious about what other people have been doing with the method.

Finally, this section made me laugh:

Yes, I’ve struggled for collaborators—I tried to join some projects, but was always scuppered by scheduling conflicts. And my discord is full of game dev and procedural art enthusiasts, not AI safety experts. I started all these projects just intending to learn, so I wasn’t too focussed on getting any serious output.

I’ve joined MATS now so I am getting more into a collaborative mode and have plenty to occupy me for the time, but thank you for the offer. But I would like to know how you got involved with that ITDA work?

Thanks for reading the projects in such depth, I honestly didn’t expect anyone would.

Congratulations on MATS!

Patrick shared a draft with Stepan Shabalin, who shared it with me. We had collaborated on another project earlier https://arxiv.org/abs/2505.03189 which was lead by my former SPAR student Yixiong, so it made sense to work together again.

Oh, not at all, I only took a quick look through everything and could have spent more time on details. Until now, I didn’t even notice that https://github.com/BorisTheBrave/itda-coders was a private repo which I cannot access.