An Introduction To Rationality

This article is an attempt to summarize basic material, and thus probably won’t have anything new for the hardcore posting crowd. It’d be interesting to know whether you think there’s anything essential I missed, though.

Summary

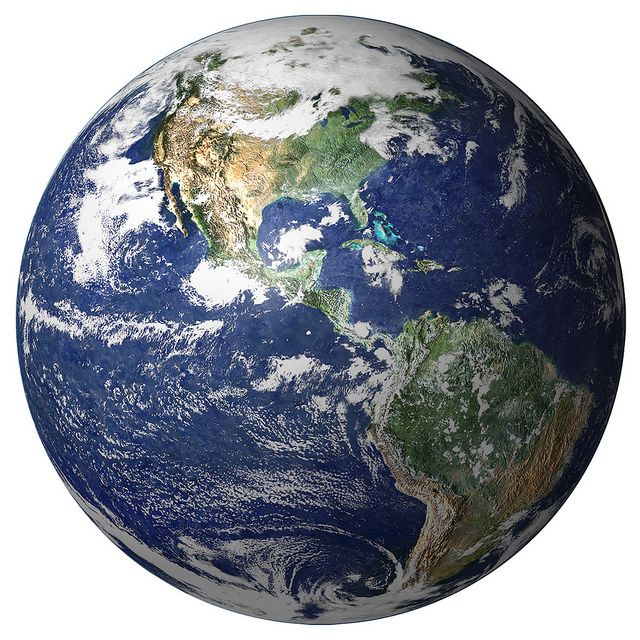

We have a mental model of the world that we call our beliefs. This model does not always reflect reality very well, because our perceptions distort the information coming from our senses. The same is true of our desires: we do not always know accurately why we desire something. It may also be true of other parts of our subconscious. We, as conscious beings, can notice when there is a discrepancy and try to correct for it. This is known as epistemic rationality. Seen this way, science is a formalised version of epistemic rationality. Instrumental rationality is the act of doing what we value, whatever that may be.

Both forms of rationality can be learnt, and this document aims to convince you that learning them is both a good idea and worth your time.

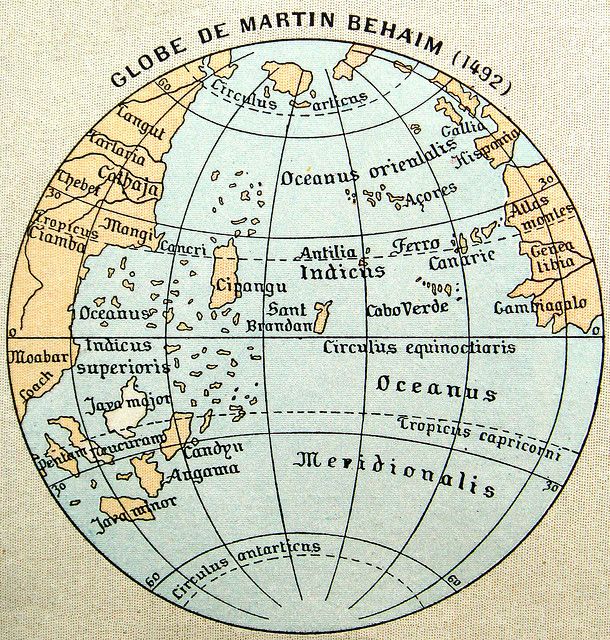

Hill Walker’s Analogy

Reality is the physical hills, streams, roads and landmarks (the territory) and our beliefs are the map we use to navigate them. We know that the map is not the same thing as the territory, but we hope it is accurate enough to tell us useful things about the territory all the same. Errors in the map can easily lead us to do the wrong thing.

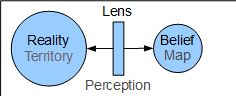

The Lens That Distorts

We see the world through a filter or lens called perception. This filtering is necessary: there is too much information in the world to map fully, and it is very important to recognise certain things quickly. For example, it is useful to recognise that a lion is about to attack you.

To go back to our hill walker’s analogy: we navigate faster with a hill walker’s map than with an aerial photograph. This is because the map has been filtered to show only what walkers commonly need. Too much information can slow our judgement or confuse us. On the other hand, incorrect or missing information is just as bad. If, when out walking, we are told to turn left at the second stream, yet the hill has more streams than the map shows, then we will get lost. The map was not adequate in this situation.

In the same way, our own maps can be wrong because of errors in our perceptual filter. Optical illusions are a nice example of such a distortion between map and territory.

An Example

Light from the sun bounces off our shoelaces, which are untied, and hits our eye (reality). These signals get perceived (the lens) as an untied shoelace, and thus we have the belief (map) that our shoelaces are untied. In this case the territory and map reflect the same thing, but the map contains a condensed version; the exact position of the laces was not deemed important and hence was filtered out by our perception.

Truth

“The sentence ‘snow is white’ is true if, and only if, snow is white.”—Alfred Tarski

What is being said here is that if, in reality, snow is white, then we should believe that ‘snow is white’. In other words, we should try to make our beliefs match reality. Unfortunately, we cannot directly tell whether snow really is white, but given enough evidence we should believe it to be true.

By default, our subconscious believes what it sees; it has to. You have no time to question your belief if a lion is coming towards you. We could not have survived this long as a species if we questioned and tested everything. Yet there are times when we are reproducibly, predictably irrational: we do not update our beliefs correctly based on evidence.

Rationality

- Epistemic rationality: believing, and updating on evidence, so as to systematically improve the correspondence between your map and the territory. The art of obtaining beliefs that correspond to reality as closely as possible. This correspondence is commonly termed “truth” or “accuracy”.

- Instrumental rationality: achieving your values. Not necessarily “your values” in the sense of being selfish values or unshared values: “your values” means anything you care about. The art of choosing actions that steer the future toward outcomes ranked higher in your preferences. Sometimes referred to as “winning”.

These are the two kinds of rationality used here. Epistemic rationality is making the map match the territory. An example of an epistemic rationality error would be believing a cloud of steam is a ghost. Instrumental rationality is about doing what you should, based on what you value. An instrumental rationality error would be working rather than going to your friend’s birthday when your values say you should have gone.

Why Should We Be Rational?

- Curiosity – an innate desire to know things, e.g. “How does that work?”

- Pragmatism – we can better achieve what we want in the world if we understand it better, e.g. “I want to build an aeroplane; therefore I need to know about lift and aerodynamics”, “I want some milk; I need to know whether to go to the fridge or the store”. If we are irrational, our beliefs may lead to a plane crash, or to a walk to the store when there is milk in the fridge.

- Morality – the belief that truth seeking is important to society, and therefore that being rational is a moral requirement.

What Rationality Is And Is Not

Being rational does not mean thinking without emotion. Being rational and being logical are different things. It may be more rational for you to go along with something you believe to be incorrect if it fits with your values.

For example, in an argument with a friend it may be logical to stick to what you know is true, but it may be rational to concede the point and help them, even if you think it is not in their best interest. It all depends on your values, and following your values is part of the definition of instrumental rationality.

If you say “the rational thing to do is X but the right thing is Y” then you are using a different definition for rationality than is intended here. The right thing is always the rational thing by definition.

Rationality also differs for different people. In other words, the same action may be rational for one person and irrational for another. This could be due to:

- Different realities. For example, living in a country with deadly spiders should give you more reason to be afraid of them. Hence the belief that spiders are scary is only rational in certain countries.

- Different values. For example, two people may agree on how unlikely it is to win the lottery, but one may still value the prize enough to enter. Hence playing the lottery can be either rational or irrational depending on your values.

- Incorrect beliefs. If you believe that a light bulb will work without electricity, and you do not have sufficient evidence to support the claim, then your belief is wrong. In fact, if you believe anything without sufficient evidence you are wrong, but what constitutes sufficient evidence depends on your values, and hence is another valid reason for beliefs to differ.

When you meet someone with different beliefs, the difference could be down to the points above, in which case it is worth trying to see the world through their lens to understand how their belief came about. You may still conclude that their belief is wrong, and there is no problem with this: not all beliefs are based in rationality.

An important point in such discussions is to consider whether you actually disagree, or are simply using different definitions. For example, two people may argue about how many people live in New York because each is using a slightly different definition of New York. The same sort of thing happens with the old riddle “if a tree falls in the woods and no one is around to hear it, does it make a sound?”. People may give different answers based on their definition of “makes a sound”, but their expected experiences are generally the same: there are sound waves, but no one perceives the sound.

Science and Rationality

Science is a system of acquiring knowledge based on the scientific method, and the organized body of knowledge gained through such research. In other words, it is the act of testing theories and gaining information from those tests. A theory proposes an explanation for an event; that is all it is. A theory may or may not be useful; a useful theory is one that:

- is logically consistent, and

- can be used as a tool for prediction.

If this sounds familiar from the discussion of rationality, you are correct. Science attempts to obtain a true map of reality using a specific set of techniques. It is in essence a more formalised version of rationality.

A theory is very strongly linked to a belief. Indeed, both should have the two traits listed above: being logically consistent and being a predictor of events. Just like a theory, a belief proposes an explanation for events.

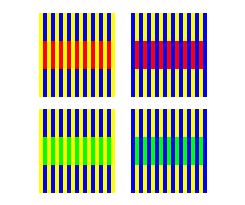

The Lens That Sees Its Flaws

We, as conscious beings, have the ability to correct for the distortion in our perceptual filter. We may not be able to see through an optical illusion (our lens will always be flawed), but we can choose to believe that it is an illusion based on other evidence and our own thoughts.

In the image on the left below (Müller-Lyer illusion) we may see the lines as different lengths, but through other evidence we can choose not to believe what our senses are telling us. The image on the right (Munker illusion) is even harder to believe: the colours that look red and purple in the top part are actually the same, as are the colours that look green and turquoise in the bottom part. I encourage you to check this for yourself. Even after you convince yourself of the illusion, it will still be very hard to see them as the same colour. We may never be able to fix the flaws in our perception, but by being aware of them we can reduce the mistakes we make because of them.

Our Modular Brain

We don’t know what many of our desires are, we don’t know where they come from, and we can be wrong about our own motivations. For example, we may think the desire to give oral sex is for the pleasure of our partner. However, it has been suggested that our bodies create this desire as a test for health, fertility and infidelity. We consciously feel the desire and ascribe a reason to it, but that reason can differ from the subconscious one.

Because of this, we should treat the signals coming from our subconscious as distorted by yet another lens, one that hides much of what is behind it. We (speaking as the conscious) notice our subconscious desires and try to infer why they have occurred. Yet this understanding may be wrong. Again, these flaws in our lens can be corrected. You probably already limit the amount of sugar and fat you eat even though you have a subconscious desire to eat more.

Another example: there are no seriously dangerous spiders in the UK, so there is no rational reason to be afraid of them there. Yet the fear seems to be almost universal. On seeing a spider and feeling afraid, we can recognise that particular flaw in our subconscious reasoning and choose to act differently by not running away.

What should we do?

To be epistemically rational, we must look for systematic flaws in the perceptual filters (lenses) between reality and our brain, as well as within different parts of the brain. We must then train ourselves to recognise and correct them when they occur. Simply talking or thinking about them is not enough; active training in recognising and dealing with such errors is needed.

To be instrumentally rational, we must define our values, updating them as needed, and learn how to achieve them. Simply having well-defined values is not enough; everything we do should work towards achieving them in some way. There are methods that can be learnt to help with achieving goals, and it makes sense to learn them, especially if you are prone to procrastination. The links below are a few examples of such material on procrastination, self-help, and achieving goals.

- http://lesswrong.com/lw/3w3/how_to_beat_procrastination/

- http://lesswrong.com/lw/3nn/scientific_selfhelp_the_state_of_our_knowledge/

- http://lesswrong.com/lw/2p5/humans_are_not_automatically_strategic

One of the most important concepts to grasp is the best way to update our beliefs based on what we experience (the evidence). Thomas Bayes formulated this mathematically: if we were behaving rationally, we would expect our updates to follow Bayes’ Theorem.

Research shows that, in some cases at least, people do not follow this model. Hence, one of our first goals should be to learn to think about evidence in a Bayesian way. See http://yudkowsky.net/rational/bayes for an intuitive explanation of Bayes’ Theorem.
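For reference, here is the standard form of Bayes’ Theorem, where H is a hypothesis (a belief about the territory) and E is the observed evidence. P(H) is the prior, our belief before seeing the evidence, and P(H | E) is the posterior, our belief after updating on it:

$$
P(H \mid E) = \frac{P(E \mid H)\,P(H)}{P(E)}, \qquad
P(E) = P(E \mid H)\,P(H) + P(E \mid \neg H)\,P(\neg H)
$$

The prior P(H) appears on the right-hand side, which is the formal sense in which evidence adjusts an existing belief rather than replacing it.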

One important concept that comes out of the theorem is worth introducing briefly here: evidence must update our existing beliefs, not replace them. If a test comes up positive for cancer, the probability that you have it depends on the accuracy of the test AND the prevalence of cancer in the general population. This is likely to seem strange unless you think about it in a Bayesian way.
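A minimal sketch of the cancer-test calculation in Python. The numbers are illustrative assumptions (a hypothetical test that detects 80% of cancers and gives a false positive 9.6% of the time), not real medical data, and the function name `posterior` is likewise hypothetical. The point is to hold the test fixed and vary only the prevalence.

```python
def posterior(prior: float, sensitivity: float, false_positive_rate: float) -> float:
    """P(cancer | positive test) by Bayes' Theorem.

    prior               -- P(cancer): prevalence in the population (assumed)
    sensitivity         -- P(positive | cancer) (assumed)
    false_positive_rate -- P(positive | no cancer) (assumed)
    """
    # Total probability of a positive result, summed over both possibilities.
    p_positive = sensitivity * prior + false_positive_rate * (1 - prior)
    # Fraction of all positive results that come from people who have cancer.
    return sensitivity * prior / p_positive


if __name__ == "__main__":
    # Hypothetical test: same accuracy every time, only the prevalence changes.
    for prevalence in (0.001, 0.01, 0.1):
        p = posterior(prevalence, sensitivity=0.80, false_positive_rate=0.096)
        print(f"prevalence {prevalence:.1%}: P(cancer | positive) = {p:.1%}")
```

With a 1% prevalence, a positive result raises the probability of cancer to only about 7.8%, because most positive results come from the much larger group of healthy people. The test result updates the prior; it does not replace it.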

Summary

- The physical world is reality.

- Inside our brains we have beliefs.

- Our beliefs are meant to mirror reality.

- A good belief and a good scientific theory are:

  - Logically consistent – fits in with every other good belief.

  - A predictor of events – helps you predict the future and explain past events.

- Our perception of the world can distort our beliefs.

- We can change how we perceive things through conscious thought.

- This can reduce the error between reality and our beliefs.

- This is called rationality.

This post would be of much higher utility if some more time was spent working on the flow of the writing. I’m currently introducing some friends to rationality, but I won’t be using this post as it feels clunky and not that engaging, although I think that the bits based on ‘the lens that sees its flaws’ (diagrams of reality, lens, beliefs and optical illusions sections) are great introductions to the subject.

Perhaps try and write more conversationally, as big parts just feel like quite formal lists of statements. Also, maybe include what’s at the top of this post at the top.

I, on the other hand, like the “telegraphic” writing style of the post (for all that I might recommend various tweaks here and there), and am happy to see this material in this form. (Just to list one advantage of such a style, it tends to produce texts with a high degree of “skimmability”).

Lately I’ve been inclining toward the view that, given human psychological diversity, there really is no such thing as too many different pedagogical texts on a given subject. So rather than encouraging this author to change the style of this post, I would sooner encourage them or (more likely) someone else to write another one if the need is felt.

Thanks for the encouragement. I have written another article but I will wait a week to post it. It is again written in a very “telegraphic” style.

Thanks for the feedback.

I wrote this to help me better understand the material when I first came across it. It was sitting doing nothing on my computer for a year and so I decided to just post it. I hope it will be useful as an article for a few beginners.

I agree I should try and make the work more engaging and I have recently read Made to Stick, On Writing Well and Elements of Style to give me ideas on how to improve my writing. I still find it very difficult and time consuming.

Agreed. I don’t really disagree with anything in this post, but I’m not sure why it was posted. It seems to largely deal with concepts that have already been discussed, which is fine—but for introductions/summaries to be good, IMO they have to be highly clear and engaging, which I don’t find this to be.

One of the more critical aspects (feedback) has been glossed over to such a degree that I think the post falls short of its goal of being a good introduction.

I suggest that some editing is in order. I don’t actively discourage another attempt; I suspect most of us have considered writing a “Why this matters and what we mean” post, and while there are other good materials on the site, more good ones probably won’t do much harm.

I could be wrong, but I’m not sure that’s what Tarski was talking about. I think this quote has more to do with getting at the nature of the relationship between a proposition (snow is white) and its representation (the string “snow is white”). Beliefs are somewhat irrelevant, or at least thinking about them goes well beyond the point made by the Tarski quote.

The relationship between a proposition and its representation is analogous to the relationship between reality and beliefs about reality. It’s identifying truth as correspondence.

OK, that makes sense as an analogy. I’m not sure the intention of drawing such an analogy comes through in the part of the post I quoted.

I recommend that the second sentence start with “This model”; it’s much clearer that way than using “world” with two different meanings.

I haven’t read the whole thing yet, but do you cover limitations of the senses as well as distortions?

Thanks.

I don’t really cover limitations of senses. It’s an important thing but maybe for another article.

If you’re taking suggestions for corrections, I’d like to make one.

Your definition of “logical” here seems to be “ideal in a world of perfectly rational agents.” I think that, for most people, ‘logical’ would already be a loaded term that’s somewhat overlapped with the term ‘rational’, and allowing people to trade saying “That’s rational but not right” for “That’s logical but not right” may not be ideal (or logical, in your parlance).