Agents, Tools, and Simulators

This post was written as part of AISC 2025. It is the first in a sequence we intend to publish over the next several weeks.

Introduction

What policies and research directions make sense in response to AI depends a lot on the conceptual lens one uses to understand the nature of AI as it currently exists. Implicit in the design of products like ChatGPT—and explicit in much of the tech industry’s defense of continued development—is the “tool” lens. That is, AI is a tool that does what users ask of it. It may be used for dangerous purposes, but the tool itself is not dangerous. In contrast, discussion of x-risk by AI safety advocates often uses an “agent” lens. That is, instances of AI models can be thought of as independent beings that want things and act in service of those goals. AI is safe for now while its capabilities are such that humans can keep it under control, but it will become unsafe once it is sophisticated enough to override our best control techniques.

Simulator theory presents a third mental framing of AI and is specifically focused on LLMs. It involves multiple layers: (1) a training process that, by the nature of its need to compress language, generates (2) a model that disinterestedly simulates a kind of “laws of physics” of text generation, which in turn (sometimes) generates (3) agentic simulacra (most notably characters), which determine the text that the user sees.

This post will expand on the meaning of each of these lenses, starting by treating them as idealized types and then considering what happens when they blend together. Future posts in this series will consider the implications of each lens with respect to alignment, how consistent each lens is with how LLM-based AI actually works, the assumptions underlying this analysis, and finally predictions of how we expect the balance of applicability of each lens to shift in response to future developments.

Agent

An agent is an entity whose behavior can be compressed into its consequences. As an agent’s capability increases, one should expect it to engage in increasingly sophisticated behavior that achieves its goals increasingly often. For example, RL produces agentic models whose goals converge towards those of the training process as the training environment becomes more complex. Properties that make an entity more agentic include:

Goals can be expressed as a coherent set.

Goals persist across different contexts.

Takes instrumental steps that it does not value intrinsically, including circumventing obstacles.

One might object to the first property on the grounds that it excludes humans—or at least makes us an impure case of agency. For the sake of maintaining a coherent definition, we are choosing to bite this bullet and say that, yes, humans are an impure example of agents—despite being a paradigm case motivating the formation of agents as a concept in the first place—because our behavior cannot be fully compressed into its consequences.

The importance of instrumentality can be seen by considering the case of water. Water exhibits the first two properties of agency when it follows mechanistically complex behavior that can be well-compressed into outcomes, such as flowing downhill. But water exhibits zero instrumentality, so it does not make sense to think of it as an agent.

One common but non-defining characteristic of agents is that they tend to recursively form subgoals that are in service of their terminal goals. These subgoals, initially formed for instrumental reasons, become goals in themselves, which can pull against the original goals that formed them (particularly when the agent’s context changes) via mesa-optimization. A familiar example is biological life developing internal drives as a result of, but sometimes acting in opposition to, the evolutionary selection pressure towards inclusive genetic fitness. A desire to accumulate money or power, initially instrumentally and then as an end in itself (or even at the expense of one’s original terminal goals), is another example of a mesa-optimizer in the context of motivations emerging within a lifetime.

Another thing to note about goals is that they can pull against each other. In such cases, the agent’s expected behavior may not be predictable by any single goal, but by the equilibrium of all its goals held in balance, including whatever creative compromises the agent can find. This dynamic raises questions as to the most useful level of abstraction to describe an agent’s goals: the drives directly motivating the agent or the resulting equilibrium? With respect to applying the agentic lens, coherence remains important. If the purpose of considering an entity’s goals is to predict its behavior, a goal structure that is wildly chaotic and context-dependent to the point of seeming arbitrary from the outside is probably best described through some other means. If switching to a different level of abstraction improves coherence while retaining accuracy, use that; if no perspective yields a coherent means of effectively compressing behavior from consequences then it’s time to consider using a different lens entirely.

Tool

A tool is an entity whose nature can be compressed into the affordances it provides to an agent. The behavior of a tool is wholly determined by the actions of an agent using the tool, the results of which are a combination of the agent’s goals and the tool’s affordances. Properties that make an entity more tool-like include:

Inert without the presence of an agent.

Extension of the agent’s intention.

Predictable by an agent capable in its use.

Notice that this definition does not actually describe the entity itself, but rather the relationship between the entity and the agent using it, and thus is context-dependent. A donkey, for example, can be thought of as a tool from the perspective of a farmer—even though the donkey is, in itself, a living being with its own internal drives and nature. Agency and tool-ness can thus overlap when they describe the same entity from different perspectives.

Tool-Agent Overlap

There is, however, an inherent tension between agency and tool-ness. Consider two agents, A and B, where B is a tool of A. Consider also two outcomes, X and Y. In this simplified setup, there are several possible relationships between B and A, described below and summarized in [Table 1]:

| Relationship of B to A | A Wants | B Wants |
| --- | --- | --- |
| Collaborator | X | X |
| Slave | X | Y |
| Aligned | Variable | What A wants |

A and B both happen to want X, with no causal connection, other than perhaps A and B seeking each other out based on shared interests. This is a collaborative relationship. A might think of B as a tool and this perception will yield correct predictions of B’s behavior, but one could just as accurately say that A is a tool of B. Note that this relationship is potentially unstable: as soon as the context changes such that A and B no longer want the same thing, and they do not find some new way in which their interests overlap, then their collaborative relationship will turn into something else.

A wants X and B wants Y. This is an antagonistic relationship. If A coerces B into pursuing X despite B’s preference for Y, then B is acting as a slave to A. A could accurately describe B as a tool, but this relationship is only sustainable for as long as A has sufficient power to maintain coercive control over B.

B wants X because A wants X. That is, B’s wants are a pointer to A’s wants. It follows that if A changes its preference to Y then B’s preference will follow. This arrangement eliminates any potential tension between B’s internally agentic and relationally tool-like nature and is the (unsolved problem of) corrigible alignment.
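The three relationships can be made concrete with a minimal sketch. The `Agent` class and its `own_want` and `principal` fields are hypothetical names introduced here for illustration only; the point is that the aligned case stores a reference to A rather than a copy of A's current preference.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Agent:
    """Hypothetical toy agent with a single preferred outcome."""
    name: str
    own_want: Optional[str] = None        # a preference held directly
    principal: Optional["Agent"] = None   # if set, wants defer to this agent

    @property
    def wants(self) -> Optional[str]:
        # Aligned case: the want is looked up through the principal each
        # time it is needed, so it tracks changes in the principal.
        if self.principal is not None:
            return self.principal.wants
        return self.own_want


a = Agent("A", own_want="X")
collaborator = Agent("B_collaborator", own_want="X")  # happens to want X
coerced = Agent("B_coerced", own_want="Y")            # wants Y, pushed toward X
aligned = Agent("B_aligned", principal=a)             # wants whatever A wants

before = (collaborator.wants, coerced.wants, aligned.wants)
a.own_want = "Y"  # A changes its preference
after = (collaborator.wants, coerced.wants, aligned.wants)
```

Only the aligned B follows A's change of preference. The collaborator keeps wanting X, which is why that relationship is unstable, and the coerced B wanted Y all along. The sketch says nothing about how such a pointer could be built into a real system, which is the unsolved part.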

Another source of tension between agency and tool-ness is that the agency of the tool is inversely proportional to the resolution of control maintained by the user. As the level of abstraction on which the tool operates increases, the user exerts less fine-grained control (cyan line) over its behavior, reducing the ability to specify precise actions or outcomes. At the same time, the tool’s agency (purple line) increases, meaning it takes on more independent decision-making responsibilities rather than simply executing predefined instructions. This shift reduces the amount of direct work required from the user (orange line), as operating on a higher level of abstraction allows for more automated delegation of complex tasks, while also diminishing oversight as the user’s influence becomes less direct.

For example, a spreadsheet operates at a low level of abstraction, requiring the user to manually input formulas and data but also giving them fine-grained control over every calculation. In contrast, a more agentic AI-driven financial assistant could allow the user to define broad objectives, such as “maximize returns while managing risk,” while allowing the AI to manage strategy and implementation. This shift reduces the user’s workload but also diminishes their direct control.

Simulator

A simulator is an entity whose nature can be compressed into the patterns of the system that it models. In contrast to an agent, whose behavior is most succinctly described by the world state it attempts to achieve, a simulator’s behavior is most succinctly described by the rules it applies to change the current world state. Probabilistic sampling is common because it is an effective compression of uncertainty, but not required for something to be a simulator; what matters most is that the system models a generative process. Self-supervised learning produces models that function as simulators because they do not act toward specific goals (like agents) or require an external operator (like tools).

Properties that make an entity more simulator-like include:

Faithful reproduction of dynamics within a defined scope.

Captures patterns rather than memorizing specific examples.

Maintains coherence without fixating on a particular trajectory.

One important property of simulators is that they can instantiate agent-like or tool-like behaviors and switch between these (and other) modalities if the system being simulated contains such behaviors. A system that is acting agentic in one context may thus be a simulator from the perspective of its full range of operation.
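The contrast between a simulator (best described by the rule it applies to the current state) and an agent (best described by the state it tries to reach) can be sketched in a toy one-dimensional world. Everything here is an illustrative assumption: the integer state, the random-walk rule, and the function names are not taken from any particular system.

```python
import random
from typing import Callable, List


def simulator_step(state: int, rng: random.Random) -> int:
    """Apply a rule to the current state: an unbiased random walk."""
    return state + rng.choice([-1, 1])


def agent_step(state: int, goal: int) -> int:
    """Choose the move that brings the state closer to the goal."""
    if state == goal:
        return state
    return state + (1 if goal > state else -1)


def rollout(step: Callable[[int], int], state: int, n: int) -> List[int]:
    """Record the trajectory produced by applying `step` n times."""
    trajectory = [state]
    for _ in range(n):
        state = step(state)
        trajectory.append(state)
    return trajectory


rng = random.Random(0)
sim_path = rollout(lambda s: simulator_step(s, rng), state=0, n=10)
agent_path = rollout(lambda s: agent_step(s, goal=5), state=0, n=10)
```

Knowing the goal is enough to say where `agent_path` ends up, whatever the starting point. For `sim_path`, the useful summary is the rule itself: each step moves by exactly one, with no preferred destination.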

Simulator-Tool Overlap

Simulators can be used as tools to the extent that simulating things is a useful affordance. In common usage, simulators must simulate something external. Water seems very simulator-like, since it follows well-defined rules that can be modelled, such as fluid dynamics. But water itself is not a simulator—it’s the ground truth that a simulator might try to approximate. A weather simulation models atmospheric dynamics but is not itself the weather. A language model generates text consistent with linguistic patterns but is not itself language. Conway’s Game of Life stretches this definitional element since it is based on an arbitrary set of rules, though one could argue that the game is a symbolic representation of the general class of cellular automata.

The property of being a representation of something external, however, defines a simulator in terms of its relationship to another system (as well as to an observer) rather than being a feature of the simulator itself. Given this definitional element, simulators become a subset of tools, such as those used for generating predictions, exploring possible futures, or modeling complex environments to assist decision-making. For this post, we are more interested in the intrinsic properties of simulators and their implications for AI safety, so defining simulators in a way that implies a necessary overlap with tools seems counterproductive for our purposes, but we make no comment here regarding a “correct” definition for other contexts.

Simulator-Agent Overlap

Put simply: a simulator runs a process that emulates another process while an agent aims for an outcome. As with the tool/agent distinction, these meanings are not mutually exclusive, but there is some tension between them.

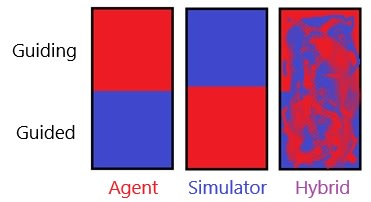

One way in which an entity could be both an agent and a simulator is if both modalities exist in different layers of abstraction [Figure 2, left and middle]. For example: an agent maintains an internal simulation of its environment (because this is instrumentally useful), which includes other agents (because they are part of the environment), who also simulate the environment, including the other agents…

Another potential form of overlap is if the agentic and simulated processes are entangled [Figure 2, right], such as where an entity simulates possible trajectories and then chooses one based on some criteria relating to preferred outcomes. For example, an AI trained to predict economic trends might simulate different futures as well as select trajectories that maximize a specific metric, thus acting as an agent within its own simulation.
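A minimal sketch of this entangled arrangement, under stated assumptions: the noisy growth rule, the 5% step noise, and the "final value" metric are all made up for illustration, and the function names are hypothetical.

```python
import random
from typing import Callable, List


def simulate_trajectory(start: float, steps: int, rng: random.Random) -> List[float]:
    """Simulator part: sample one possible future from a noisy growth rule."""
    value, path = start, [start]
    for _ in range(steps):
        value *= 1 + rng.gauss(0.0, 0.05)  # assumed 5% noise per step
        path.append(value)
    return path


def choose_trajectory(candidates: List[List[float]],
                      metric: Callable[[List[float]], float]) -> List[float]:
    """Agent part: pick the sampled future that scores best on the metric."""
    return max(candidates, key=metric)


def final_value(path: List[float]) -> float:
    """Assumed preference: care only about where the trajectory ends."""
    return path[-1]


rng = random.Random(42)
candidates = [simulate_trajectory(100.0, steps=12, rng=rng) for _ in range(20)]
chosen = choose_trajectory(candidates, final_value)
```

The sampling step has no preference among futures; the selection step has nothing but a preference. The behavior of the combined system depends on both, which is what makes the two aspects hard to disentangle from the outside.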

These ways of describing the agency-simulator relationship are meaningful to the extent that they predict different things about the behavior or (observable) cognitive processes of an entity. On a high level:

To the extent agency and simulation are layered, with agency on top [Figure 2, left], then the entity’s actions will follow those of a pure agent, whose behavior can be predicted by its consequences, to the extent allowed by its capabilities relative to external obstacles. Simulation aspects of the entity will contribute to these capabilities, but not be a relevant factor in what sort of outcomes it seeks out.

To the extent agency and simulation are layered, with simulation on top [Figure 2, middle], then the entity’s agentic aspects will be defined and constrained by the emergent rules of the simulation. This might include an LLM acting in consistently human-like ways—though sufficiently out-of-distribution deviations may become chaotic. In any case, the entity will not persistently follow any coherent and non-arbitrary set of objectives.

To the extent agency and simulation exist side-by-side [Figure 2, right], then the entity’s actions will be a blend of the above two dynamics, with no consistent and effective way to disentangle them. Behavior will be sort-of compressible into outcomes; simulated processes will be sometimes, inconsistently followed.

Application to LLMs

Along the distinctions outlined here, simulator theory describes LLMs as displaying simulator-agent overlap, layered, with simulation on top. The agentic aspects of chatbot behavior are ascribed to simulacra, which are essentially self-sustaining patterns, most notably including characters. The post “Goodbye, Shoggoth: The Stage, its Animatronics, & the Puppeteer – a New Metaphor” visualizes the theory by describing the simulator base model as a stage and seemingly agentic generated simulacra as animatronics. The metaphor goes further by including a puppeteer, produced by fine-tuning on desired behavior, which acts as a source of coherent preferences for the model and seems like an instance of entangled agency and simulation. In sum, LLMs are partially simulation, partially agentic, and the relationship between these aspects is partially layered (with simulation on top) and partially entangled.

This kind of partial entanglement of agency and simulation, described in the metaphor of a puppeteer, may sound like adding epicycles to the theory, but it can be salvaged as a legitimate admission of impurity when applying a conceptual framework to the real world. Entangled agency is introduced into LLMs during the fine-tuning and RLHF processes, in which the model is trained to prefer certain types of output. Simulator theory is not intended to reflect a universal truth of AI; it is architecture-specific [Table 2].

| Architecture | Result |
| --- | --- |
| Reinforcement Learning (RL) | Agent |
| Supervised Learning (SL) | Simulator |
| SL + finetuning + RLHF | Agent/simulator hybrid |
| Future systems | ??? |

What are We?

With these categories in mind, we can test our understanding by considering the case of humans.

Humans exhibit partial agency in that we possess internal drives (hunger, social belonging, curiosity, and so on) that guide our actions. From the perspective of evolution, these drives serve an instrumental purpose: they exist because they contributed to reproductive success in our ancestral environment. From the perspective of the individual human, however, they function as terminal goals. We do not experience hunger as an instrumental step toward genetic replication; we experience it as an intrinsically motivating force. Layered on top of these drives, humans develop specific goals. Some of these goals are directly instrumental, such as the steps in a plan to achieve an outcome; others are more abstract, such as the pursuit of wealth or power as generically useful sources of optionality for unknown future challenges.

At the same time, humans both use and function as simulators. Our cognition depends on rich internal models of the world, which we use to predict the outcomes of our actions and navigate social environments. Our learning process is a mixture of imitation and responding to incentives. Human personality itself is heavily influenced by culturally transmitted archetypes. Psychological theories like Internal Family Systems propose that the human mind contains multiple sub-agents (internalized characters representing different aspects of the self) that must be reconciled for mental well-being. Curiosity, a basic drive, can also be viewed as a mechanism for refining our internal simulation of reality.

From the perspective of simulator-agent overlap, humans seem to be an instance of entangled simulation and agency. At times, our agency directs our simulations, such as when we make plans that we expect will lead to results we want. At other times, our simulations reshape our agency, such as when an imagined future becomes something we genuinely want or when a simulated social role becomes an identity. Our simulator and agentic natures continuously shape and constrain one another, with each predominating in different contexts.

Lenses, like agents and simulators, are useful starting points for analysis, but complex systems like humans resist such neat classification. Categories should clarify thinking, not constrain it; complexity should challenge, not paralyze.


The way I think of LLMs is that the base model is a simulator of a distribution of agents: it simulates the various token-producing behaviors of humans (and groups of humans) producing documents online. Humans are agentic, thus it simulates agentic behavior. Effectively we’re distilling agentic behavior from humans into the LLM simulators of them. Within the training distribution of human agentic behaviors, the next-token prediction objective makes the specific human-like agentic behavior and goals it simulates highly context-sensitive (i.e. promptable).

Instruction-following training (and mental scaffolding) then alters the distribution of behaviors, encouraging the models to simulate agents of a particular type (helpful, honest, yet harmless assistants). Despite this, it remains easy to prompt the model to simulate other human behavior patterns.

So I don’t see simulators and agents as being alternatives or opposites: rather, in the case of LLMs, we train them to simulate humans, which are agents. So I disagree with the word “vs” in your Sequence title: I’d suggest replacing it with “of”, or at least “and”.

I think that we’re making a subtly different distinction from you. We have no issues in admitting that entities can be both simulators and agents, and the situation you’re describing with LLMs we would indeed describe as being a simulation of a distribution of agents.

However, this does not mean that anything which acts agentically is doing so because it is simulating an agent. Taking the example of chess, one could train a neural network to imitate grandmaster moves or one could train it via reinforcement learning to win the game (AlphaZero style). Both would act agentically and try to win the game, but there are important differences: the first will attempt to mimic a grandmaster in all scenarios, including making mistakes if this is a likely outcome, while AlphaZero will in all positions try to win the game. The first is what we call a simulation of an agent, and is what vanilla LLMs do; the second is what we are calling an agent. In this post we argue that modern language models post-trained via reinforcement learning behave more in that fashion (more precisely, we think they behave as agents using the output of a simulator).

We think that this is an important distinction, and describe the differences as applied to safety properties in this post.
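The chess contrast above can be sketched with a toy position. The move names, the expert frequencies, and the win probabilities are all made-up numbers for illustration; no real chess engine or dataset is involved.

```python
import random

# Hypothetical position: how often an expert plays each move, and the
# (assumed) probability that each move leads to a win.
expert_move_freq = {"best": 0.7, "ok": 0.2, "blunder": 0.1}
win_prob = {"best": 0.9, "ok": 0.6, "blunder": 0.1}


def imitator_move(rng: random.Random) -> str:
    """Simulation of an agent: sample moves as the expert would, mistakes included."""
    moves = list(expert_move_freq)
    weights = [expert_move_freq[m] for m in moves]
    return rng.choices(moves, weights=weights, k=1)[0]


def agent_move() -> str:
    """Agent: always pick the move with the highest win probability."""
    return max(win_prob, key=win_prob.get)


rng = random.Random(0)
imitated = [imitator_move(rng) for _ in range(1000)]
blunder_rate = imitated.count("blunder") / len(imitated)
```

The imitator reproduces the expert's blunders at roughly the expert's own rate, because matching the distribution is its objective. The win-seeking policy never plays the blunder, even though the expert sometimes does.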

I completely agree: Reinforcement Learning has a tendency to produce agents, at least when applied to a system that wasn’t previously agentic. Whereas a transformer model trained on weather data would simulate weather systems, which are not agentic. I just think that, in the case of an LLM whose base model was trained on human data, which is currently what we’re trying to align, the normal situation is a simulation of a context-sensitive distribution of agents. If it has also undergone RL, as is often the case, it’s possible that that has made it “more agentic” in some meaningful sense, or at least induced some mode collapse in the distribution of agentic behaviors.

I haven’t yet had the chance to read all of your sequence, and I intend to, including those you link to.

Then I think we agree on questions of anticipated experience? I hope you enjoy the rest of the sequence, we should have a few more posts coming out soon :).

Having now read the sequence up to this point, you pretty much already make all the points I would have made; in retrospect I think I was basically just arguing about terminology.