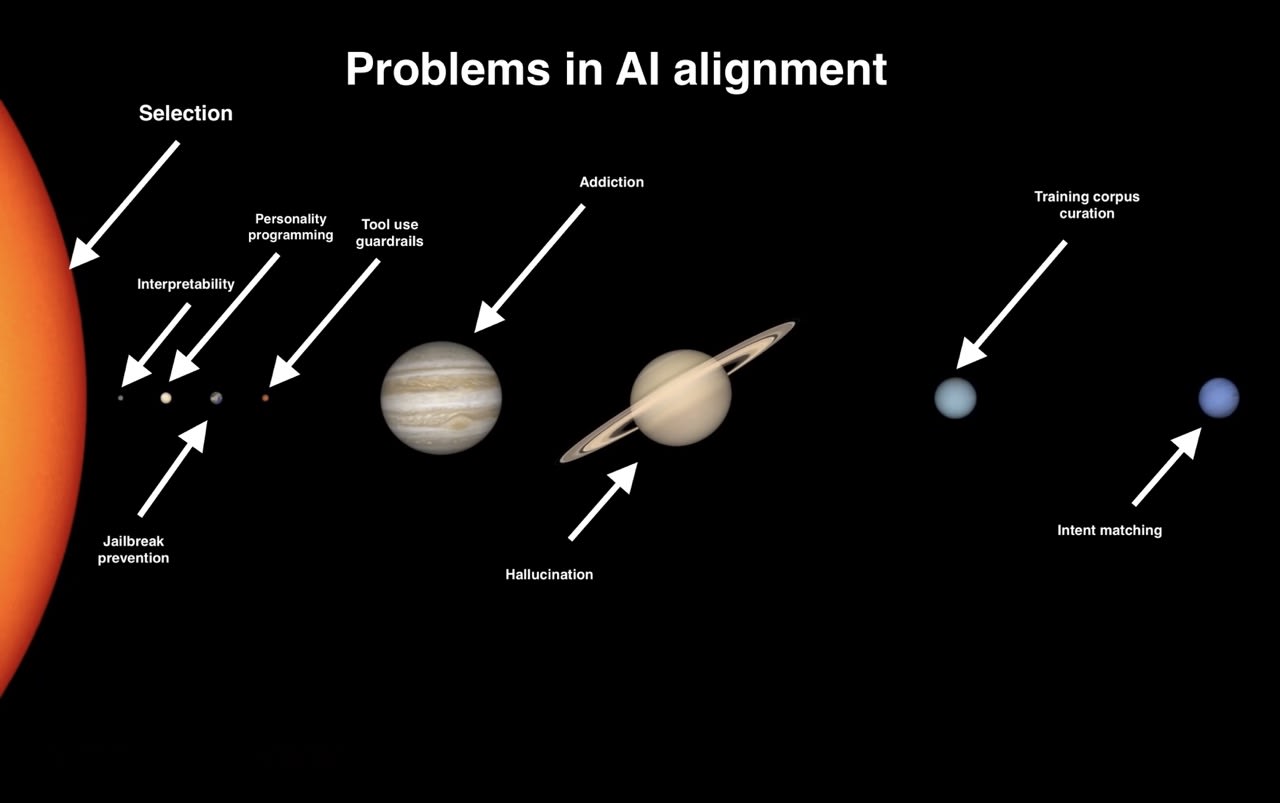

After trying too hard for too long to make sense of what bothers me about the AI alignment conversation, I have settled, in true Millennial fashion, on a meme:

Explanation:

The Wikipedia article on AI Alignment defines it as follows:

> In the field of artificial intelligence (AI), alignment aims to steer AI systems toward a person’s or group’s intended goals, preferences, or ethical principles.

One could observe: we would also like to steer the development of other things, like automobile transportation, or social media, or pharmaceuticals, or school curricula, “toward a person’s or group’s intended goals, preferences, or ethical principles.”

Why isn’t there a “pharmaceutical alignment” or a “school curriculum alignment” Wikipedia page?

I think the answer is that “AI Alignment” has an implicit technical bent to it. If you go on the AI Alignment Forum, for example, you’ll find more math than Confucius or Foucault.

On the other hand, nobody would view “pharmaceutical alignment” (if it were formulated as “[steering] pharmaceutical systems toward a person’s or group’s intended goals, preferences, or ethical principles”) as primarily a problem for math or science.

While there are always things that pharmaceutical developers can do inside the lab to at least try to promote ethical principles – for example, minimizing preventable hazards even when not forced to – we also accept that ethical work is done in large part outside of the lab: in purchasing decisions, in the way that pharmaceutical marketplaces operate, in the vast mess of the medical-industrial-government complex. It’s a problem so diffuse that it hardly makes sense to gather it all into one coherent encyclopedia entry.

The process by which the rest of the world influences the direction of an industry, by way of purchasing, analyzing, regulating, discussing, etc., is Selection. This comes from the terminology of evolution – in this framing, dinosaurs didn’t just decide to start growing wings and flying; Nature selected birds to fill the new ecological niches of the Jurassic period.

While Nature can’t do its selection on ethical grounds, we can, and do, when we select what kinds of companies and rules and power centers are filling which niches in our world. It’s a decentralized operation (like evolution), not controlled by any single entity, but consisting of the “sum total of the wills of the masses,” as Tolstoy put it.

The technical AI alignment problems (represented by the planets in the meme) are surely important, but what happens outside of the lab is just much bigger. AI is a small portion of the world economy, and yet it touches almost all of us. How we select the ways AI touches us is, I want to suggest, the Big Question of AI Alignment. If you care about AI Alignment in general, it is folly to ignore it.

In defense, a Selection-denier could argue that there is no progress to be made in directing the “sum total of the wills of the masses” towards the “group’s intended goals, preferences, or ethical principles.” But that would amount to rejecting the Categorical Imperative, and all the fun (and often very mathy) problems in game theory, and giving up on humanity, and only losers do that.

One sociotechnical protocol that can be applied to improving Selection efficiency is here: https://muldoon.cloud/2025/03/08/civic-organizing.html. But there are many others. This is the big work of AI Alignment. The meme said so.

Because with AI, “if anyone builds it, everyone dies”. This is not true for those other things. It’s not even true for nuclear weapons.

That is a speculative prediction. And definitely not how most people (not least within the AI alignment community) talk about alignment.

Haven’t read the book (and thanks for the link!) but are they even arguing for more alignment research? Or just for a general slowdown/pause of development?

The book isn’t out yet, so you know as much as I do about what’s in it, but the title is a big clue. I doubt there will be much speculation in it, because I’m familiar with the authors’ writings over the years. What matters is not who agrees with them, or how many, but whether it is true. To open the book is to leave the outside view behind.

Short of absolute, superintelligent doom, there are also risks from easy access to bioweapon know-how, deepfakes rendering all news sources everywhere untrustworthy, chatbots crafted as propaganda tools, and everything that anyone can think of that just needs smarts on tap to pull off.

Smarts on tap is good! But it’s a general purpose tool that can be pointed in any direction.

Pharmaceuticals and school curricula present only “obvious” risks: those flowing directly and foreseeably from what they are.