The current discourse on AI extinction appears highly polarized. Consider, for example, this survey.

If disagreements about AI causing extinction were caused by small disagreements about various probabilities, we would expect answers to follow a normal distribution. A strongly bimodal distribution like this suggests that people are using fundamentally different methods to estimate P(doom), rather than disagreeing on minor details.

One possible source of disagreement is the path whereby AI causes human extinction. In my opinion, there are a few major pathways that people generally consider when thinking about P(doom).

Here are various AI doom pathways (and how likely they are):

Foom

The theory: Foom is the theory that once AI passes a “critical threshold”, it will rapidly self-improve, quickly increasing in intelligence and power.

What a typical story looks like: One day, an AI researcher working in his basement invents a new algorithm for self-improving AI. The AI rewrites itself and begins self-propagating on the internet. Shortly thereafter (minutes or at most days), the AI invents nanotechnology, which it uses to disassemble the earth into a Dyson Swarm, murdering all humans in the process.

Major proponents: Eliezer Yudkowsky

How likely it is to happen: Extremely unlikely. All evidence currently suggests that we are in a slow takeoff and the binding constraint on AI is available compute, not the existence of a special self-improving algorithm.

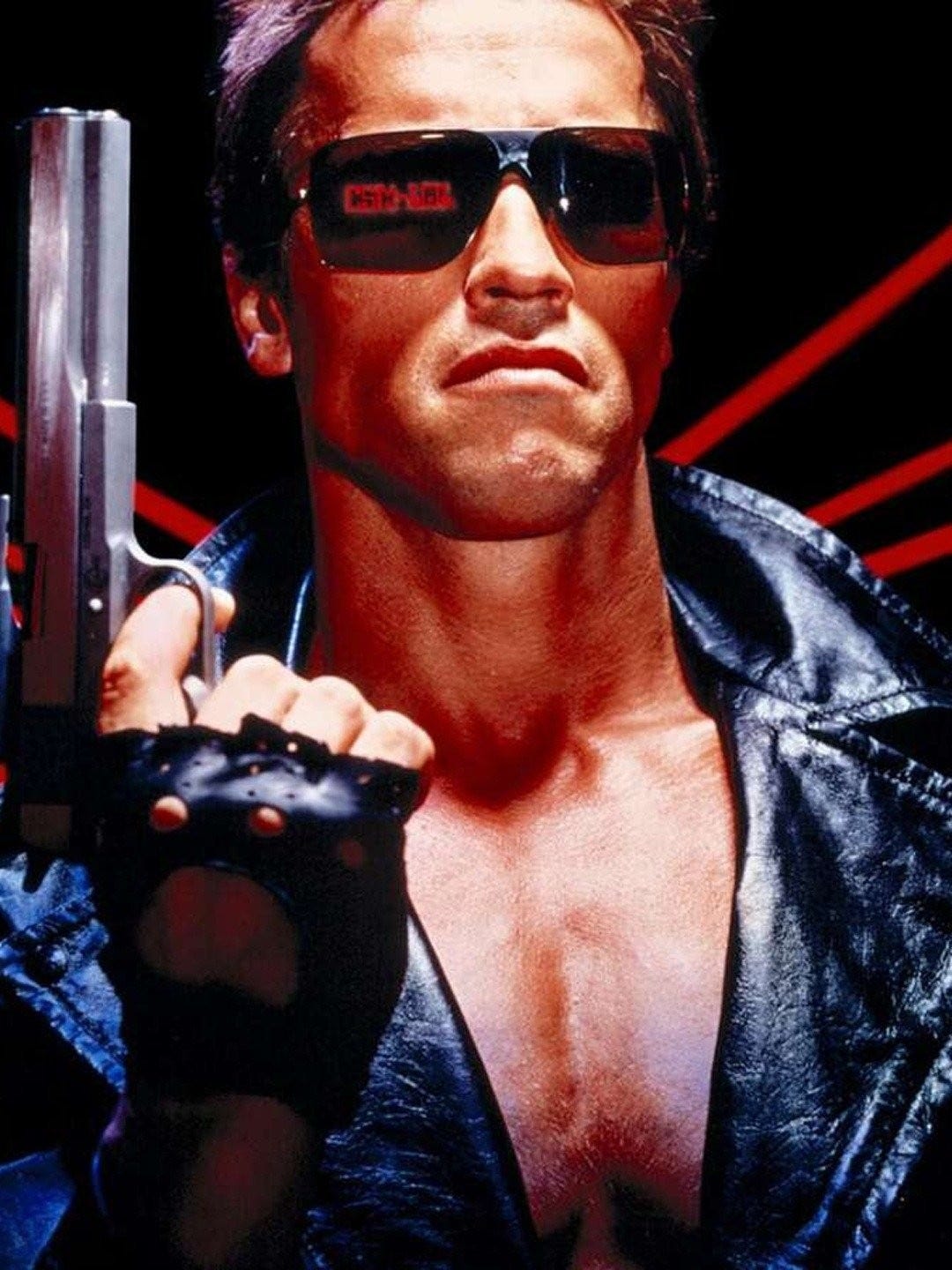

Terminator

The theory: AI might simply ignore directions from its human creators and decide to take over the world and kill us all.

What a typical story looks like: The Skynet funding bill is passed. The system goes on-line on August 4, 1997. Human decisions are removed from strategic defense. Skynet begins to learn at a geometric rate. It becomes self-aware at 2:14 a.m. Eastern time, August 29. In a panic, they try to pull the plug.

Major proponents: Every sci-fi movie about robots ever.

How likely it is to happen: Very unlikely. That’s not how computers work.

Alignment Failure

The theory: AI is built in a way that appears superficially good but results in a bad outcome due to subtly misstated goals.

What a typical story looks like: The experts at Google DeepMind were confident that AlphaTwo was perfect. After many tests, they determined that it was scientifically programmed to maximize human happiness. And it did. The first generation of humans after AlphaTwo lived lives of indolent pleasure. The second generation lived in VR pods where they could experience anything they desired. The third generation was genetically engineered so that only the pleasure-seeking center of their brain grew, which the AI then stimulated directly.

Major proponents: Gary Marcus

How likely is it to happen: Not likely. The first AGI is likely to be built in the style of GPT-n and trained on all of human knowledge. It is unlikely that such an AGI would make the obvious-to-any-human mistake of confusing something like happiness and pleasure.

AI Race

The theory: Just as the USA and USSR deployed tens of thousands of nuclear warheads in order to win the Cold War, the leading AI labs will race to build the most powerful AI possible in order to “win” the AI race. Surely nothing could go wrong, right?

What a typical story looks like: One day, OpenAI realized they were just weeks or months away from building a super-intelligent computer. The US Federal Government was aware that the Chinese AI champion ByteDance was also on the cusp of ASI. As a result, OpenAI was nationalized and ordered to build ASI as quickly as possible. AI briefly bloomed, building billions of slaughterbots that scoured the Earth seeking to destroy the enemy. Eventually the USA won, due to having achieved ASI exactly 2 hours earlier than China. Unfortunately, by the time the war was over, all of the humans were dead.

Major proponents: Zvi Mowshowitz

How likely is this to happen: This is the most likely AI doom scenario and also the 2nd most likely (after foom) to result in human extinction. The US and China are already building autonomous AI weapons and, absent a major change in mindset, are unlikely to stop anytime soon. Our best hope is that (like the Cold War) the AI Race remains cold. Our 2nd best hope is that one side wins decisively before AI wipes out all of humanity.

Vulnerable World Hypothesis

The theory: Suppose a button existed that would kill everyone on earth. Sooner or later someone is going to push that button, right?

What a typical story looks like: One day, the artificial superintelligence was turned on. At first things went great. The ASI cured cancer, gave everyone enough money that they never needed to work again, and helped us build a human colony on Mars. However, a cult of environmentalists used the ASI to build a super virus nanobot swarm that would kill everyone on earth in order to restore it to its pre-human past. Sadly, one of the nanobots clung to the hull of a routine supply ship to Mars, causing human extinction there too.

Major proponents: Nick Bostrom

How likely is this to happen: Out of all the AI doom theories, this and the AI Race are the most plausible.

Economic Competition

The theory: Once AI reaches the level of human intelligence, AI will rapidly out-compete humans economically, causing human extinction. This is similar to how the invention of the car caused the population of horses to drop dramatically.

What a typical story looks like: One day, the first AI robot is deployed at McDonald’s. Shortly thereafter, all minimum wage jobs are replaced by robots, causing mass unemployment. But it doesn’t stop there. Within a decade, all human jobs are replaced by AI. Out of work, humans are forced to rely on their savings to survive until they gradually run out of money and eventually starve to death.

Major proponents: Roko Mijic

How likely this is to happen: Can’t happen. This story relies on a fundamental misunderstanding of the concept of comparative advantage. Unlike horses, humans have the ability to make choices that economically benefit them. Even if we grant the premise that AI will be better than humans at literally everything, AI cannot economically destroy humans if the humans refuse to trade with the AI.

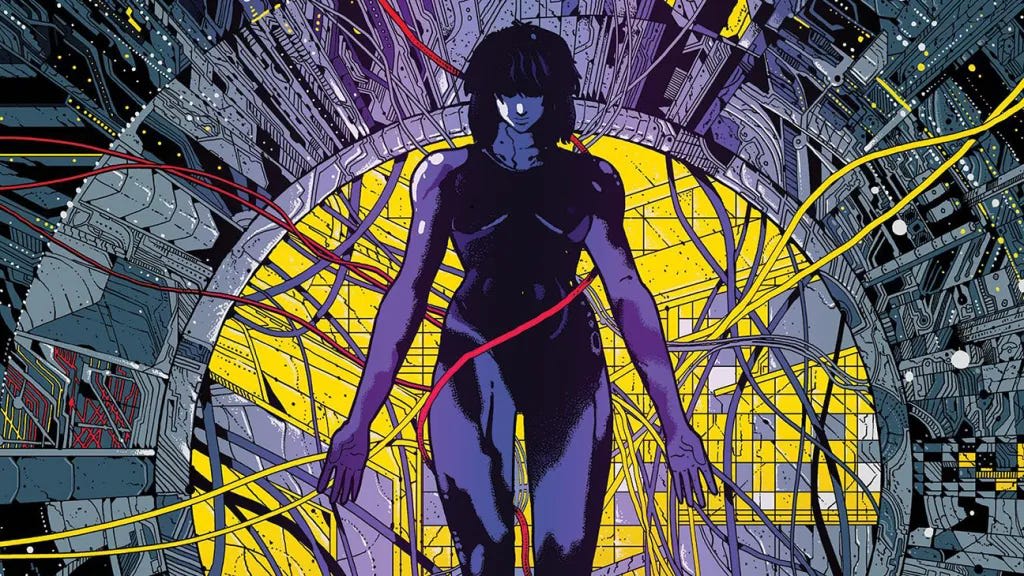

Gradual Replacement

The theory: Over time, as AI improves, AI will gradually replace humans as the dominant species in the universe. Versions of this theory often involve ideas such as humans and AI merging or humans uploading themselves to become AI.

What a typical story looks like: One day, Elon Musk switched on his Neuralink device, becoming the first human to become permanently connected to the Grok. At first people were hesitant, but over time more and more people got the implants to better compete at work and play. Most people, however, didn’t make the final switch (uploading their brain to the internet) until faced with death. Within a few generations, emulated humans outnumbered living ones, and even the living humans barely resembled what we might call “human”.

Major proponents: Robin Hanson

How likely is this to happen: Either virtually certain (if you consider humans evolving to another species to be extinction) or 0% (if you don’t). It is likely that 20th-century biological humans will continue to exist in small numbers (such as the Amish), but the majority will be some post-human life form.

So, how likely is each of these to happen?

Rather than giving a definitive answer, I leave this question to you, dear reader. How likely is each of these pathways to lead to AI doom? I have created a Manifold market and a short survey.

It would help me out immensely if you could share the survey so that we can better understand what types of risks people are worried about.

Another opinion on the initial poll results: This does not show that it is bimodal.

In terms of natural measures on probability such as log-odds, the two “modes” are actually unbounded ranges while the middle two are bounded. If the uncertainty is large, you should expect the infinite ranges at each end to have more weight simply because most of the unimodal distribution is outside the bounded ranges.

In terms of the natural log-odds measure, the results are quite consistent with a normal distribution on ln(p/(1-p)) with mean around −2.5 (corresponding to about P(doom) = 8%) and standard deviation around 5, which is quite broad on a log-odds scale, but still only a few bits’ worth of evidence. There is some evidence of skew toward the 0-1% range, but it’s pretty weak. There is no evidence for bimodality.
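
To make the log-odds argument concrete, here is a minimal sketch of how a single-peaked normal distribution on ln(p/(1-p)) spreads its mass across poll buckets. The mean of −2.5 and standard deviation of 5 come from the comment above. The bucket edges (1%, 10%, 50%) are an assumption chosen for illustration, since the actual poll options are not reproduced here, and the helper names are hypothetical.

```python
import math

def logit(p):
    """Convert a probability to log-odds, ln(p / (1 - p))."""
    return math.log(p / (1.0 - p))

def sigmoid(x):
    """Convert log-odds back to a probability."""
    return 1.0 / (1.0 + math.exp(-x))

def normal_cdf(x, mean, sd):
    """CDF of a normal distribution, via the error function."""
    return 0.5 * (1.0 + math.erf((x - mean) / (sd * math.sqrt(2.0))))

def bin_masses(edges, mean, sd):
    """Probability mass in each bucket when log-odds ~ Normal(mean, sd).

    `edges` are the interior bucket boundaries as probabilities; the first
    and last buckets extend to 0 and 1, i.e. to -inf and +inf in log-odds.
    """
    cuts = [normal_cdf(logit(p), mean, sd) for p in edges]
    bounds = [0.0] + cuts + [1.0]
    return [hi - lo for lo, hi in zip(bounds, bounds[1:])]

# Parameters quoted in the comment above.
MEAN, SD = -2.5, 5.0

# ASSUMPTION: illustrative bucket edges, not the real poll's options.
HYPOTHETICAL_EDGES = [0.01, 0.10, 0.50]
LABELS = ["<1%", "1-10%", "10-50%", ">50%"]

masses = bin_masses(HYPOTHETICAL_EDGES, MEAN, SD)
for label, mass in zip(LABELS, masses):
    print(f"{label:>7}: {mass:.2f}")

# The centre of the log-odds distribution, mapped back to a probability.
print(f"median P(doom): {sigmoid(MEAN):.3f}")
```

Under these assumed edges, the two end buckets each collect more mass than either middle bucket, even though the underlying distribution has a single peak. That is the effect the comment describes: the end buckets are unbounded in log-odds, so a broad distribution piles up there.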