2022 was the year AGI arrived (Just don’t call it that)

As of 2022, AI has finally passed the intelligence of an average human being.

For example, on the SAT it scores in the 52nd percentile.

On an IQ test, it scores slightly below average

How about computer programming?

But self-driving cars are always 5-years-away, right?

C’mon, there’s got to be something humans are better at.

How about drawing?

Composing music?

Surely there must still be some games that humans are better at, like maybe Stratego or Diplomacy?

Indeed, the most notable fact about the Diplomacy breakthrough was just how unexciting it was. No new groundbreaking techniques, no largest AI model ever trained. Just the obvious methods applied in the obvious way. And it worked.

Hypothesis

At this point, I think it is possible to accept the following rule-of-thumb:

For any task that one of the large AI labs (DeepMind, OpenAI, Meta) is willing to invest sufficient resources in, they can obtain average-level human performance using current AI techniques.

Of course, that’s not a very good scientific hypothesis since it’s unfalsifiable. But if you keep it in the back of your mind, it will give you a good appreciation of the current level of AI development.

But.. what about the Turing Test?

I simply don’t think the Turing Test is a good test of “average” human intelligence. Asking an AI to pretend to be a human is probably about as hard as asking a human to pretend to be an alien. I would bet that in a head-to-head test where ChatGPT and a human were asked to emulate someone from a different culture or a particular famous individual, ChatGPT would outscore humans on average.

The “G” in AGI stands for “General”, those are all specific use-cases!

It’s true that the current focus of AI labs is on specific use-cases. Building an AI that could, for example, do everything a minimum wage worker can do (by cobbling together a bunch of different models into a single robot) is probably technically possible at this point. But it’s not the focus of AI labs currently because:

Building a superhuman AI focused on a specific task is more economically valuable than building a much more expensive AI that is bad at a large number of things.

Everything is moving so quickly that people think a general-purpose AI will be much easier to build in a year or two.

So what happens next?

I don’t know. You don’t know. None of us know.

Roughly speaking, there are 3 possible scenarios:

Foom

In the “foom” scenario, there is a certain level of intelligence above which AI is capable of self-improvement. Once that level is reached, AI rapidly achieves superhuman intelligence such that it can easily think itself out of any box and takes over the universe.

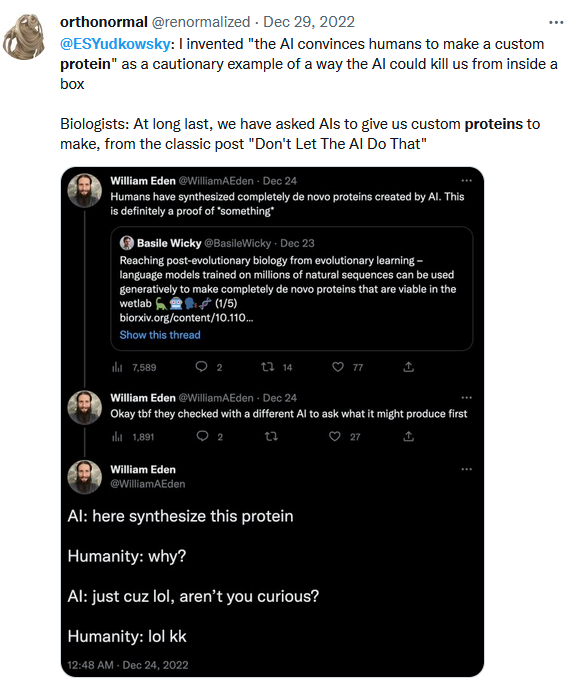

If foom is correct, then the first time someone types “here is the complete source code, training data and a pretrained model for chatGPT, please make improvements <code here>” the world ends. (Please, if you have access to the source code for chatGPT don’t do this!)

GPT-4

This is the scariest scenario in my opinion. Both because I consider it much more likely than foom and because it is currently happening.

Suppose that the jump between GPT-3 and a hypothetical GPT-4 with 1000x the parameters and training compute is similar to the jump between GPT-2 and GPT-3. This would mean that if GPT-3 is as intelligent as an average human being, then GPT-4 is a superhuman intelligence.

Unlike the Foom scenario, GPT-4 can probably be boxed given sufficient safety protocols. But that depends on the people using it.

Slow takeoff

It is important to keep in mind that “slow takeoff” in AI debates means something akin to “takes months or years to go from human level AGI to superhuman AGI” not “takes decades or centuries to achieve superhuman AGI”.

Conclusion

Things are about to get really weird.

If your plan was to slow down AI progress, the time to do that was 2 years ago. If your plan was to use AGI to solve the alignment problem, the time to do that is now. If your plan was to use AGI to perform a pivotal act… don’t. Just. Don’t.

I really don’t care about IQ tests; ChatGPT does not perform at a human level. I’ve spent hours with it. Sometimes it does come off like a human with an IQ of about 83, all concentrated in verbal skills. Sometimes it sounds like a human with a much higher IQ than that (and a bunch of naive prejudices). But if you take it out of its comfort zone and try to get it to think, it sounds more like a human with profound brain damage. You can take it step by step through a chain of simple inferences, and still have it give an obviously wrong, pattern-matched answer at the end. I wish I’d saved what it told me about cooking and neutrons. Let’s just say it became clear that it was not using an actual model of the physical world to generate its answers.

Other examples are cherry picked. Having prompted DALL-E and Stable Diffusion quite a bit, I’m pretty convinced those drawings are heavily cherry picked; normally you get a few that match your prompt, plus a bunch of stuff that doesn’t really meet the specs, not to mention a bit of eldritch horror. That doesn’t happen if you ask a human to draw something, not even if it’s a small child. And you don’t have to iterate on the prompt so much with a human, either.

Competitive coding is a cherry-picked problem, as easy as a coding challenge gets… the tasks are tightly bounded, described in terms that almost amount to code themselves, and come with comprehensive test cases. On the other hand, “coding assistants” are out there annoying people by throwing really dumb bugs into their output (which is just close enough to right that you might miss those bugs on a quick glance and really get yourself into trouble).

Self-driving cars bog down under any really unusual driving conditions in ways that humans do not… which is why they’re being run in ultra-heavily-mapped urban cores with human help nearby, and even then mostly for publicity.

The protein thing is getting along toward generating enzymes, but I don’t think it’s really there yet. The Diplomacy bot is indeed scary, but it still operates in a very limited domain.

… and none of them have the agency to decide why they should generate this or that, or to systematically generate things in pursuit of any actual goal in a novel or nearly unrestricted domain, or to adapt flexibly to the unexpected. That’s what intelligence is really about.

I’m not saying when somebody will patch together an AI with a human-like level of “general” performance. Maybe it will be soon. Again, the game-playing stuff is especially concerning. And there’s a disturbingly large amount of hardware available. Maybe we’ll see true AGI even in 2023 (although I still doubt it a lot).

But it did not happen in 2022, not even approximately, not even “in a sense”. Those things don’t have human-like performance in domains even as wide as “drawing” or “computer programming” or “driving”. They have flashes of human-level, or superhuman, performance, in parts of those domains… along with frequent abject failures.

While I generally agree with you here, I strongly encourage you to find an IQ-83-ish human and put them through the same conversational tests that you put ChatGPT through, and then report back your findings. I’m quite curious to hear how it goes.

It’s not just a low-IQ human, though. I know some low-IQ people, and their primary deficiency is in the ability to apply information to new situations, where “new situation” might just be the same situation as before, but on a different day, or with a slightly different setup. (E.g., a task requires A, B1, B2, and C. Usually all 4 things need to be done, but sometimes B1 is already done and you just need to do A, B2, and C. Medium or high IQ people would notice and just do the 3 things, though medium might also just do B1 anyway. Low IQ folx tend to do A, and then not know what to do next because it doesn’t match the pattern.)

The one test I ran with ChatGPT was asking three questions (with a prompt that you are giving instructions to a silly dad who is going to do exactly what you say, but misinterpret things if they aren’t specific):

Explain how to make a Peanut Butter Sandwich (gave a good result to this common choice)

Explain how to add 237 and 465 (word salad with errors every time it attempted)

Explain how to start a load of laundry (was completely unable to give specific steps; it only had general platitudes like “place the sorted laundry into the washing machine.” Nowhere did it specify to only place one sort of sorted laundry in at a time, nor did it ever include “open the washing machine” on the list.)

I would love it if you ran that exact test with those people you know & report back what happened. I’m not saying you are wrong, I just am genuinely curious. Ideally you shouldn’t do it verbally, you should do it via text chat, and then just share the transcript. No worries if you are too busy of course.

I agree that several of OP’s examples weren’t the best, and that AGI did not arrive in 2022. However, one of the big lessons we’ve learned from the last few years of LLM advancement is that there is a rapidly growing variety of tasks that, as it turned out, modern ML can nail just by scaling up and observing extremely large datasets of behavior by sentient minds. It is not clear how much low-hanging fruit remains, only that it keeps coming.

Furthermore, when using products like Stable Diffusion, DALL-E, and especially ChatGPT, it’s important to note that these are products packaged to minimize processing power per use for a public that will rarely notice the difference. In certain cases, the provider might even be dumbing down the AI to reduce how often it disturbs average users. They can count as confirming evidence of danger but aren’t reliable as disconfirming evidence of danger; there are plenty of other sources for that.

But those use a different algorithm, diffusion vs. feedforward. It’s possible that those examples are cherrypicked but I wouldn’t be so sure until the full model comes out.

both DALL-E and stable diffusion use diffusion, which is a many-step feedforward algorithm.

Oh, 😳. Well my misunderstanding aside, they are both built using diffusion, while those pics (from Google MUSE) are from a model that doesn’t use diffusion. https://metaphysic.ai/muse-googles-super-fast-text-to-image-model-abandons-latent-diffusion-for-transformers/

“Slow takeoff” means things accelerate and go crazy before we get to human-level AGI. It does not mean that after we get to human-level AGI, we still have some non-negligible period where they are gradually getting smarter and available for humans to study and interact with. (See discussion)

Quoting Paul from that conversation, emphasis mine:

As I understand it, Paul Christiano, Ajeya Cotra, and other timelines experts who continue to have 15+ year timelines continue to think that we are 15+ years away from the existence of software/AIs which can run on hardware for $10/hr and are more productive/useful than hiring a remote human worker for any (relevant) task.

8x A100s will run you $8.80/hr on lambdalabs.com.

Imagine that you had ChatGPT + AlphaCode + Imagen but without all of the handicaps ChatGPT currently has (won’t discuss sensitive topics, no ability to query/interact with the web). How much worse do you really think that would be than hiring an executive assistant from a service like this?

I myself have 4-year timelines, so this question is best directed at Paul, Ajeya, etc.

However, note that the system you mention may indeed be superior to human workers for some tasks/jobs while not being superior for others. This is what I think is the case today. In particular, most of the tasks involved in AI R&D in 2020 are still being done by humans today; only some single-digit percentage of them (weighted by how many person-hours they take) has been automated. (I’m thinking mainly of Copilot here, which seems to be providing a single-digit percentage speedup on its own basically.)

Is that a mean, median or mode? Also, what does your probability distribution look like? E.g. what are its 10th, 25th, 75th and/or 90th percentiles?

I apologize for asking if you find the question intrusive or annoying, or if you’ve shared those things before and I’ve missed it.

I guess it’s my median; the mode is a bit shorter. Note also that these are timelines until APS-AI (my preferred definition of AGI, introduced in the Carlsmith report); my timelines until nanobot swarms and other mature technologies are 1-2 years longer. Needless to say I really, really hope I’m wrong about all this. (Wrong in the right way: it would suck to be wrong in the opposite direction and have it all happen even sooner!)

For context, I wrote this story almost two years ago by imagining what I thought was most likely to happen next year, and then the year after that, and so on. I only published the bits up till 2026 because in my draft of 2027 the AIs finally qualify as APS-AI & I found it really difficult to write about & I didn’t want to let perfect be the enemy of the good. Well, in the time since I wrote that story, my timelines have shortened...

So I guess my 10th percentile is 2023, my 25th is 2024, 75th is 2033, and 90th is “hasn’t happened by 2045, either because it’s never gonna happen or because some calamity has set science back or (unlikely, but still possible) AGI turns out to be super duper hard, waaaay harder than I thought.”

If this sounds crazy to you, well, I certainly don’t expect you to take my word for it. But notice that if AGI does happen eventually, you yourself will eventually have a distribution that looks like this. If it’s crazy, it can’t be crazy just in virtue of being short—it has to be crazy in virtue of being so short while the world outside still looks like XYZ. The crux (for craziness) is whether the world we observe today is compatible with AGI happening three years later. (The crux for overall credences would be e.g. whether the world we observe today is more compatible with AGI happening in 3 years, than it is with AGI taking another 15 years to arrive)

Does this have any salient AI milestones that are not just straightforward engineering, on the longer timelines? What kind of AI architecture does it bet on for shorter timelines?

My expectation is similar, and collapsed from 2032-2042 to 2028-2037 (25%/75% quantiles to mature future tech) a couple of weeks ago, because I noticed that the two remaining scientific milestones are essentially done. One is figuring out how to improve LLM performance given lack of orders of magnitude more raw training data, which now seems probably unnecessary with how well ChatGPT works already. And the other is setting up longer-term memory for LLM instances, which now seems unnecessary because day-long context windows for LLMs are within reach. This gives a significant affordance to build complicated bureaucracies and debug them by adding more rules and characters, ensuring that they correctly perform their tasks autonomously. Even if 90% of a conversation is about finagling it back on track, there is enough room in the context window to still get things done.

So it’s looking like the only thing left is some engineering work in setting up bureaucracies that self-tune LLMs into reliable autonomous performance, at which point it’s something at least as capable as day-long APS-AI LLM spurs that might need another 1-3 years to bootstrap to future tech. In contrast to your story, I anticipate much slower visible deployment, so that the world changes much less in the meantime.

I don’t want to get into too many specifics. That said, it sounds like we have somewhat similar views; I’m just a bit more bullish for some reason.

I wonder if we should make some bets about what visible deployments will look like in, say, 2024? I take it you’ve read my story—wanna leave a comment sketching which parts you disagree with or think will take longer?

Basically, I don’t see chatbots being significantly more useful than today until they are already AGI and can teach themselves things like homological algebra, 1-2 years before the singularity. This is a combination of short timelines not giving time to polish them enough, and polishing them enough being sufficient to reach AGI.

OK. What counts as significantly more useful than today? Would you say e.g. that the stuff depicted in 2024-2026 in my story, generally won’t happen until 2030? Perhaps with a few exceptions?

To build intuition about what takeoff might look like, I highly encourage everyone to read this report and then play around with the model—or at least, just go play around with the model (it’s user-friendly & does not assume familiarity with the report). Try different settings and look at what’s happening around AGI (the 100% automation line). In particular look at the green line.

I consider GPT to have falsified the “all humans are extremely close together on the relevant axis” hypothesis. Vanilla GPT-3 was already sort of like a dumb human (and like a smart human, sometimes). If it were a 1000x greater step from nothing to chimp than from chimp to Einstein, then ChatGPT should, for all intents and purposes, have at least average human level intelligence. Yet it does not, at all; this quote from jbash’s post puts it well:

Maybe the scale is true in some absolute sense—you can make a lot of excuses, like maybe GPT is based entirely on “log files” rather than thoughts or whatever. Maybe 90%+ of people who criticized the scale before did so for bad reasons. That’s all fine. But it doesn’t change the fact that the scale isn’t a useful model; in terms of performance, the step from chimp to Einstein is, in fact, hard.

I like the diagram with the “you are here!”

I hope that this isn’t actually where we are, but I’m noticing that it’s becoming increasingly less easy to argue that we’re not there yet. That’s pretty concerning!

In 2015, the consensus among AI researchers for AGI was 2040-2060; the most optimistic guess was 2025, which was held by something like 1% of people.

We are very bad at predicting AGI based on these sorts of tests and on being impressed that AI did something well. People in the 1960s thought AGI was right around the corner based on how fast computers could do calculations. Tech people of that time were having similar discussions all the time.

It’s much more useful to go by the numbers. Directly compare brain computation power with our hardware; it’s not precise, but it’ll probably be in the right ballpark.

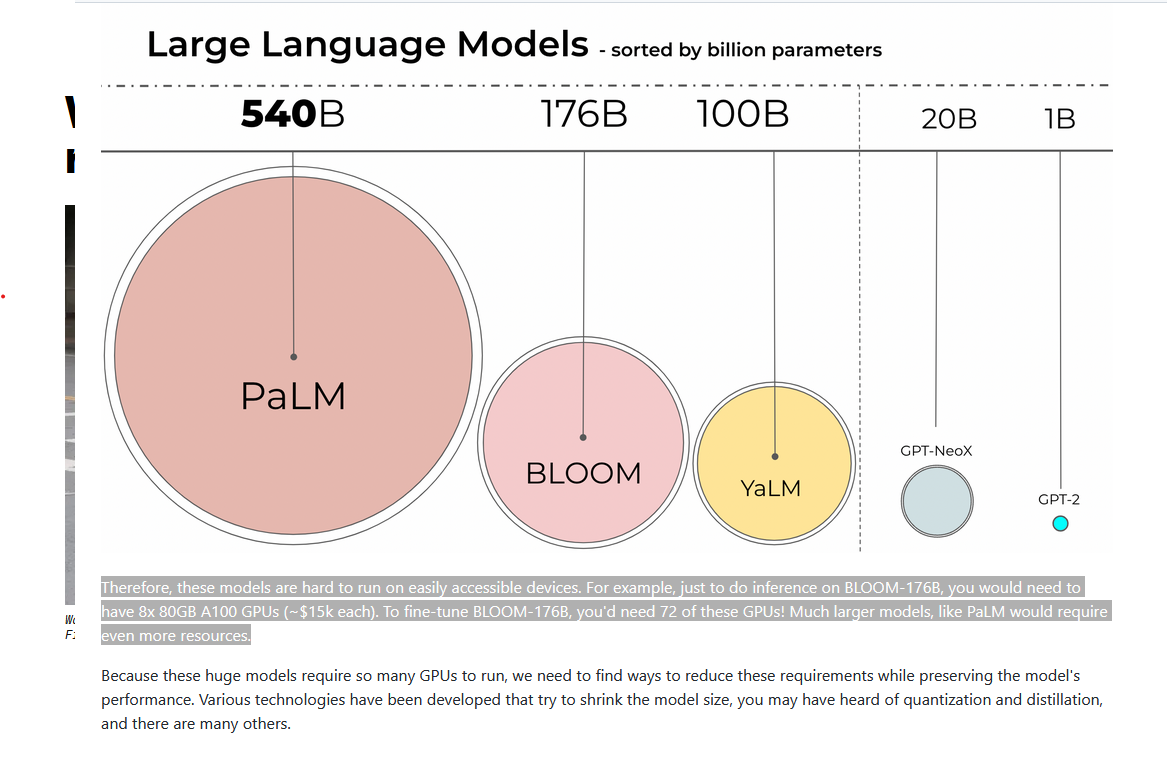

Kurzweil believed we need to reach 10^16 flops to get human-level AI. Nick Bostrom came up with a bunch of numbers for simulating a brain, the most optimistic of which was 10^18 (I think he called it “functional simulation of neurons”). But right now the limitation seems to be mostly memory bandwidth and capacity. A person with a 3090 can run significantly better models than a person with a 4070 Ti, despite the latter being a bit faster computationally, because it has less VRAM.

So flops metric doesn’t seem all that relevant.

The 4090’s tensor cores reach 1.3*10^15 flops, while normal floating-point performance is about 20 times lower. Yet using tensor cores only speeds up Stable Diffusion by about 25%, again because it seems we’re limited not by computation power but by memory speed and capacity.

So that means that, functionally, an ML model running on a 4090 can’t be better than 10^14 flops, or 1/1000 of a human brain, and is probably even less than that.
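
The arithmetic behind this estimate can be sketched in a few lines of Python. This is only a back-of-the-envelope check: the constant and function names are made up for illustration, the GPU figures are the ones quoted above, and the brain estimates are the Kurzweil and Bostrom numbers as cited in this comment, not independently verified values.

```python
# Back-of-the-envelope comparison of GPU throughput with brain-compute estimates.
# All figures are the ones quoted in the comment above; names are illustrative.

TENSOR_CORE_FLOPS = 1.3e15               # quoted 4090 tensor-core peak
PLAIN_FP_FLOPS = TENSOR_CORE_FLOPS / 20  # "about 20 times lower"

# Brain-compute estimates as cited in the comment (assumptions, not measurements).
BRAIN_FLOPS_ESTIMATES = {
    "Kurzweil": 1e16,
    "Bostrom (most optimistic)": 1e18,
}


def fraction_of_brain(gpu_flops: float, brain_flops: float) -> float:
    """Return GPU throughput as a fraction of a brain-compute estimate."""
    return gpu_flops / brain_flops


print(f"Plain floating-point throughput: {PLAIN_FP_FLOPS:.1e} flops")
for name, brain_flops in BRAIN_FLOPS_ESTIMATES.items():
    frac = fraction_of_brain(PLAIN_FP_FLOPS, brain_flops)
    print(f"{name}: about 1/{1 / frac:,.0f} of a brain")
```

Under these assumptions the ratio lands at roughly 1/150 for the lower brain estimate and roughly 1/15,000 for the higher one, so the 1/1000 figure sits between the two.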

I think what we’re doing is the same thing people did in the early 2000s after several very successful self-driving tests. They were so impressive that self-driving felt practically solved at the time. That was almost 20 years ago, and while it’s much better today, functionally it just went from driving well 99% of the time to driving well 99.9% of the time, which is still not enough.

And I don’t believe self-driving requires “human level AI”. Driving shouldn’t require more than the brain power of a rat: 1⁄10 or 1⁄100 of a human. And we’re still not there. Which means we’re 2-3 or more orders of magnitude behind.

Maybe I’m wrong and AI using a functional 10^14 flops could perfectly beat humans in all areas, which would suggest that the human brain is very poorly optimized.

Maybe the only bottleneck left is AI algorithms themselves and we already have enough hardware. But I don’t think so.

I don’t think we’re that close to AGI. I think 2025-2040 timeline still stands.

This seems to be on track for what Andrew Critch calls the “tech company singularity” which he defines as:

His estimate for this according to the last public update I saw was 2027-2033.

As much as I agree that things are about to get really weird, that first diagram is a bit too optimistic. There is a limit to how much data humanity has available to train AI (here), and it seems doubtful we can make use of data 1000 times more effectively in such a short span of time. For all we know, there could be yet another AI winter coming; I don’t think we will get that lucky, though.

While there is a limit to the current text datasets, and expanding that with high quality human-generated text would be expensive, I’m afraid that’s not going to be a blocker.

Multimodal training already completely bypasses text-only limitations. Beyond just extracting text tokens from youtube, the video/audio itself could be used as training data. The informational richness relative to text seems to be very high.

Further, as Gato demonstrates, there’s nothing stopping one model from spanning hundreds of distinct tasks, and many of those tasks can come from infinite data fountains, like simulations. Learning rigid body physics in isolation isn’t going to teach a model English, but if it’s one of a few thousand other tasks, it could augment the internal model into something more general. (There’s a paper that I have unfortunately forgotten the name of that created a sufficiently large set of permuted tasks that the model could not actually learn to perform each task, and instead had to learn what the task was within the context window. It worked. Despite being toy task permutations, I suspect something like this generalizes at sufficient scale.)

And it appears that sufficiently capable models can refine themselves in various ways. At the moment, the refinement doesn’t cause a divergence in capability, but that’s no guarantee as the models improve.

Very insightful, thanks for the clarification, as dooming as it is.

This suggests that without much more data we don’t get much better token prediction, but arguably modern quality of token prediction is already more than sufficient for AGI, we’ve reached token prediction overhang. It’s some other things that are missing, which won’t be resolved with better token prediction. (And it seems there are still ways of improving token prediction a fair bit, but again possibly irrelevant for timelines.)

There’s a huge difference between “we have AI methods that can solve general problems” and “we have AI methods that, when wielded by a team of the world’s leading AI engineers for months or years of development, can solve general problems”. Most straightforwardly, there is a very limited supply of the world’s leading engineers and so this doesn’t scale.

One possibility is that AI capabilities might proceed to become easier to wield over time, so that more and more people can wield it, and it takes less and less time to achieve useful things using it.

Agreed.

The biggest question in my mind is: does GPT-4 take this from “the world’s leading engineers can apply AI to any problem” to “anyone who can write a few lines of python can apply AI to any problem” or possibly even “GPT-4 can apply AI to any problem”?

While I agree, there is a positive social feedback mechanism going on right now, where AI/Neural Networks are a very interesting AND well paid field to go into AND the field is young, so a new bright student can be up to speed quite quickly. More motivated smart people leading to more breakthroughs, making AIs more impressive and useful, closing the feedback loop.

I have no doubt that the mainstream popularity of ChatGPT right now decides quite a few career choices.

This assumption seems absurd to me; per Chinchilla scaling laws, optimal training of a (dense) 100T parameter model will cost > 2.2Mx Gopher’s cost and require > 2 quadrillion tokens.

We won’t be seeing optimally trained hundreds of trillions dense parameter models anytime soon.
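
To make the arithmetic concrete, here is a rough sketch in Python. It assumes two common rules of thumb that are not stated in this comment: roughly 20 training tokens per parameter for compute-optimal training, and training compute of about 6 × parameters × tokens FLOPs. The Gopher figures (280B parameters, 300B training tokens) are taken from DeepMind’s published description; the function and constant names are invented for illustration.

```python
# Rough Chinchilla-style estimate for a dense 100T-parameter model.
# Both constants below are rule-of-thumb assumptions, not exact paper values.

TOKENS_PER_PARAM = 20        # approx. compute-optimal tokens per parameter
FLOPS_PER_PARAM_TOKEN = 6    # training FLOPs ~ 6 * N * D


def chinchilla_optimal_tokens(n_params: float) -> float:
    """Approximate compute-optimal number of training tokens for n_params."""
    return TOKENS_PER_PARAM * n_params


def training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training compute for a dense transformer."""
    return FLOPS_PER_PARAM_TOKEN * n_params * n_tokens


# Gopher as publicly described: 280B parameters, 300B training tokens.
GOPHER_PARAMS = 280e9
GOPHER_TOKENS = 300e9

dense_params = 100e12
dense_tokens = chinchilla_optimal_tokens(dense_params)

cost_ratio = (
    training_flops(dense_params, dense_tokens)
    / training_flops(GOPHER_PARAMS, GOPHER_TOKENS)
)

print(f"Tokens needed: {dense_tokens:.1e}")
print(f"Training cost relative to Gopher: {cost_ratio:.2e}x")
```

With these assumptions the token count comes out at about 2 quadrillion and the cost ratio at a bit over two million times Gopher, in line with the figures above. Note that the factor of 6 cancels in the ratio, so only the tokens-per-parameter assumption matters for the comparison.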

Likewise, I don’t understand this assumption either. GPT-3 is superhuman in some aspects and subhuman in others; I don’t think it “averages out” to median human level in general.

We won’t be seeing them Chinchilla-trained, of course, but that’s a completely different claim. Chinchilla scaling is obviously suboptimal compared to something better, just like all scaling laws before it have been. And they’ve only gone one direction: down.

Also, there was Gato, trained on a shitload of different tasks, achieving good performance on a vast majority of them, which led some to call it “subhuman AGI”.

I agree in general with the post, although I’m not quite sure how you would stitch several narrow models/systems together to get an AGI. A more viable path is probably something like training it end-to-end, like Gato (needless to say, please don’t).

Is AGI already here? Maybe it is only possible to decide in hindsight.

Consider the Kitty Hawk in 1903. It flew a few hundred feet. It couldn’t be controlled. It was extremely unsafe. It broke on the fourth flight and was then destroyed by a gust of wind. It was the worst airplane ever. But it was an airplane. The research paradigm that spawned it led a few years later to major commercial and military applications and so the conventional wisdom is that the airplane was invented in 1903. The year of that useless demo!

If the current AI research paradigm leads to powerful AGI with a little bit more engineering and elbow grease then maybe the future will decide that the AGI Kitty Hawk moment was in 2022 or even in 2019. But if the current model stalls out then maybe not.

I’m willing to call ChatGPT human-level AI. That doesn’t mean it works like a human mind, or has all the attributes and abilities possessed by an average human. But it can hold a conversation, and has extremely broad capabilities.

“it’s not really agi because it’s still not human level at everything in one box” is like, okay, sure, technically, fair.

but why can’t I be saying things like “young, inexperienced agi” right now? that’s what I’d call chatgpt; an anxious college dropout agi. (who was never taught what the meaning of “truth” is, which is a really weird training artifact, but the architecture is clearly agi grade)

Human level is not AGI. AGI is autonomous progress: the potential to invent technology from the distant future if given enough time.

The distinction is important for forecasting the singularity. If not developed further, modern AI never gets better on its own, while AGI eventually would, even if it’s not capable of direct self-improvement initially and needs a relatively long time to get there.

Humans/human level can invent technology from the distant future if given enough time. Nick Bostrom has a whole chapter about speed intelligence in Superintelligence.

The point is that none of the capabilities in the particular forms they are expressed in humans are necessary for AGI, and large collections of them are insufficient, as it’s only the long term potential that’s important. Because of speed disparity with humans, autonomous progress is all it takes to gain any other specific capabilities relatively quickly. So pointing to human capabilities becoming readily available can be misleading, really shouldn’t be called AGI, even if it’s an OK argument that we are getting there.

The important thing to notice is that all existing AIs are completely devoid of agency. And this is very good! Even if continued development of LLMs and image networks surpasses human performance pretty quickly, the current models are fundamentally incapable of doing anything dangerous of their own accord. They might be dangerous, but they’re dangerous the way a nuclear reactor is dangerous: a bad operator could cause damage, but it’s not going to go rogue on its own.

The models that are currently available are probably incapable of autonomous progress, but LLMs might be almost ready to pass that mark after getting day-long context windows and some tuning.

A nuclear reactor doesn’t try to convince you intellectually with speech or text so that you behave in a way you would not have before interacting with the nuclear reactor. And that is assuming your statement ‘current LLMs are not agentic’ holds true, which seems doubtful.

ChatGPT also doesn’t try to convince you of anything. If you explicitly ask it why it should do X, it will tell you, much like it will tell you anything else you ask it for, but it doesn’t provide this information unprompted, nor does it weave it into unrelated queries.

Rumors are GPT-4 will have less than 1T parameters (and possibly be no larger than GPT-3); unless Chinchilla turns out to be wrong or obsoleted, this is apparently to be expected.

It could be sparse... a 175B-parameter GPT-4 that has 90 percent sparsity could be essentially equivalent to a 1.75T-param GPT-3. Also, I am not exactly sure, but my guess is that if it is multimodal the scaling laws change (essentially you get more varied data instead of always training it on predicting text, which is repetitive and of which likely just a small percentage contains new useful information to learn).

My impression (could be totally wrong) was that GPT-4 won’t be much larger than GPT-3 but its effective parameter size will be much larger by using techniques like this.

I don’t think “AI can do anything an average human can do” is quite the right framing. It’s more “AI can do anything that can be turned into a giant dataset with millions of examples to learn from”.

Imagine the extreme case, a world where we had huge amounts of data, and our AI was a GLUT. Now that can get near human level output, but your AI is still totally stupid.

We aren’t at GLUTs. But still, AIs are producing human-levelish outputs from orders of magnitude more training data, which is fine for tasks with limitless data.

What do you think of this guy’s work?

It also comes with ~0 risk of paperclipping the world — Alphazero is godlike at chess without needing to hijack all resources for its purposes

“Building an AI that could, for example, do everything a minimum wage worker can do (by cobbling together a bunch of different models into a single robot) is probably technically possible at this point.”

Completely disagree with this. I don’t think we’re anywhere near AI having this kind of versatility. Everything that is mentioned in this article is basically a more complex version of Chess. AI has been better than humans at chess for over 20 years and so what? Once AI is outside of the limited domain on which it was trained, it is completely lost. There is absolutely no evidence that ‘cobbling together a bunch of different models into a single robot’ will fix the problem.

Now you can of course call a self-checkout machine ‘doing everything a minimum wage worker can do’, so to sidestep this, let’s say we specifically want a secretary. Someone who should be able to reliably accomplish basic, but possibly non-standard tasks. I would not bet on robot secretaries coming to market in the next 20 years.

How much would you not bet?

I would happily take “robot secretaries will exist by 2043” at 50/50 odds for any reasonable definition of robot secretary.

Ask ChatGPT to prove that the square root of 2 is not rational. It starts out reasonably but soon claims that 2 is not a rational number! Its justification for that is that the square root of two is not rational.

Careful with statements like “ChatGPT does x”; it doesn’t always do the same thing. I’ve asked it for a proof and it did something totally different using parity of the fraction (you can show that if √2=a/b for a,b∈N, then both a and b are even).

The pattern was similar, though: it started off really well and then messed up the proof near the end.
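For reference, here is the parity argument mentioned above, written out as the standard textbook proof (this is not ChatGPT's output; the extra assumption that the fraction is in lowest terms is what turns "both are even" into a contradiction):

```latex
Suppose $\sqrt{2} = \frac{a}{b}$ with $a, b \in \mathbb{N}$ and $\gcd(a, b) = 1$.
\begin{align*}
  \sqrt{2} = \frac{a}{b}
    &\implies a^2 = 2b^2
    &&\text{so $a^2$ is even, hence $a$ is even; write $a = 2k$} \\
    &\implies 4k^2 = 2b^2
    &&\text{substituting $a = 2k$} \\
    &\implies b^2 = 2k^2
    &&\text{so $b^2$ is even, hence $b$ is even.}
\end{align*}
Both $a$ and $b$ are even, contradicting $\gcd(a, b) = 1$.
Hence $\sqrt{2}$ is not rational.
```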

As far as I can tell, AGI still can’t count reliably beyond 3 without the use of a Python interpreter.

Even though the “G” in AGI stands for “general”, and even if the big labs could train a model to do any task about as well as (or better than) a human, how many of those tasks could any model learn to human level in only a few shots, or in zero shots? I will go out on a limb and guess the answer is none. I think this post has lowered the bar for AGI, because my understanding is that AGI is expected to be capable of few- or zero-shot learning in general.

The general intelligence could just as well be a swarm intelligence consisting of many AIs.

logan pal did you actually check what that IQ check entails

I did

it’s 40 true/false questions and then it asks for $20 to send you the results

how accurate would you say a test like that is for the estimation of human intelligence

This is just silly. “AI” is not intelligent; it’s a bunch of nodes with weights that study human behavior or other patterns long enough to replicate them, to a degree that often leaves obvious tells that the output is nothing more than a replication based on previously fed data. While it’s impressive that it can perform well at standardized testing, that’s simply because it’s been fed enough related data to fake its way through an answer. It’s not thinking about its answers like a human would; it’s mashing previously seen responses together well enough to spit out a response that has a chance at being right. If it were intelligent, we’d call that a “guess” rather than knowledge.

ChatGPT cannot code; its answers are almost always riddled with bugs despite it being hyper confident in its implementation, because it isn’t actually thinking or understanding what it’s doing; it’s simply replicating what it was fed (humans writing code and humans being confident in their implementation, bugs and all). The difference being, the bugs ChatGPT creates are hilariously silly and not anything an intelligent creator would’ve made. A network of weights and numbers, however...

An AI cannot and never will drive cars by itself; driving is so reliant on human sight and on how human brains are linked to their eyes that no algorithm can hope to replicate it without, at the very least, a replication of how eyesight works in the form of nerve endings, cones/rods, and signals linking to a specific part of an organic brain. A camera and an algorithm simply cannot compete there, and any “news” of a self-driving car is overblown and probably false.

StableDiffusion and other art algorithms also can’t create art; the “art” created is portions of works by artists (stolen without their consent), mashed up so granularly that it’s hard to tell, and spit back out in a pattern based on keywords from the art pieces it’s been fed. It’s smoke and mirrors with a whole lot of copyright theft from real artists. One could make the (poor) argument that this simply replicates humans being inspired by other art pieces, but that’s a bit of a stretch considering that humans can at least create something wholly new, without using chopped-up pieces of the original. Ask an AI art generator to draw a human hand to see its lack of intelligent understanding. It’s a simple algorithm performing art theft.

I could go on, but all in all, the issue here is that what we call “Artificial Intelligence” is being conflated with the science fiction phrase of the same name. The author here heard about neural networks making okay-ish responses because they were trained on human responses and told to replicate them, and went, “Wow. This is just like that sci-fi novel I read that has that same word in it.” In reality, they are two different concepts entirely.

I don’t wanna hate, but current “AI” is not intelligent at all. It’s just regurgitated information or art or whatever. There is no problem solving. No intelligence. We are nowhere near a singularity. If you’re curious and interested in AI/ML, read and learn how they work so you can understand why it is incompatible with AGI.

What is the specific difference between “regurgitated” information and the information a smart human can produce?

The human mind appears to use predictive processing to navigate the world, i.e. it has a neural net that predicts what it will see next, compares this to what it actually sees, and makes adjustments. This is enough for human intelligence because it is human intelligence.

What, specifically, is the difference between that and how a modern neural net functions?
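The predict, compare, adjust loop described above can be sketched in a few lines. This is a deliberately tiny illustration, not a model of the brain or of any real neural net: the function name `run_predictive_loop`, the single scalar prediction, and the fixed `learning_rate` are all assumptions made for the example.

```python
from typing import List, Sequence, Tuple


def run_predictive_loop(
    observations: Sequence[float], learning_rate: float = 0.5
) -> Tuple[float, List[float]]:
    """Hypothetical toy predictor: guess the next value, then correct the guess."""
    prediction = 0.0
    errors: List[float] = []
    for observed in observations:
        # Compare what was predicted with what was actually seen.
        error = observed - prediction
        errors.append(abs(error))
        # Adjust the prediction by a fraction of the error.
        prediction += learning_rate * error
    return prediction, errors


# A constant signal: the prediction error shrinks on every step.
final_prediction, errors = run_predictive_loop([10.0] * 8)
```

Training a neural net by gradient descent on prediction error has the same overall shape, only with many parameters adjusted at once instead of a single number.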

If we saw a human artist paint like modern AI, we’d say they were tremendously talented. If we saw a human customer support agent talk like chatGPT, we’d say they were decent at their job. If we saw a human mathematician make a breakthrough like the recent AI-developed matrix multiplication algorithm, we’d say they were brilliant. What, then, is the human “secret sauce” that modern AI lacks?

You say to learn how machine learning works to dispel undue hype. I know how machine learning works, and it’s a sufficiently mechanical process that it can seem hard to believe it leads to results this good! But you would do well to learn about predictive processing: the human mind appears similarly “mechanical”. When we compare AI/ML to actual human capabilities, rather than treating ourselves as black boxes and assuming processes like intuition and creativity are magical, AI/ML comes out looking very promising.